Premise

Software that tracks your posture and alerts you when it is wrong, so you can avoid health problems when working from home.

Synopsis

More and more people are working from home, and the COVID-19 pandemic has only accelerated this trend. Working from home is linked to back and neck pain caused by poor posture when sitting at a desk. After researching posture-tracking technology, I created a web app that helps people keep a good posture by alerting them when their posture is wrong.

Introduction & context

Working from home is not new, but it is becoming more and more common, and the COVID-19 pandemic accelerated this trend. According to an analysis by McKinsey, roughly 50% of jobs in western countries could be done with at least 2 days of remote work without loss of productivity (Lund et al., 2021).

Working from home has great benefits for both employers and employees. Employers save on average 1% of employee costs per day worked from home through lower costs for office space, gas and electricity (PwC, 2020). It also seems to increase employee productivity. Employees in return get, among other things, more flexible working hours, less commuting stress and location independence. Even the environment benefits, both from the reduced need for office space and from lower commuter travel emissions (Courtney, 2021a).

So it seems working from home has only upsides for everybody involved, but there is a downside that could affect both employees and employers in the long run: working from home also brings physical and mental health issues. After all, a big part of the increased productivity comes from losing the work-life balance and working longer hours (Martin, 2020).

Focusing on physical health, according to McDowell et al. (2020) working from home causes people to:

- Spend more time sitting

- Spend more time behind screens

- Be less physically active

These changes cause people not only to sit longer but also to sit in a wrong position. In a recent study, 41.2% of people forced to work from home reported lower back issues and 23.5% reported neck pain (Moretti et al., 2020, p. 6284). This is usually because our home offices are not set up for long working days (Gangopadhyay, 2020, p. 14).

These problems become more relevant when companies decide to make this a long-term habit by increasing the number of work-from-home days after the COVID-19 pandemic is contained. In a survey McKinsey conducted among corporate executives before the pandemic, only 22% of the respondents said they expected their employees to work more than 2 days at home. In a recent rerun of the survey this changed to 38%, with half of them (19% of the total) even expecting their employees to work 3 or more days from home after the pandemic (Lund et al., 2020). More recently, 18 out of 23 large, well-known companies that currently work from home announced a switch to 50% or even permanent working from home after the pandemic (Courtney, 2021b).

Based on this, I decided to research how modern technology could improve the physical health of people working from home.

Research question:

How could technology help you improve your physical health during long working hours from home?

Process

After defining the problem space, I followed a creative, critical making process. Critical making relies on a constructionist methodology that emphasizes the materiality of knowledge making and sharing (Ratto, 2011, p. 257). This creative process took 4 weeks, from 3 March to 31 March 2021. I started with an initial idea and built a prototype. I evaluated, connected and grew this prototype each week, so that it evolved bit by bit into a more sophisticated prototype. It is not an end product; it remains a prototype. In this chapter I describe the steps I took, the challenges I faced and how I overcame them in my creative process.

Brainstorm

I broke down the problem of physical health into two areas: one problem is caused by a wrong posture, and the other by not taking (enough) breaks or working for too long at a stretch. Based on these, I imagined how technology could ‘sense’ what was going on. I ended up with two feasible possibilities.

- Build weight sensors into a desk mat to detect breaks and possibly position, based on the weight distribution.

- Use the webcam of the computer the user is working on to detect changes in posture and possibly breaks.

Next, I tried to imagine how technology could stimulate positive behaviour in the user.

- Adjust seat and desk for optimal posture

- Connect with Microsoft Outlook to schedule breaks when needed

- Alert the user via the computer they are working on

- Alert the user via a physical object, e.g. haptic feedback in the chair or a visual or sound signal on the desk

As I didn’t have a specific preference among these possibilities, I started with the webcam and computer, as I already had access to both.

Exploring technologies

A lot of work has already been done on pose estimation. Based on some advice, I looked at different Python-based solutions. We had already used Python in 2 previous courses, so this seemed the easiest first step. Most projects I came across used the OpenCV (Open Source Computer Vision Library) toolkit and TensorFlow (sometimes in combination with Keras) for deep learning, to classify specific poses or track faces.

This is also where I ran into my first challenge. When I installed the packages needed to run existing programs I had found on GitHub that used these techniques, Visual Studio Code, and later Spyder as well, kept reporting that packages were not installed, while the installer reported that the requirement was already satisfied. I tried it on my other computer, where no errors appeared, but that one didn’t have a webcam, so I was unable to check whether it actually worked.

While searching the web for solutions I stumbled upon p5.js, a JavaScript library for beginner coding based on Processing (p5.js, 2021). Via ml5.js it can easily be connected to PoseNet, a machine learning model that detects poses. After following a tutorial, I was able to track my nose, and it worked perfectly. I had found my technique.

Detecting detailed changes

In most examples I found, PoseNet was used for detecting full-body poses. I was trying to detect small changes, to see if the user was bending their back or neck. Of the 17 keypoints that PoseNet can track, the first 7 are visible when sitting at a desk: the nose, both eyes, both ears and both shoulders.

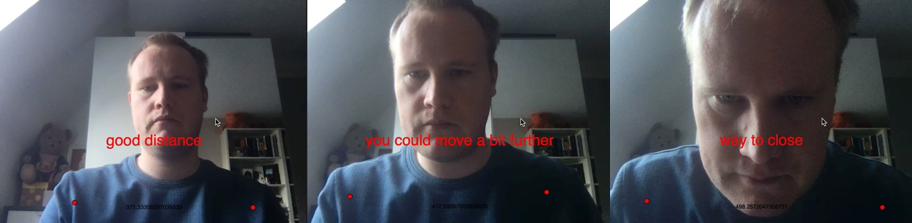
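As a minimal sketch of how the desk-visible keypoints could be selected, the snippet below filters a list of keypoints down to the first 7 parts. It assumes keypoints shaped like the `{ part, score, position }` objects that ml5.js PoseNet reports; the helper name `deskKeypoints` and the `minScore` cut-off of 0.5 are illustrative assumptions, not taken from the prototype.

```javascript
// The 17 PoseNet keypoint names, in the order the model reports them.
const POSENET_PARTS = [
  "nose", "leftEye", "rightEye", "leftEar", "rightEar",
  "leftShoulder", "rightShoulder", "leftElbow", "rightElbow",
  "leftWrist", "rightWrist", "leftHip", "rightHip",
  "leftKnee", "rightKnee", "leftAnkle", "rightAnkle",
];

// The first 7 parts are the ones visible when sitting at a desk.
const DESK_PARTS = POSENET_PARTS.slice(0, 7);

// Keep only desk-visible keypoints that were detected with enough confidence.
// minScore is a hypothetical cut-off chosen for illustration.
function deskKeypoints(keypoints, minScore = 0.5) {
  return keypoints.filter(
    (kp) => DESK_PARTS.includes(kp.part) && kp.score >= minScore
  );
}
```

Filtering on the score first would also be a simple way to ignore keypoints the model is only guessing at, such as hips hidden behind the desk.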

I started by tracking my shoulders. When I moved closer to or further from the webcam, the distance between them on screen increased and decreased, so I measured that distance as well. Then I found some information on the right posture and how far you should sit from the screen (Ergotron, 2021). I positioned myself 50 cm from the screen, wrote down the distance between my shoulders and hardcoded it in my program.

Using an if-else statement to give feedback based on the distance, I had my first working prototype.
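A first version of such a check could look roughly like the sketch below. The reference value of 300 pixels, the 10% tolerance and the ‘too far’ state are assumptions for illustration; the description above only states that a hardcoded shoulder distance and an if-else statement were used.

```javascript
// Straight-line distance in pixels between the two shoulder keypoints.
function shoulderDistance(leftShoulder, rightShoulder) {
  return Math.hypot(
    rightShoulder.x - leftShoulder.x,
    rightShoulder.y - leftShoulder.y
  );
}

// Hypothetical shoulder distance measured once at 50 cm from the screen.
const REFERENCE_SHOULDER_PX = 300;

// Give feedback based on how far the distance is from the reference.
// A larger on-screen distance means the user is closer to the webcam.
function distanceFeedback(distance, reference = REFERENCE_SHOULDER_PX, tolerance = 0.1) {
  if (distance > reference * (1 + tolerance)) {
    return "too close";
  } else if (distance < reference * (1 - tolerance)) {
    return "too far";
  } else {
    return "ok";
  }
}
```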

Although it was working, it was far from perfect. The biggest issue was that the tracking wasn’t flawless, and therefore the prototype jumped between states when it shouldn’t.

The tracking was really fast: it could jump between a few spots around a keypoint within milliseconds. One possible way to solve this is to take multiple tracking points and calculate the mean to get smoother tracking. I remembered from an earlier tutorial that this could be solved quite easily with the linear interpolation function. I pass it the current estimate and the new position, and it calculates and returns the point exactly in the middle between the two.
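The smoothing step can be sketched as follows. p5.js provides `lerp(start, stop, amt)`; the plain JavaScript function below reimplements the same formula so the example runs on its own. The names `lerpValue` and `smoothPoint` and the sample coordinates are illustrative.

```javascript
// Linear interpolation: amt = 0 returns start, amt = 1 returns stop,
// amt = 0.5 returns the value exactly in the middle.
function lerpValue(start, stop, amt) {
  return start + (stop - start) * amt;
}

// Move the previous estimate halfway towards the newly measured position.
function smoothPoint(previous, measured, amt = 0.5) {
  return {
    x: lerpValue(previous.x, measured.x, amt),
    y: lerpValue(previous.y, measured.y, amt),
  };
}

// Example: the estimate moves from (100, 200) halfway towards (110, 190).
const smoothedNose = smoothPoint({ x: 100, y: 200 }, { x: 110, y: 190 });
// smoothedNose is { x: 105, y: 195 }
```

Applying this every frame means each new reading only counts for half, so a single jittery measurement moves the displayed point less than the raw tracking would.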

Another idea was that the shoulders might not be the best keypoints to track. I went looking for the best keypoints and distances to track.

What to track

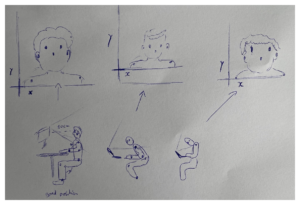

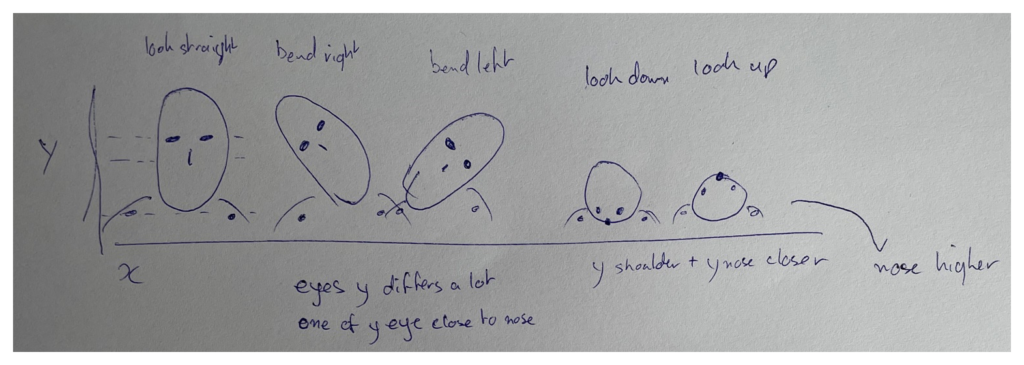

I started by sketching how the keypoints changed in different poses. From the sketches it was clear that it’s all about minor changes, but that the eyes and nose are possibly reliable keypoints to track.

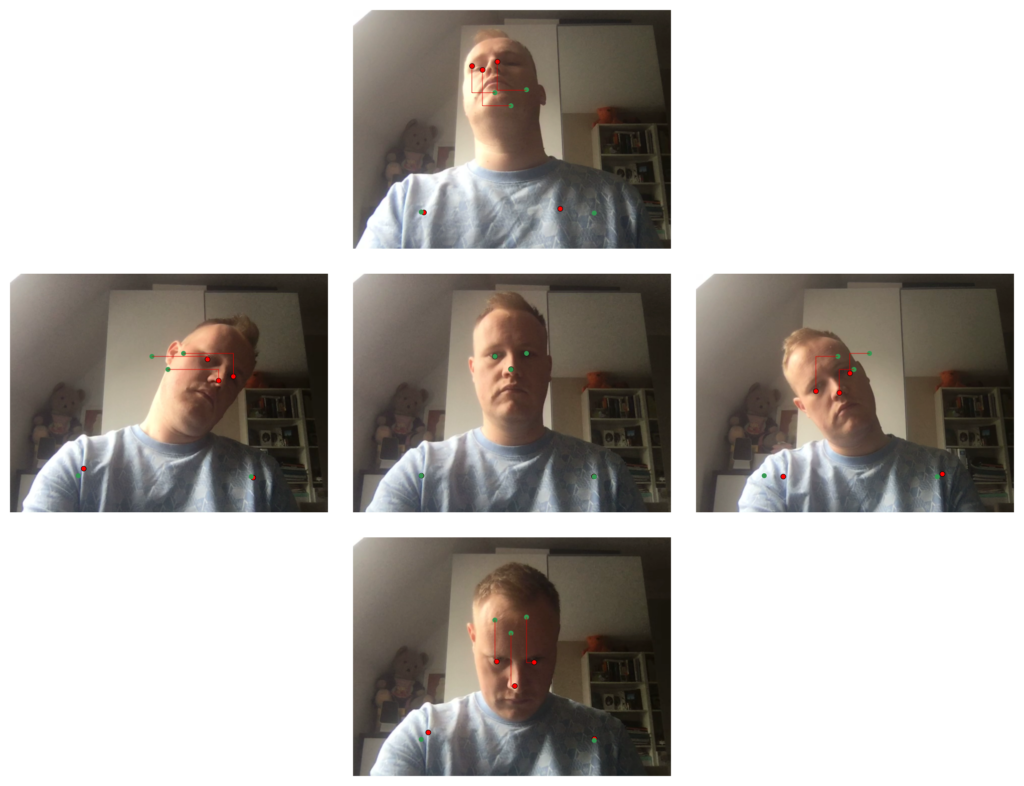

I recreated my sketch in the prototype by tracking my eyes and nose. I took a screenshot of each pose and manually drew how the keypoints change from the original pose.

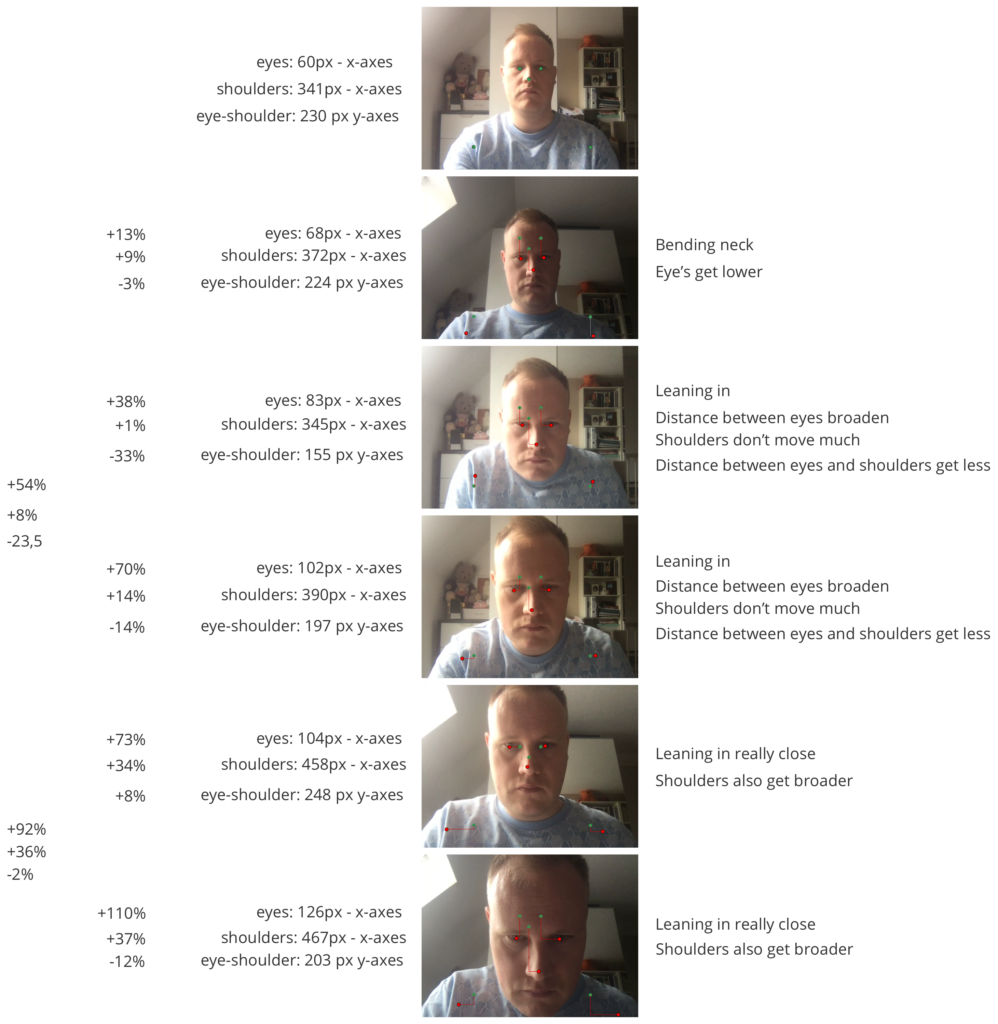

Choosing the eyes and nose seemed to be a good decision. The number of possible poses to track is enormous, but as I want to focus on working poses, it will mostly be bending the neck or leaning in. I repeated the experiment with those specific poses and manually calculated some distances and the changes in those distances. The overview starts with a good pose followed by the changed poses; on the left are the calculated distances and their changes in percentages, and further to the left the average percentages.

The eyes definitely seemed more conclusive than the shoulders, but I felt I needed even more certainty, as the individual percentages differ a lot. So I built the tracking of all 3 distances into the prototype. I also wrote a script to do two things:

- Take a screenshot every minute (due to the enormous CPU and memory usage, this ended up being closer to every 2.5 minutes)

- At the same time, write the distances to a table that I could download as a .csv file

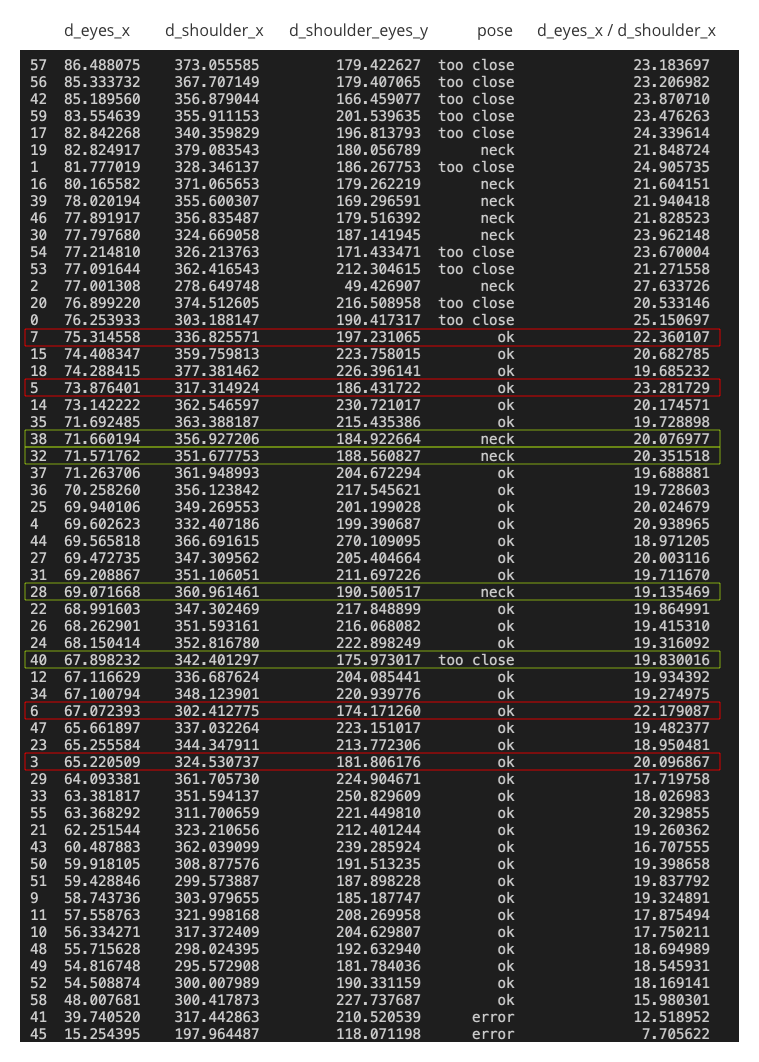

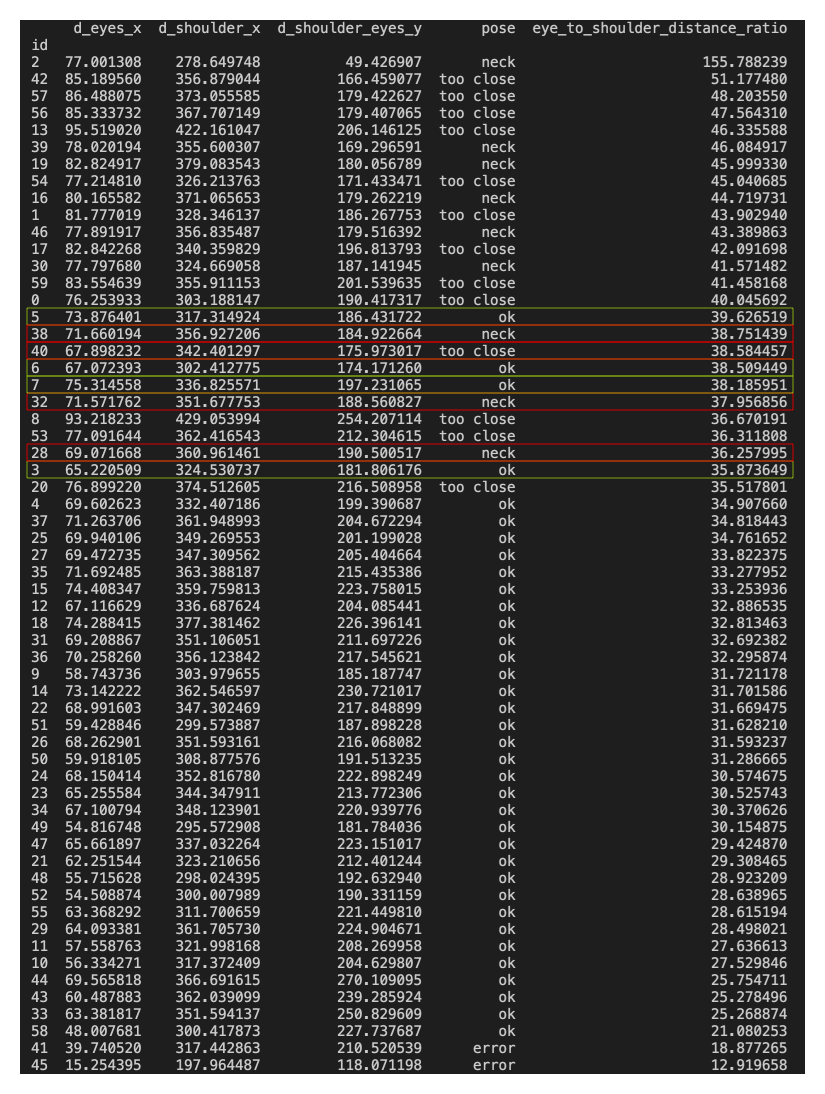
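The logging step could be sketched roughly as below: each measurement becomes one row, and the rows are joined into CSV text. This is plain JavaScript rather than the p5.js table functions, and the column names (including the third distance, `d_shoulders_x`) are assumptions for illustration.

```javascript
// Hypothetical column names for the logged distances.
const LOG_COLUMNS = ["frame", "d_eyes_x", "d_shoulder_eyes_y", "d_shoulders_x"];

// Turn an array of measurement objects into CSV text with a header row.
// Assumes all values are plain numbers, so no quoting or escaping is needed.
function toCsv(rows, columns = LOG_COLUMNS) {
  const header = columns.join(",");
  const lines = rows.map((row) => columns.map((name) => row[name]).join(","));
  return [header, ...lines].join("\n");
}
```

A classification column such as ‘ok’ or ‘neck’ can then be added by hand in a spreadsheet before the file is analysed.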

I ran the prototype in the background during 2 hours of work meetings. I ended up with 59 screenshots and a table of results. I manually added 4 classes to this table: ‘ok’, ‘neck’, ‘too close’ and ‘error’. I loaded the table in Python and tried different sorting and calculation options. I ended up with 2 quite distinctive features:

The first table shows the distance on the x-axis between the left and right eye. The second table shows a calculated value: the x-axis distance between the eyes divided by the y-axis distance between the shoulders and eyes, times 100 ((d_eyes_x/d_shoulder_eyes_y)*100). Some readings were still not classified correctly. When I checked these screenshots again, I could live with the wrong classification: I did have a slightly bent neck in these cases, but mostly because I was leaning to one side. This could be caught by tracking the x-axis distance of the nose relative to the two shoulders.

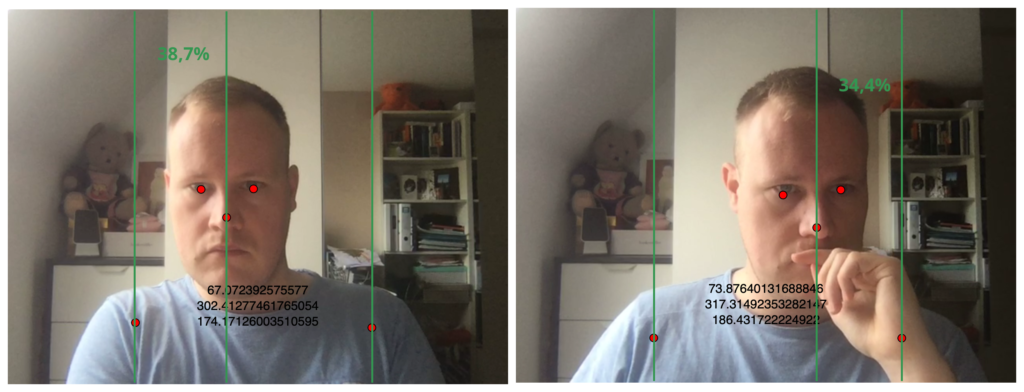

Screenshots 6 and 5, with added lines and the calculation of the relative shoulder-nose-shoulder distance.
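The two features and the nose check can be sketched as below. The sketch assumes a pose object with `{x, y}` points named after the PoseNet parts. Averaging the left and right side for the y positions, and expressing the nose position as a fraction between the shoulders, are assumptions; the text only gives the formula (d_eyes_x/d_shoulder_eyes_y)*100.

```javascript
// Compute the posture features from one pose.
function postureFeatures(pose) {
  // Feature 1: x-axis distance between the eyes.
  const dEyesX = Math.abs(pose.leftEye.x - pose.rightEye.x);

  // y-axis distance between shoulders and eyes (averaging both sides is an assumption).
  const eyesY = (pose.leftEye.y + pose.rightEye.y) / 2;
  const shouldersY = (pose.leftShoulder.y + pose.rightShoulder.y) / 2;
  const dShoulderEyesY = Math.abs(shouldersY - eyesY);

  // Feature 2: (d_eyes_x / d_shoulder_eyes_y) * 100.
  const ratio = (dEyesX / dShoulderEyesY) * 100;

  // Nose position between the shoulders: 0.5 means centred, other values mean leaning.
  const noseBetweenShoulders =
    (pose.nose.x - pose.rightShoulder.x) /
    (pose.leftShoulder.x - pose.rightShoulder.x);

  return { dEyesX, dShoulderEyesY, ratio, noseBetweenShoulders };
}
```

For a pose with the eyes 60 pixels apart and the shoulders 240 pixels below the eyes, the ratio is 25.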

Switching to relative distance

In the prototype I used hardcoded numbers for my specific case. To allow other users to test and improve their posture, I needed to adapt it to their situation. I wanted to do this by capturing their ‘good’ position and measuring the change in percentages. In the new prototype I added a button to capture these distances. Because of the earlier smoothing issue, I wanted more certainty than capturing two distances and using linear interpolation again would give. The button now captures the distances for a full second in an array, and by dividing their sum by the length of the array I get the mean distance of the initial ‘good’ pose.

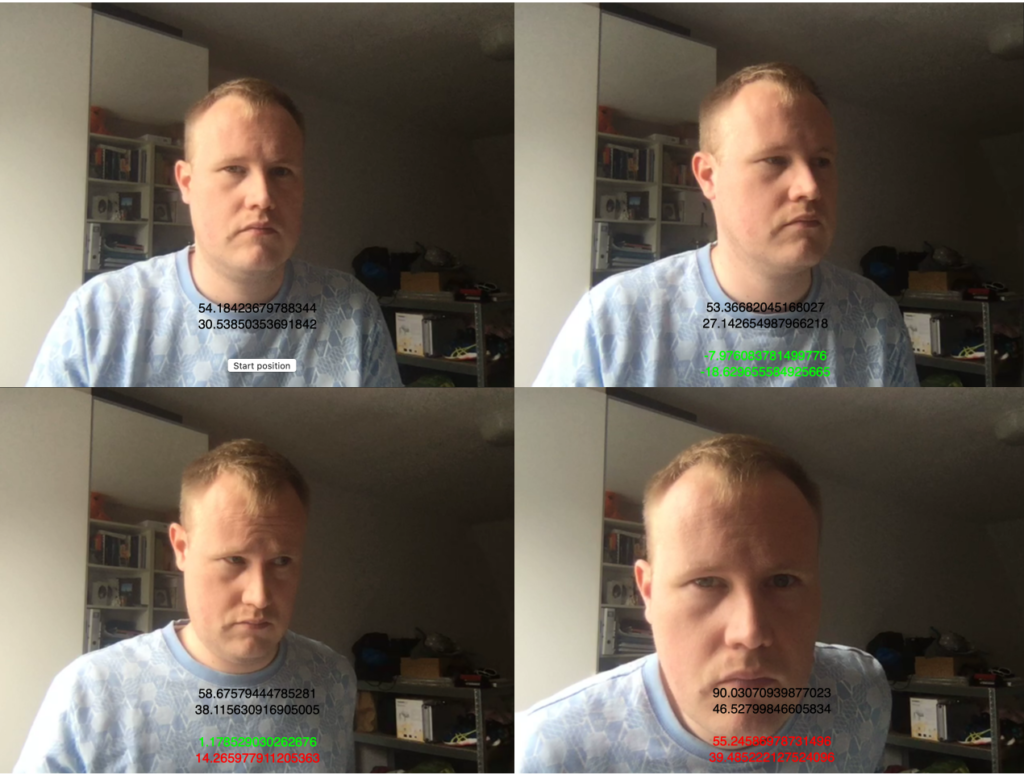

Top left: screen with the button to set a good position. Top right: a good pose (both percentages are back at the level of the set pose). Bottom left: bent neck (the shoulder-to-eye distance on the y-axis is 14% higher than at the start, but the x-axis distance between the eyes is just 1% higher). Bottom right: too close (both percentages are way up from the original pose).
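The calibration and the percentage comparison can be sketched like this. The function names and the sample numbers are illustrative; only the idea of averaging one second of captured distances and comparing later readings in percentages comes from the description above.

```javascript
// Mean of the distances captured while the button calibration runs.
function mean(values) {
  if (values.length === 0) {
    throw new Error("no samples captured");
  }
  const sum = values.reduce((total, value) => total + value, 0);
  return sum / values.length;
}

// Change of the current distance relative to the calibrated baseline, in percent.
function percentChange(current, baseline) {
  return ((current - baseline) / baseline) * 100;
}

// Example: three captured eye distances give a baseline of 60 pixels.
const baselineEyes = mean([58, 60, 62]);
// A later reading of 75 pixels is 25% above that baseline.
const changeEyes = percentChange(75, baselineEyes);
```

Because every later reading is divided by the user's own baseline, the same thresholds can be used for people of different sizes and for different webcam positions.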

To define thresholds, I ran the prototype while displaying the differences in percentages from the original capture. I now have the following thresholds and states:

- 10% increase in the shoulder-to-eye distance on the y-axis and less than 8% increase in the x-axis distance between the eyes: bending neck

- 8% increase in the x-axis distance between the eyes: danger zone

- 18% increase in the x-axis distance between the eyes and more than 12% increase in the shoulder-to-eye distance on the y-axis: too close

Everything else is seen as a good pose.
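The states above could be expressed as a small function like the sketch below. The order of the checks and whether each threshold is inclusive are assumptions; the inputs are the percentage increases relative to the captured ‘good’ pose.

```javascript
// Classify a pose from the percentage increases relative to the calibrated pose.
// eyesXPct: increase in x-axis distance between the eyes.
// shoulderEyeYPct: increase in y-axis distance between shoulders and eyes.
function classifyPose(eyesXPct, shoulderEyeYPct) {
  // Check the most severe state first so it is not masked by "danger zone".
  if (eyesXPct >= 18 && shoulderEyeYPct > 12) {
    return "too close";
  }
  if (eyesXPct >= 8) {
    return "danger zone";
  }
  // At this point eyesXPct is below 8, as the bending neck rule requires.
  if (shoulderEyeYPct >= 10) {
    return "bending neck";
  }
  return "good";
}
```

With an eye-distance increase of 1% and a shoulder-to-eye increase of 14%, this sketch returns "bending neck".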

Improved feedback

Each of these thresholds is a single point at which the prototype jumps to the next state. The problem is that around the 8% increase in x-axis distance between the eyes, the prototype still switches a lot between states, and the same happens around the 18% threshold. This creates a lot of state changes in a short time, which in turn means a lot of changes in the visual feedback.

In this video you see how the feedback changes rapidly between poses:

I wanted to change this so that the feedback is only activated when a state stays the same for a short amount of time. p5.js doesn’t have a built-in timing function for this, but I found a way to do it with the modulus operator, which I had also used for the screenshots. I first tried this on the millis() function, which returns the number of milliseconds since the program started. Taking this value modulo 100 gives what remains after every whole multiple of 100 is subtracted. If the remainder equals 0, the program executes a function that saves the current state and checks whether it is the same as in the previous run.

In theory this should run the function every 100 milliseconds (e.g. at 2000, 2100, 2200, etc.). If two states in a row were the same, the feedback would change. In practice the code runs in a loop, and each pass takes some milliseconds. Therefore the chance that the counter was exactly divisible by 100 at the moment the if statement was reached was very small.
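The effect can be illustrated with a small simulation. It assumes the draw loop reaches the check once every 17 milliseconds (roughly 60 frames per second); the timestamps are simulated rather than read from millis(). The second function shows an elapsed-time comparison as one alternative; it is not the approach used in the prototype.

```javascript
// Count how often a timestamp is exactly divisible by the interval.
function countExactHits(frameTimesMs, intervalMs = 100) {
  return frameTimesMs.filter((t) => Math.floor(t) % intervalMs === 0).length;
}

// Alternative: trigger when at least intervalMs has passed since the last trigger.
function countElapsedHits(frameTimesMs, intervalMs = 100) {
  let lastTrigger = 0;
  let hits = 0;
  for (const t of frameTimesMs) {
    if (t - lastTrigger >= intervalMs) {
      hits += 1;
      lastTrigger = t;
    }
  }
  return hits;
}

// Simulated timestamps for 60 frames: 17, 34, ..., 1020 ms.
const simulatedFrameTimes = Array.from({ length: 60 }, (_, i) => (i + 1) * 17);

const exactHits = countExactHits(simulatedFrameTimes);     // 0
const elapsedHits = countElapsedHits(simulatedFrameTimes); // 10
```

In this simulated second the exact modulus check never fires, because none of the timestamps happens to be a multiple of 100, while the elapsed-time comparison fires 10 times.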

I found I could also do this every x frames. By default the program runs at 60 frames per second, although this can be lower if the program takes up a lot of CPU or memory. If the prototype gets too big, this would lead to the same problems as with the milliseconds, but I gave it a try and it seems to work.

I now check every 6 frames (roughly 100 ms) whether the latest reading is the same as the one 6 frames earlier. If both are the same, the pose state changes and the corresponding new feedback is given. If they differ, the pose state, and therefore the feedback, stays the same.
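This double check can be sketched as a small helper that is called once per frame. In a p5.js sketch the frame number would come from `frameCount`; here it is passed in so the example runs on its own. The helper name and the initial ‘good’ state are assumptions.

```javascript
// Create a checker that only changes the confirmed pose when two readings,
// taken checkEveryFrames apart, agree with each other.
function createPoseDebouncer(checkEveryFrames = 6) {
  let previousReading = null;  // reading from the previous check
  let confirmedPose = "good";  // pose that drives the feedback (initial value assumed)

  return function update(frameNumber, currentReading) {
    // Only compare on every 6th frame; otherwise keep the current feedback.
    if (frameNumber % checkEveryFrames !== 0) {
      return confirmedPose;
    }
    if (currentReading === previousReading) {
      confirmedPose = currentReading;
    }
    previousReading = currentReading;
    return confirmedPose;
  };
}

// Example: a single "too close" reading does not change the feedback yet,
// a second identical reading 6 frames later does.
const updatePose = createPoseDebouncer();
const afterFirstCheck = updatePose(6, "too close");   // "good"
const afterSecondCheck = updatePose(12, "too close"); // "too close"
```

Because the modulus is applied to a frame counter that increases by exactly one per frame, every multiple of 6 is reached, unlike the millisecond counter.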

In this video I tested it with my wife (to check the relative distance). At the top you can see the first 2 checks; when they are the same, the 3rd (the feedback pose) also changes, together with the feedback on the screen.

Feedback changes with double check

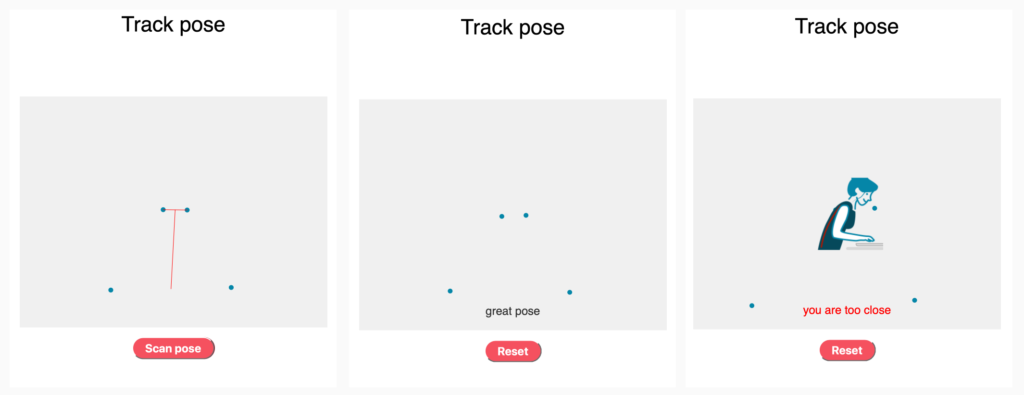

Feeling of privacy

The prototype uses your webcam input, which is video, but feeling recorded for a full day can have a negative impact on your sense of privacy. So instead of showing the input the prototype takes, I altered the visualization to show only what we actually use from the input: the tracked points.

Conclusion and next steps

I started with the research question: “How could technology help you improve your physical health during long working hours from home?”. In the first few weeks this got narrowed down to addressing posture with a combination of p5.js and ml5.js libraries as technology.

In roughly one week I had a first prototype that proved it was possible. In the 3 weeks that followed I learned a lot about how to iterate on and refine this prototype, both to sharpen the detection of a posture and to improve the feedback to the user.

One of the biggest challenges remained that the differences between a good posture and a bad posture are relatively small from the angle at which the prototype tracks the posture. I know this could be solved by changing the angle and distance I track posture from, but my aim was also to have a usable set-up: a prototype that could help people with the equipment they already have at home.

Next steps

One big improvement that is still needed is testing or data collection with a larger audience. To make this possible, the prototype needs a more usable set-up, where a user can simply open a website to set a good position and keep tracking their posture.

The prototype now relies on visual feedback in the browser. This could be extended so that the prototype can run in the background. Working with sound is a possibility, but as the user might be in a meeting, a connection with a physical object on the user’s desk (e.g. with an Arduino) would be another next step.

Last but not least, it can feel like this program is invading the user’s privacy, as the webcam needs to be active for the full working day. I already tried altering the visualization, but it should be researched whether this actually helps. In addition, other possibilities and techniques for collecting input data could be researched as well.

Links

Collection of the prototype in different stages: https://editor.p5js.org/freekplak/collections/sCUtKNyzM

Try it yourself: https://www.freekplak.nl/posture_tracking/

Literature

Courtney, E. (2021a, February 25). The Benefits of Working From Home: Why The Pandemic Isn’t the Only Reason to Work Remotely. FlexJobs Job Search Tips and Blog. https://www.flexjobs.com/blog/post/benefits-of-remote-work/

Courtney, E. (2021b, March 2). 23 Companies Switching to Long-Term Remote Work. FlexJobs Job Search Tips and Blog. https://www.flexjobs.com/blog/post/companies-switching-remote-work-long-term/

Ergotron. (2021). Make Ergonomics Simple: Tips for Adding Ergonomics to your Computing. https://www.ergotron.com/en-us/ergonomics/ergonomic-equation

Gangopadhyay, S. (2020). Invited Lecture 12: Ergonomics and Health: Working from Home under COVID 19. BLDE University Journal of Health Sciences, 5(3), 14. https://doi.org/10.4103/2468-838x.303751

Lund, S., Cheng, W., Dua, A., De Smet, A., Robinson, O., & Sanghvi, S. (2020, November 17). What 800 executives envision for the postpandemic workforce. McKinsey & Company. https://www.mckinsey.com/featured-insights/future-of-work/what-800-executives-envision-for-the-postpandemic-workforce

Lund, S., Madgavkar, A., Manyika, J., & Smit, S. (2021, March 3). What’s next for remote work: An analysis of 2,000 tasks, 800 jobs, and nine countries. McKinsey & Company. https://www.mckinsey.com/featured-insights/future-of-work/whats-next-for-remote-work-an-analysis-of-2000-tasks-800-jobs-and-nine-countries

Martin, M. (2020, March 27). 20 Unexpected Health Problems When Working From Home. Eat This Not That. https://www.eatthis.com/unexpected-health-problems-when-working-from-home/

McDowell, C. P., Herring, M. P., Lansing, J., Brower, C., & Meyer, J. D. (2020). Working From Home and Job Loss Due to the COVID-19 Pandemic Are Associated With Greater Time in Sedentary Behaviors. Frontiers in Public Health, 8, 597619. https://doi.org/10.3389/fpubh.2020.597619

Moretti, A., Menna, F., Aulicino, M., Paoletta, M., Liguori, S., & Iolascon, G. (2020). Characterization of Home Working Population during COVID-19 Emergency: A Cross-Sectional Analysis. International Journal of Environmental Research and Public Health, 17(17), 6284. https://doi.org/10.3390/ijerph17176284

PwC. (2020). The costs and benefits of working from home. https://www.pwc.nl/nl/actueel-publicaties/assets/pdfs/pwc-the-costs-and-benefits-of-working-from-home.pdf

Ratto, M. (2011). Critical Making: Conceptual and Material Studies in Technology and Social Life. The Information Society, 27(4), 252–260. https://doi.org/10.1080/01972243.2011.583819