PREMISE

Using a chatbot to raise awareness of gender bias in machine-translated text

SYNOPSIS

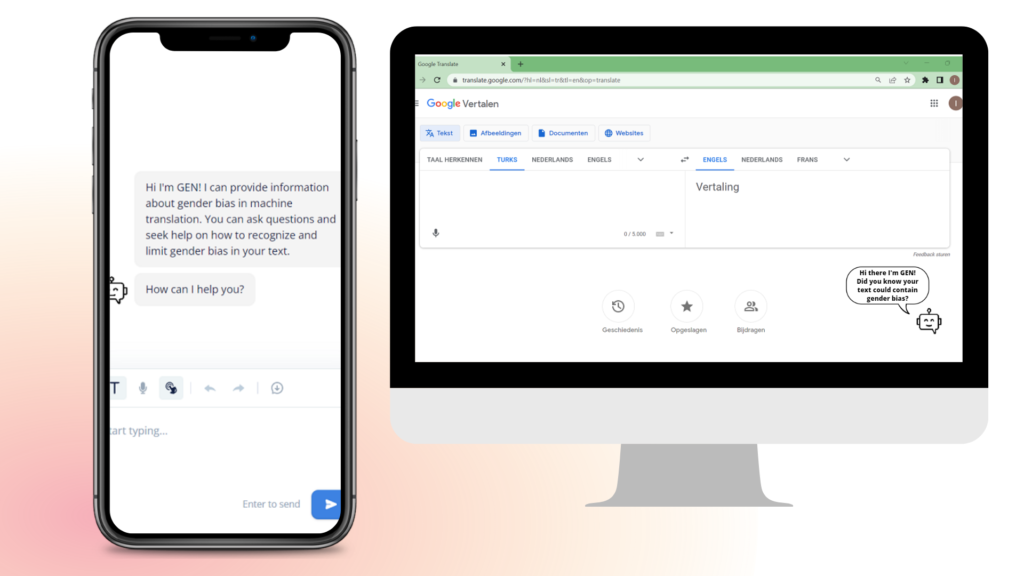

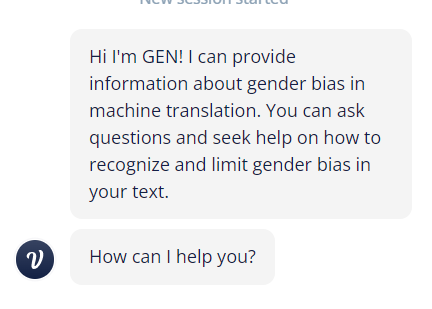

In my opinion, gender bias in machine translation is a critical issue that doesn’t receive sufficient attention. Most users of machine translation tools are not aware that their translated text can contain this bias. This project addresses that gap with a chatbot prototype designed to raise awareness and to inform and educate users about gender bias in machine translation and how it can perpetuate gender stereotypes.

Addressing gender bias in machine translation matters because it can shape the perception of gender roles and reinforce negative stereotypes (Savoldi et al., 2021). It is therefore important to equip users of these tools with the knowledge and awareness to identify and mitigate gender bias. The project’s target audience is individuals who use machine translation tools, particularly students, who are likely to encounter the issue in their academic work.

The chatbot prototype was built with Voiceflow. Stakeholders and users were involved throughout the process, and their feedback was used to improve the chatbot’s functionality.

The chatbot was designed to be user-friendly and engaging while informing users about gender bias.

SUBSTANTIATION

Gender bias is a pervasive problem in the translation world, especially when using translation machines. Translation machines rely on machine learning algorithms to generate translations, and these algorithms are often trained on large amounts of pre-existing translations. However, these translations can themselves be biased and perpetuate gender stereotypes, which can lead to gender bias in automatic translation (Pinnis & Šauperl, 2020).

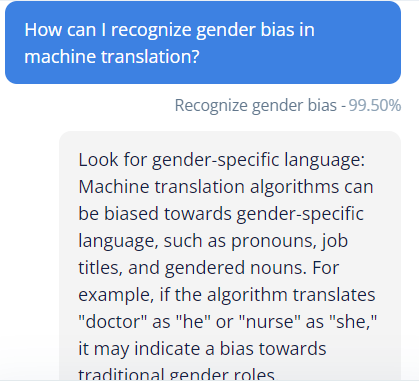

One of the most common forms of gender bias in machine translation is the use of gendered language. For example, some languages have gender-specific nouns, assigning a masculine or feminine gender to certain words. Translation machines can easily reinforce these gender-specific language norms by consistently translating words in a gender-specific way, even when the source text is gender-neutral.

Another form of gender bias in machine translation is the use of gender-specific pronouns. In some languages, pronouns are gender-specific, and translation machines may choose the wrong pronoun based on the perceived gender of the subject of the sentence. This can lead to confusion and even offense, especially when translating content related to gender identity or sexual orientation (Chunyu et al., 2002).

Translation machines can also perpetuate gender stereotypes by using gendered language to describe certain occupations or roles. For example, certain occupations in English are traditionally associated with men, such as “firefighter” or “policeman,” while others are associated with women, such as “nurse” or “teacher.” Translation machines may unintentionally reinforce these stereotypes by using gendered language when translating texts about these occupations (Prates et al., 2019).

In short, gender bias in machine translation is a problem that needs to be addressed. Translation companies and developers should ensure that their machine learning algorithms are trained for gender-neutral translation and adopt inclusive language policies. In addition, end users should be aware of the potential gender bias in machine translations and take steps to check the accuracy of these translations before they are used (Savoldi et al., 2021). Ultimately, it is up to everyone in the translation industry to work toward more inclusive and unbiased translations.
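One of the checks a user could take, spotting a gendered pronoun in the English translation of a source sentence known to be gender-neutral, might look roughly like the sketch below. The pronoun list and the function names are illustrative assumptions, not part of the chatbot prototype or of any translation tool, and the check is deliberately simple.

```python
import re

# Illustrative, non-exhaustive set of English gendered pronouns (an assumption).
GENDERED_PRONOUNS = {"he", "him", "his", "himself", "she", "her", "hers", "herself"}


def find_gendered_pronouns(translation: str) -> list[str]:
    """Return the gendered pronouns in an English text, in order of appearance."""
    words = re.findall(r"[a-z']+", translation.lower())
    return [word for word in words if word in GENDERED_PRONOUNS]


def needs_review(source_is_gender_neutral: bool, translation: str) -> bool:
    """Flag a translation when a gender-neutral source gained a gendered pronoun."""
    return source_is_gender_neutral and bool(find_gendered_pronouns(translation))
```

A flagged sentence is not necessarily wrong. The flag only tells the user that the translation introduced a gender the source did not state, so it deserves a second look.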

PROJECT

During my project, I initially planned to work on recommendation systems, but my interest was piqued when I attended the Chatbot workshop. I became fascinated by the potential of chatbots to educate people on gender bias in machine learning in an interactive manner. To determine the best chatbot program for my project, I experimented with four different platforms, namely Voiceflow, Crisp, chatbot, and Dialogflow. After creating a few basic chat flows on each of them, I ultimately chose Voiceflow due to its user-friendly interface, expansive capabilities, and appealing aesthetic.

The primary objective of my project is to create an engaging chatbot that educates users about gender bias in machine translation. My target audience is students, who frequently use machine translation throughout their academic career but may not be aware of the potential for gender bias. I believe that students are open-minded and eager to learn about this important topic.

ITERATIONS

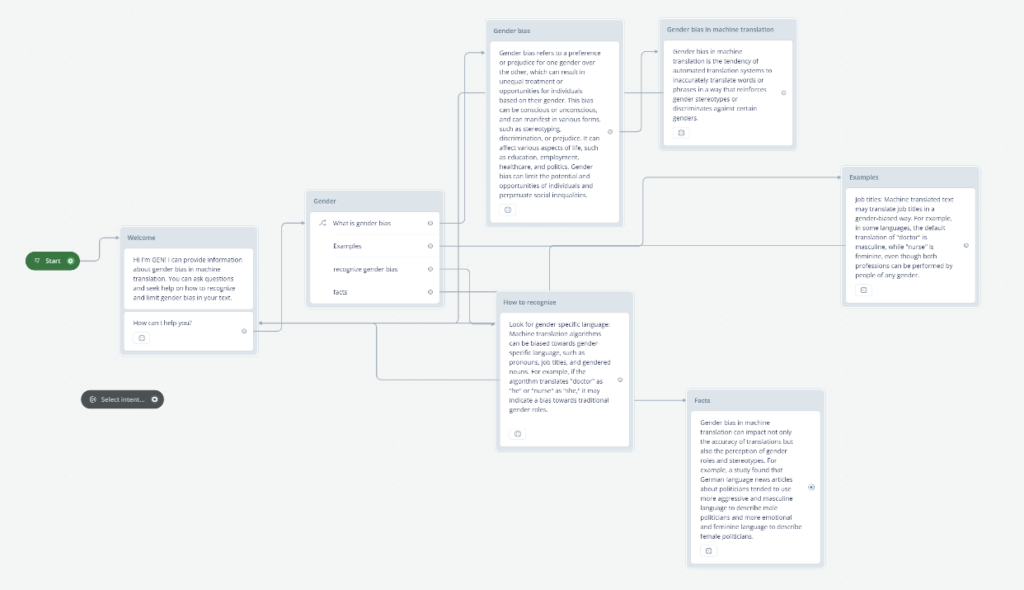

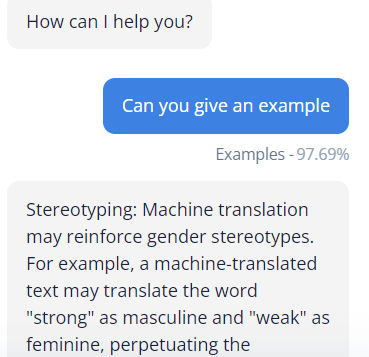

First iteration – Story line and first flow

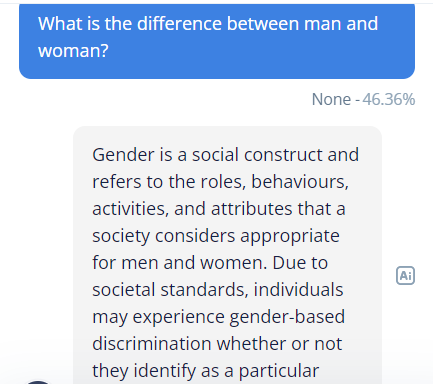

The conversation flow for the prototype was first visualized in a flowchart. After this visualization, multiple chatbots were tested, and the first flow was created in Voiceflow. AI was enabled during the first iteration: when the chatbot didn’t have a perfect match, it generated a response using AI.
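The match-or-fallback behaviour described here can be illustrated with a small sketch. It is not Voiceflow's implementation or API. The scripted answer, the `normalize` helper, and the `fallback` callable that stands in for the AI-generated response are all hypothetical.

```python
from typing import Callable

# Hypothetical scripted answer; the prototype's real content is not shown here.
SCRIPTED_ANSWERS = {
    "what is gender bias": (
        "Machine translation can turn a gender-neutral sentence into a gendered one."
    ),
}


def normalize(text: str) -> str:
    """Lowercase the text, drop punctuation, and collapse whitespace."""
    cleaned = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(cleaned.split())


def reply(user_input: str, fallback: Callable[[str], str]) -> str:
    """Return the scripted answer on an exact match, otherwise defer to the fallback."""
    key = normalize(user_input)
    if key in SCRIPTED_ANSWERS:
        return SCRIPTED_ANSWERS[key]
    # No exact match: hand the original question to the fallback (the AI stand-in).
    return fallback(user_input)
```

Because every unmatched input goes to the fallback, a design like this will also answer questions that have nothing to do with the intended topic unless the fallback itself is restricted.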

Insights

- AI gave most of the answers, and therefore also answered and reacted to questions that were outside the scope.

- Testers missed a fun element or something that would make the chatbot more engaging.

- Testers were not aware of the problem of gender bias in machine translation. They liked that the chatbot gave them new insights.

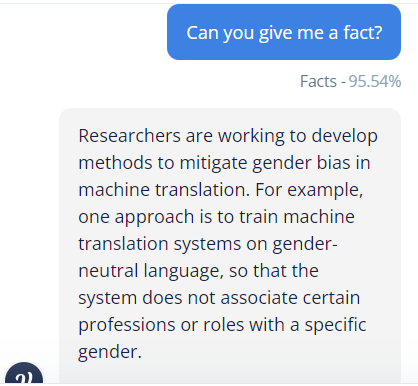

Second iteration – Story flow improvements

During the first iteration, AI answered most of the questions. The focus of the second iteration was on improving the answers and, above all, on recognizing the users’ input. After adding more input and training the chatbot, I observed that all answers were given at once: if the user asked for tips, it gave 8 tips at the same time. After some tweaking, it gives one answer, and when the user asks again or asks for another tip, it gives a new one. In this way, the user is not overloaded with information and gets short answers.
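The one-tip-per-request behaviour can be sketched in a few lines. This is only an illustration of the idea, not how the flow was built in Voiceflow. The `TipDispenser` class and the placeholder tips are hypothetical, and starting over after the last tip is an assumption.

```python
from itertools import cycle


class TipDispenser:
    """Hands out one tip per request and starts over after the last one."""

    def __init__(self, tips: list[str]) -> None:
        if not tips:
            raise ValueError("at least one tip is required")
        self._tips = cycle(tips)

    def next_tip(self) -> str:
        """Return the next tip in order, wrapping around at the end of the list."""
        return next(self._tips)


# Placeholder tips, not the prototype's actual wording.
dispenser = TipDispenser([
    "Check the pronouns in the translated text.",
    "Compare the translation with the source sentence before using it.",
])
first_tip = dispenser.next_tip()   # the user asks for a tip
second_tip = dispenser.next_tip()  # the user asks for another tip
```

Keeping the position in the list between requests is what lets the chatbot answer "another tip" with something new instead of repeating itself.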

Insights

- Voiceflow is really sensitive: if the input is not a 100% match, it doesn’t recognize the question.

- AI was turned off to keep better track of the conversation.

- When given one answer at a time, users are not overloaded with information.

- Users are missing a fun element, something like a quiz.

Third iteration – Interaction improvements

During the third and last iteration, the focus was on interaction, in particular on making it possible for users to ask questions.

Insights

- Voiceflow really gives a lot of possibilities and recognizes a lot of questions.

- AI can give helpful information when the matching score is low.

- When the same question is asked it can give different answers.

- Users can test if they recognize gender bias with some small examples.

- Testers liked the answers, and thought the chatbot was a good addition to a machine translator.

- Testers liked the chatbot’s balance between engagement and formality.

CONCLUSION

This chatbot was developed with Voiceflow to raise awareness among machine translation users and to inform them about gender bias. The survey results suggest that a chatbot can help create awareness among users: almost all of the participants were not aware of gender bias in machine translation before participating in this research. In most cases, they found the chatbot engaging, informative, and helpful. There is still room to improve the prototype. One improvement that would be really beneficial is offering answer options in the form of buttons, but Voiceflow doesn’t have this option at the moment. With buttons, the chatbot would understand users more easily and have better interactions, the interface would be less confusing, and the conversation would be easier. A second improvement would be a feedback option, so that users can give feedback on answers and also suggest ideas for extra information.

LITERATURE

- Chunyu, K., Haihua, P., & Webster, J. J. (2002). Example-based machine translation: A new paradigm.

- Prates, M. O. R., Avelar, P. H., & Lamb, L. C. (2019). Assessing gender bias in machine translation: A case study with Google Translate. Neural Computing and Applications. https://doi.org/10.1007/s00521-019-04144-6

- Pinnis, M., & Šauperl, A. (2020). Translating gender: Machine translation and gender-neutral language. Journal of Language and Sexuality. 147-174. https://doi.org/10.1075/jls.20007

- Savoldi, B., Gaido, M., Bentivogli, L., Negri, M., & Turchi, M. (2021). Gender Bias in Machine Translation. Transactions of the Association for Computational Linguistics, 9, 845-874. https://doi.org/10.1162/tacl_a_00401
