Premise

Creating a responsive chatbot against loneliness among students.

Synopsis

The lockdown has continued to isolate students from their old social life. The mental health of many has declined significantly compared to pre-pandemic times. When we are unable to meet people, what do we do?

With that as the leading question, a chatbot was created to fight loneliness among young people. What started out as a narrow chatbot with clickable elements turned into a responsive chatbot able to interpret user utterances. Future recommendations include Azure-based sentiment analysis and further training on user utterances.

Chatbots without enough training data are rather narrow. It is therefore essential to keep training the chatbot on the utterances that users generate. Additionally, if the chatbot can interpret the user's sentiment, it can respond accordingly, which would drastically increase its responsiveness.

The Problem

In the current pandemic, worries about the mental wellbeing of young adults are an almost daily topic. However, nothing seems to be done about their isolation. Social anxiety is part of the problem for people during the pandemic (Panchal et al., 2020).

The consequences of a lack of social life are felt especially among students (Krendl, 2021; Lippke, Fischer & Ratz, 2021; Padmanabhanunni & Pretorius, 2021). As a student myself, I am feeling the consequences as well: with no social outlet other than my housemates, my social life has come to a standstill.

The Approach

The initial idea for the prototype was a chatbot created with IBM Watson. The user would be able to have natural conversations with the chatbot in order to feel less lonely. Rather than a conventional chatbot, it would act as a listening ear for socially isolated individuals.

The first conversation flow was meant to divide the conversation into three distinct emotional states: bad, neutral and positive. The chatbot would then discuss the reason for that emotional state with the individual. However, it quickly became apparent that IBM Watson did not fit the goal of the chatbot.

It was then decided to build the chatbot with the Bot Framework Composer. Composer offers more options and includes pre-trained models, which is convenient for this chatbot. Additionally, Composer allows for an open conversation design.

Later in the process, both Azure Cognitive Services and TensorFlow were researched as ways to add sentiment analysis to the chatbot and thus improve its ability to interpret user utterances.

1st Iteration User Testing

General feedback was that the chatbot did not feel like a “chatbot against loneliness” but rather like a social hub. Testers also noted that the conversation felt static, since the options had to be clicked rather than typed. The primary goal of the second iteration is therefore to create a less restrictive chatbot: one that can understand the user and where the user can actually type messages instead of clicking static options.

The Design Process: Second Iteration

The second iteration uses the Bot Framework Composer, a visual development environment by Microsoft that lets developers create intricate bots for various goals. It is important to write down exactly what we want to accomplish and what the bot should be able to do. Some rough guidelines for the design process:

- What utterances are common in human conversations?

- How do we fight loneliness? Should we create a bot that feels like it is listening to the person? Or should the bot talk in a more proactive manner, maybe even giving suggestions to help the person battle their own loneliness?

Two main concepts should be identified straight away: intent and utterance. The intent is the intention a user has when saying or typing something. The utterance is the piece of text or speech that the user writes or says. The bot extracts the intent from the utterance by analysing the text and searching for certain trigger words. The intents drive the conversation forward, as they tell the bot what the user is attempting to achieve.
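The trigger-word idea can be illustrated with a deliberately simplified Python sketch. The intent names and trigger words are hypothetical, and LUIS itself relies on trained models rather than a fixed keyword list, so this only shows the concept of mapping an utterance to an intent. The fallback name "None" mirrors the None intent that LUIS uses for unmatched utterances.

```python
import re

# Hypothetical intents and trigger words, for illustration only.
INTENT_TRIGGERS = {
    "Greeting": {"hi", "hello", "hey"},
    "TellName": {"name", "called"},
    "FeelingLonely": {"lonely", "alone", "isolated"},
}


def recognize_intent(utterance: str) -> str:
    """Return the intent with the most matching trigger words, or "None"."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    best_intent, best_score = "None", 0
    for intent, triggers in INTENT_TRIGGERS.items():
        score = len(words & triggers)
        # Ties keep the intent that was listed first.
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent
```

For example, "I feel so lonely today" maps to `FeelingLonely`, while an utterance without any trigger word falls through to `None`. That fallthrough is exactly where keyword matching stops being enough.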

The primary goal for the second iteration in Composer was to ask the user for their name and let the bot greet them by name. Getting this to work was quite difficult because of limitations in Composer.

When the bot asked “What is your name?”, the expected utterances used as training data were along the lines of “My name is X” or “I am X”. Composer works with LUIS, a language understanding service. The convenient thing about LUIS is that it is trained on a larger scale and can therefore work with a small number of expected utterances. However, extracting the user's name was difficult: a response such as “My name is X” led the bot to conclude that the user's name was “My Name Is X” instead of just “X”. Documentation on Composer's capabilities is rather limited, but after much trial and error the bot is now able to extract the name from an utterance.
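The difference between returning the whole utterance and returning only the name can be shown with a small regular-expression sketch in Python. This is not how LUIS or Composer extract entities (they use trained entity models). The patterns are hypothetical and only cover the phrasings mentioned above, plus a one-word answer.

```python
import re
from typing import Optional

NAME = r"([A-Za-z][A-Za-z'-]*)"
# Hypothetical phrasings; the capture group holds only the name itself.
NAME_PATTERNS = [
    re.compile(r"\bmy name is\s+" + NAME, re.IGNORECASE),
    re.compile(r"\bcall me\s+" + NAME, re.IGNORECASE),
    re.compile(r"\bi'm\s+" + NAME, re.IGNORECASE),
    re.compile(r"\bi am\s+" + NAME, re.IGNORECASE),
]


def extract_name(utterance: str) -> Optional[str]:
    """Return only the name from utterances like "My name is Sam", else None."""
    text = utterance.strip().rstrip(".!?")
    for pattern in NAME_PATTERNS:
        match = pattern.search(text)
        if match:
            return match.group(1)
    # A one-word answer such as "Sam" is taken as the name itself.
    if re.fullmatch(NAME, text):
        return text
    return None
```

Fixed patterns break easily. "I am fine" would return "fine" as a name, which is why a trained entity model is generally preferable to hand-written rules.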

Another hiccup was the age. Similar problems arose as with the name; however, the solution that worked for extracting the name did not work for extracting the user's age.
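One reason the name approach does not carry over is that “I am X” can be followed by a name, a number or a mood. The Python sketch below is a hypothetical, rule-based way to pull out an age. It only recognises ages written as digits, its plausibility bounds are arbitrary assumptions, and it is not a solution used in this project.

```python
import re
from typing import Optional

# Hypothetical phrasings; only ages written as digits are recognised.
AGE_PATTERNS = [
    re.compile(r"\b(\d{1,3})\s*(?:years?|yrs?)\b", re.IGNORECASE),
    re.compile(r"\b(?:i am|i'm|my age is)\s+(\d{1,3})\b", re.IGNORECASE),
]


def extract_age(utterance: str, min_age: int = 10, max_age: int = 120) -> Optional[int]:
    """Return a plausible age from the utterance, or None."""
    text = utterance.strip().rstrip(".!?")
    digits = None
    for pattern in AGE_PATTERNS:
        match = pattern.search(text)
        if match:
            digits = match.group(1)
            break
    # A bare number such as "21" answers "How old are you?" directly.
    if digits is None and re.fullmatch(r"\d{1,3}", text):
        digits = text
    if digits is None:
        return None
    age = int(digits)
    # Reject implausible values; the bounds are arbitrary assumptions.
    return age if min_age <= age <= max_age else None
```

Because this sketch requires digits, “I am 21” yields an age and no name, while “I am Alex” yields a name and no age. Written-out ages such as “twenty-one” would still need extra handling.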

[Image: chatbot demo (https://cmdstudio.nl/mddd/wp-content/uploads/sites/58/2021/06/ChatBotGif.gif)]

2nd Iteration User Testing

The user testing of the second iteration was more positive than that of the first iteration. The users enjoyed the way in which they communicated with the chatbot. Chatting with it by typing also felt more familiar than the clickable elements of the first iteration.

Both rounds of user testing asked for a more adaptive and responsive chatbot. The final iteration is therefore an attempt at integrating sentiment analysis into the chatbot.

[Image: user test (https://cmdstudio.nl/mddd/wp-content/uploads/sites/58/2021/06/JoesTest.jpeg)]

Final Iteration

The final iteration of the prototype aimed to improve the responsiveness of the chatbot. For this reason, both TensorFlow and Azure Cognitive Services were researched. TensorFlow is an open-source machine learning library rather than a cloud service, but both can be used to perform sentiment analysis.

However, Azure Cognitive Services proved to be the most beneficial for the prototype. The TensorFlow approach could only interpret the sentiment of a given paragraph as a whole, and the model would need training data supplied by its creator. As this research was not broad in scale, it was more efficient to use a service with pre-trained models.

Azure Cognitive Services offers sentiment analysis through an API call. The API is rather sophisticated, as it also provides opinion mining: the model can identify what an opinion is about. For example, from an utterance such as “The pizza was great.”, Azure extracts that the user's opinion concerns the “pizza” and that the opinion was “great”, which returns a positive sentiment.
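The API call can be sketched in Python with only the standard library. This sketch assumes the Text Analytics REST API v3.1 sentiment endpoint with the `opinionMining=true` query parameter and its response shape, in which each sentence carries `targets` (such as “pizza”) and `assessments` (such as “great”) linked through `relations`. The endpoint and key are placeholders for your own Azure resource. The path and field names should be checked against the current Azure documentation before relying on them.

```python
from __future__ import annotations

import json
import urllib.request

# Assumed API version and path (Text Analytics REST API v3.1); verify in the docs.
SENTIMENT_PATH = "/text/analytics/v3.1/sentiment?opinionMining=true"


def build_sentiment_request(endpoint: str, key: str, texts: list[str]) -> urllib.request.Request:
    """Build (but do not send) a sentiment analysis request with opinion mining."""
    documents = [
        {"id": str(i), "language": "en", "text": text}
        for i, text in enumerate(texts, start=1)
    ]
    return urllib.request.Request(
        endpoint.rstrip("/") + SENTIMENT_PATH,
        data=json.dumps({"documents": documents}).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )


def analyze_sentiment(endpoint: str, key: str, texts: list[str]) -> dict:
    """Send the request and return the parsed JSON response."""
    request = build_sentiment_request(endpoint, key, texts)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def extract_opinions(response: dict) -> list[tuple[str, str, str]]:
    """Return (target, assessment, sentiment) triples from the response."""
    opinions = []
    for document in response.get("documents", []):
        for sentence in document.get("sentences", []):
            assessments = sentence.get("assessments", [])
            for target in sentence.get("targets", []):
                for relation in target.get("relations", []):
                    if relation.get("relationType") != "assessment":
                        continue
                    # "ref" is a pointer such as "#/documents/0/sentences/0/assessments/0";
                    # its last segment indexes this sentence's assessments.
                    index = int(relation["ref"].rsplit("/", 1)[-1])
                    assessment = assessments[index]
                    opinions.append((target["text"], assessment["text"], assessment["sentiment"]))
    return opinions
```

For the pizza example, calling `extract_opinions(analyze_sentiment(endpoint, key, ["The pizza was great."]))` should yield a triple along the lines of `("pizza", "great", "positive")`, provided the service identifies the opinion as described above. Building the request separately from sending it keeps the parsing logic testable without network access.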

[Image: sentiment analysis demo (https://cmdstudio.nl/mddd/wp-content/uploads/sites/58/2021/06/SentimentGif.gif)]

Reflection

The project was fun to create. IBM Watson was easy to use and understand, but its free version was rather restrictive. Luckily, the Bot Framework Composer offered many more possibilities.

Additionally, it was difficult to find solutions to certain problems. Websites such as Stack Overflow are normally of great value to developers, but no comparable resource exists for Composer. This was frustrating, and sadly a solution for extracting the user's age was never found.

Another problem was connecting the Azure Cognitive Services sentiment analysis API to Composer. The API call needed a Python environment to properly send the request and receive the response, whereas Composer only supports simple API calls and lacks the flexibility for such a call. Rasa would also be an interesting point of research, although it needs a lot more training data than Azure.
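One possible workaround, not tried in this project, is a small relay service. Composer would send a simple HTTP request containing only the user's text, and the relay would perform the more involved sentiment call and return a single label. The sketch uses Python's built-in `http.server`. The `{"text": ...}` request format, the `{"sentiment": ...}` reply and the `analyze` function passed in are all hypothetical. In practice, `analyze` would wrap the Azure call.

```python
import json
from http.server import BaseHTTPRequestHandler
from typing import Callable, Tuple

# Hypothetical analyzer type: maps text to a label such as "positive".
Analyzer = Callable[[str], str]


def handle_payload(raw: bytes, analyze: Analyzer) -> Tuple[int, dict]:
    """Turn a raw request body into an HTTP status code and a JSON-ready reply."""
    try:
        payload = json.loads(raw or b"{}")
        text = str(payload["text"])
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with a 'text' field"}
    return 200, {"sentiment": analyze(text)}


def make_handler(analyze: Analyzer) -> type:
    """Create a request handler class that relays POSTed text to `analyze`."""

    class RelayHandler(BaseHTTPRequestHandler):
        def do_POST(self) -> None:
            length = int(self.headers.get("Content-Length", 0))
            status, reply = handle_payload(self.rfile.read(length), analyze)
            body = json.dumps(reply).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return RelayHandler
```

The relay could be started with `ThreadingHTTPServer(("localhost", 8080), make_handler(my_analyzer)).serve_forever()`, where `my_analyzer` and the port are placeholders. Keeping the payload handling in a plain function means it can be tested without starting a server.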

Lastly, more research is needed on extracting sentiment from the chat logs. The Azure API splits paragraphs into sentences at the “.” mark. This raises the question of which part of a paragraph the bot should respond to. Is a positive sentiment more important than a negative one?
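The question of which sentence to respond to can be made concrete with a small policy function. The sketch assumes that sentence-level results from the sentiment API have already been reduced to (text, sentiment, confidence) tuples. The rule it implements is one hypothetical answer to the questions above, not a finding of this project. It responds to the most confident negative sentence first, then to the most confident positive one, and otherwise to the last sentence.

```python
from typing import NamedTuple, Optional, Sequence


class SentenceSentiment(NamedTuple):
    text: str
    sentiment: str      # "positive", "neutral" or "negative"
    confidence: float   # confidence score for that label, between 0 and 1


# Hypothetical priority: negative feelings are addressed before positive ones.
PRIORITY = ("negative", "positive")


def pick_sentence(sentences: Sequence[SentenceSentiment]) -> Optional[SentenceSentiment]:
    """Choose the sentence the bot should respond to, or None if there are none."""
    for label in PRIORITY:
        matching = [s for s in sentences if s.sentiment == label]
        if matching:
            return max(matching, key=lambda s: s.confidence)
    # No clear sentiment: respond to the most recent thing the user said.
    return sentences[-1] if sentences else None
```

Swapping the order in `PRIORITY` is enough to test the opposite assumption with users.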

Recommendation/Conclusion

A recommendation for future iterations is to integrate Azure sentiment analysis if possible. This would make the chatbot more adaptive and able to both ask and interpret open-ended questions. While the chatbot can acknowledge the user after asking for their name, it cannot yet ask how the user's day was. When fighting loneliness, it is important that a chatbot can ask “how was your day?”. Additionally, through sentiment analysis and perhaps even opinion mining, the chatbot would be able to adapt to a user's mood and ask questions accordingly.
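Adapting the follow-up question to the user's mood could, in its simplest form, be a lookup from the sentiment label to a reply. The labels match those returned by Azure sentiment analysis. The replies and the confidence threshold are hypothetical examples.

```python
# Hypothetical follow-up questions per sentiment label.
FOLLOW_UPS = {
    "negative": "I'm sorry to hear that. Do you want to tell me what happened?",
    "neutral": "How was your day?",
    "positive": "That sounds great! What made your day so good?",
}


def follow_up(sentiment: str, confidence: float, threshold: float = 0.6) -> str:
    """Pick a follow-up question, falling back to the neutral one when unsure."""
    if confidence < threshold or sentiment not in FOLLOW_UPS:
        return FOLLOW_UPS["neutral"]
    return FOLLOW_UPS[sentiment]
```

Falling back to the neutral “How was your day?” when the model is unsure avoids reacting strongly to a misread mood.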

Furthermore, the chat logs of future iterations should be used to extract user utterances that the model does not yet recognise. For example, if people often ask the chatbot “how was your day?”, the designer should add such an intent to the conversation flow. This would improve the adaptiveness of the chatbot.
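Mining the chat logs for unrecognised utterances could start with a simple frequency count. The sketch assumes a hypothetical log format with one JSON object per line and `text`, `intent` and `score` fields. Real Composer or Azure transcripts are structured differently, so the parsing would need adapting. Utterances that fell through to the `None` intent, or that scored below a threshold, are counted so that the most frequent ones can become candidate training examples.

```python
import json
from collections import Counter
from typing import Iterable, List, Tuple


def unrecognised_utterances(
    log_lines: Iterable[str], min_score: float = 0.5, top: int = 10
) -> List[Tuple[str, int]]:
    """Count utterances with no confident intent, most frequent first."""
    counts: Counter = Counter()
    for line in log_lines:
        if not line.strip():
            continue
        entry = json.loads(line)
        intent = entry.get("intent", "None")
        score = float(entry.get("score", 0.0))
        if intent == "None" or score < min_score:
            # Normalise lightly so "How was your day?" and "how was your day" match.
            key = entry["text"].strip().lower().rstrip("?!.")
            counts[key] += 1
    return counts.most_common(top)
```

A frequently occurring entry such as “how was your day” would then be a strong candidate for a new intent.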

References

Hudson, J., Ungar, R., Albright, L., Tkatch, R., Schaeffer, J., & Wicker, E. R. (2020). Robotic Pet Use Among Community-Dwelling Older Adults. The Journals of Gerontology: Series B, 75(9), 2018-2028.

Krendl, A. C. (2021). Changes in stress predict worse mental health outcomes for college students than does loneliness; evidence from the COVID-19 pandemic. Journal of American College Health, 1-4.

Lippke, S., Fischer, M. A., & Ratz, T. (2021). Physical activity, loneliness, and meaning of friendship in young individuals–a mixed-methods investigation prior to and during the COVID-19 pandemic with three cross-sectional studies. Frontiers in Psychology, 12, 146.

Padmanabhanunni, A., & Pretorius, T. B. (2021). The unbearable loneliness of COVID-19: COVID-19-related correlates of loneliness in South Africa in young adults. Psychiatry Research, 296, 113658.

Panchal, N., Kamal, R., Orgera, K., Cox, C., Garfield, R., Hamel, L., & Chidambaram, P. (2020). The implications of COVID-19 for mental health and substance use. Kaiser Family Foundation.

Vasileiou, K., Barnett, J., Barreto, M., Vines, J., Atkinson, M., Long, K., … & Wilson, M. (2019). Coping with loneliness at University: A qualitative interview study with students in the UK. Mental Health & Prevention, 13, 21-30.

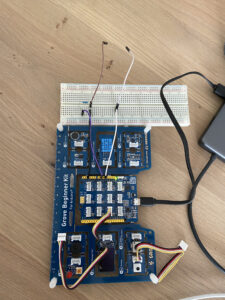

This course started with a few Arduino workshops which enthused me to try to work with Arduino. At first, I did some research into Arduino compatible sensors. I inspired my research on the