Premise

Exploring techniques that can ease calorie tracking and optimize the quality of nutritional data.

Synopsis

During earlier courses I already researched dietary applications and their pros and cons. The three most common ways to collect data are manual input, data collection by sensors and data collection by cameras. Manual input is a barrier that keeps users from maintaining their data collection; automated technologies such as smartwatches and barcode scanning can help users with this task. In this research I want to discover which technologies are already in use and which have the potential to take dietary data collection to the next level.

Background

Worldwide obesity has nearly tripled since 1975. In 2016, 1.9 billion adults and 340 million children and adolescents were overweight (World Health Organization, 2020). Obesity is a serious problem: it is associated with mental health problems and reduced quality of life, and with diseases including diabetes, heart disease, stroke and several types of cancer (Adult Obesity, 2020). Behavior usually plays an important role in obesity (Jebb, 2004). Treatment for obesity is therefore often focused on food intake and physical activity. Maintaining a behavioral change is often difficult, yet adherence to a diet is crucial for maintaining weight loss. New technologies that help users adhere to a diet can make a real difference (Thomas et al., 2014). There are applications that help users with calorie tracking, but users have to enter the data themselves, which is a barrier to keeping up the tracking. Therefore, I want to discover technologies that can help with calorie tracking.

Experiments

Arduino

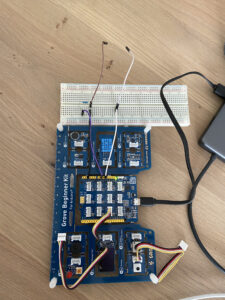

This course started with a few Arduino workshops, which made me enthusiastic about working with Arduino. I started by researching Arduino-compatible sensors. My research was inspired by the HEALBE GoBe3 device, which I had already studied during another course. This device uses a bioelectrical impedance sensor to measure calorie intake. Bioelectrical impedance sensors turned out to be quite expensive, so I had to think of another way to measure calorie intake with Arduino. I found a project, based on a game by Sures Kumar, that identifies foods by their unique resistance value. I managed to make it work and measured resistance values that I could link to different fruits. However, the results were not very promising. First, the resistance values of the fruits varied from time to time due to differences in temperature and ripeness. Second, the values of different fruits overlapped, which means that the system could not reliably detect the right fruit. This method is therefore not suitable for precise food detection, so I switched to a method I had already experimented with.

[aesop_video src=”self” hosted=”https://cmdstudio.nl/mddd/wp-content/uploads/sites/58/2021/06/Schermopname-2021-06-13-om-12.20.45.mp4″ width=”content” align=”center” disable_for_mobile=”on” loop=”on” controls=”on” mute=”off” autoplay=”off” viewstart=”off” viewend=”off” show_subtitles=”off” revealfx=”off” overlay_revealfx=”off”]
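The resistance approach described above can be sketched in Python. This is a minimal illustration and not the code from the original project: it assumes a voltage divider with a known reference resistor that is read by a 10-bit analog input, and the resistance ranges per fruit are made-up placeholder values, chosen only to show how overlapping ranges make a reading ambiguous.

```python
# Hypothetical sketch: identify a fruit from a voltage-divider reading.
# The reference resistor and the ranges below are illustrative assumptions,
# not measurements from the experiment.

R_REF_OHMS = 10_000  # assumed known resistor in the divider
ADC_MAX = 1023       # full-scale value of a 10-bit analog input

# Placeholder resistance ranges in ohms (low, high) per fruit.
FRUIT_RANGES = {
    "apple": (40_000, 70_000),
    "banana": (60_000, 90_000),  # overlaps with apple on purpose
    "lemon": (10_000, 30_000),
}


def resistance_from_adc(adc_value):
    """Unknown resistance, assuming the input measures the voltage across R_REF."""
    if not 0 < adc_value <= ADC_MAX:
        raise ValueError("ADC value must be between 1 and 1023")
    # adc / ADC_MAX = R_REF / (R_REF + R_x), solved for R_x
    return R_REF_OHMS * (ADC_MAX / adc_value - 1)


def candidate_fruits(resistance):
    """All fruits whose placeholder range contains the measured resistance."""
    return sorted(
        name
        for name, (low, high) in FRUIT_RANGES.items()
        if low <= resistance <= high
    )
```

With these placeholder ranges, a reading of 65,000 ohms matches both apple and banana, which is the kind of overlap that made the method unreliable in practice.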

IBM Watson

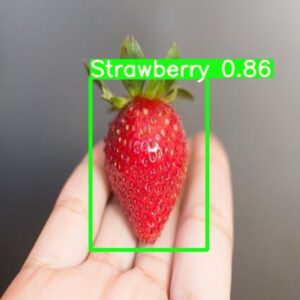

I had already experimented with IBM Watson in the past, so I knew that image recognition can be used to recognize foods. During my experiment with IBM Watson, I managed to use the IBM food model. This model could detect images of fruit very well, with a confidence score of 90 percent. The disadvantage of a pre-made model is that you cannot change it or complement it with your own data. Therefore, I tried to make my own model that could detect different apple types. The model was trained with 20 images of each apple type and 20 negative images of fruits that were not apples. After training, the model showed different confidence scores per type of apple. I checked the training images with the lowest confidence scores and noticed why they did not achieve the same score as the rest: the kinds of images that scored lowest were less well represented in the training data than the kinds that scored high. This made me realize how important it is to choose the training images carefully. I also realized the limitations of a system such as IBM Watson: the options for customizing the training and for free implementation were limited. The challenge was to dive into image recognition technologies that can be implemented in Python.
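The lesson about evenly represented training images can be checked before training. The sketch below is an illustration only: it assumes that every training image has been given a tag describing what kind of image it is, and the tag names in the usage note are hypothetical.

```python
from collections import Counter


def underrepresented(tags, tolerance=0.5):
    """Return the tags that occur less often than tolerance * the most common tag.

    `tags` holds one tag per training image; the tagging scheme itself
    is an assumption made for this example.
    """
    counts = Counter(tags)
    largest = max(counts.values())
    return sorted(name for name, count in counts.items() if count < tolerance * largest)
```

For example, with 16 images tagged `whole_apple` and 4 tagged `sliced_apple`, the function reports `sliced_apple` as underrepresented, which signals that more images of that kind are needed.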

OpenCV

The first technology that caught my attention was OpenCV, which provides computer vision libraries and tools for real-time computer vision. I completed a tutorial in which the computer could recognize a stop sign in a picture. This method used a single image and a pre-trained Haar classifier. While searching for a tutorial on making a custom Haar classifier, I found one on real-time object detection. With this, users would only have to open their camera to see the detections immediately. This caught my attention and challenged me to follow the tutorial, which taught me to set up a real-time object detection model. This was fairly easy; the model was trained on the COCO dataset, which is known as one of the best image datasets available. It has around 200,000 labeled images and 80 different categories. I was surprised by how easy it was to install this model and detect objects in real time. It could detect 80 different objects, including persons, cell phones, bananas and apples. Although this model worked really well, it was not suitable for my goal, because its categories include only a few fruits, such as bananas and apples. A solution to this problem was to create my own COCO-style dataset focused on fruits. Unfortunately, due to my limited programming knowledge and recurring errors, I could not make this work. Therefore, I looked for a tool that could help me make my own custom dataset.

[aesop_video src=”self” hosted=”https://cmdstudio.nl/mddd/wp-content/uploads/sites/58/2021/06/Schermopname-2021-06-13-om-12.23.56.mp4″ align=”center” disable_for_mobile=”on” loop=”on” controls=”on” mute=”off” autoplay=”off” viewstart=”off” viewend=”off” show_subtitles=”off” revealfx=”off” overlay_revealfx=”off”]
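For a dietary application, only the food-related detections of such a general model are useful. The following sketch shows how the output could be filtered. It is an assumption-based illustration: the detections are represented as simple `(label, confidence)` pairs, which is a hypothetical format and not the output structure of the tutorial's model, and the label set contains only the two fruits named above.

```python
# Fruit labels mentioned in the text; the detection format is hypothetical.
FRUIT_LABELS = {"apple", "banana"}


def fruit_detections(detections, min_confidence=0.5):
    """Keep only fruit detections at or above the confidence threshold."""
    return [
        (label, confidence)
        for label, confidence in detections
        if label in FRUIT_LABELS and confidence >= min_confidence
    ]
```

Given the detections `[("person", 0.92), ("banana", 0.81), ("apple", 0.42)]`, only the banana remains: the person is not a fruit and the apple falls below the threshold. Any fruit outside the label set is dropped in the same way, which illustrates why a general-purpose model was too limited for this goal.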

Roboflow and YOLOv5

First iteration

After some research I found a tutorial that showed me how to make a custom object detection model for multiple objects. It showed me how to use the YOLOv5 (You Only Look Once) environment to train the model and Roboflow to annotate the training images. Roboflow is a tool that makes it possible to annotate every image and export the annotations in the file format you need. In this case I exported my dataset in the YOLOv5 PyTorch format. The annotation process was a lot of work because I had to draw bounding boxes on every picture. At first, I used a dataset from Kaggle of 90,000 images covering 130 different fruits and vegetables. I selected 600 images of 6 different fruits. The images were already cut out but still had to be annotated. The results after training on these images were not very good: the model could not recognize the fruits very well. The images below show that the model created wrong bounding boxes and predictions.

[aesop_image img=”https://cmdstudio.nl/mddd/wp-content/uploads/sites/58/2021/06/wrong-results.jpeg” panorama=”on” align=”center” lightbox=”on” captionsrc=”custom” captionposition=”left” revealfx=”off” overlay_revealfx=”off”]
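To make the annotation step more concrete: a YOLO-style label file contains one line per bounding box, with a class index followed by the box center, width and height, all normalized to the image size. The sketch below converts a box drawn in pixels into such a line. It is a minimal illustration of the format, not the export code that Roboflow uses, and the function name is my own.

```python
def to_yolo_line(class_id, box, image_width, image_height):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    # The center and size are expressed as fractions of the image dimensions.
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
```

For a 400 by 500 pixel image with a box from (100, 50) to (300, 250) and class index 0, this gives the line `0 0.500000 0.300000 0.500000 0.400000`.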

Second iteration

Therefore, I created a new dataset from images that I collected myself. This time I used a mix of images of fruit with a blank background and images of fruit with a mixed background, and the training results were much better. I used YOLOv5 because this model is easier to implement than YOLOv4 and the results are similar (Nelson, 2021). The image below shows the scores for the different metrics. Most metrics show a curve that flattens at the top, which suggests there were enough training iterations. The mAP@0.5 (mean average precision at an intersection-over-union threshold of 0.5) was above 0.5, which is commonly seen as a success (Yohanandan, 2020). Although the training of my model was a success, I still could not manage to connect the model to a camera. This is a disappointment and a challenge for future projects.

[aesop_image img=”https://cmdstudio.nl/mddd/wp-content/uploads/sites/58/2021/06/Good-graph.png” panorama=”on” imgwidth=”content” align=”center” lightbox=”on” captionsrc=”custom” captionposition=”left” revealfx=”off” overlay_revealfx=”off”]

The images above show a wrong and a good prediction. Although the prediction for the lemon is wrong, it is interesting to see that it is recognized as a banana. This is understandable because some training images of bananas look similar.
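The 0.5 in the mAP score refers to the intersection over union (IoU) between a predicted bounding box and the annotated one: a prediction only counts as correct when the class is right and the boxes overlap with an IoU of at least 0.5. The sketch below shows this overlap check for a single pair of boxes. It is a simplified illustration and not the evaluation code of YOLOv5.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlapping region (zero if the boxes do not touch).
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


def boxes_match(predicted, annotated, threshold=0.5):
    """True when the overlap is large enough to count the prediction as a hit."""
    return iou(predicted, annotated) >= threshold
```

Two boxes of 10 by 10 pixels that are shifted by 5 pixels share half of their area but only reach an IoU of one third, so they would not count as a match at this threshold.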

Future Research

Image recognition is a technology with enormous potential for food recognition. There are many datasets and models available that are perfectly capable of recognizing fruits. Linked to a database of caloric values, such a model would be a very useful addition to dietary applications. The next step would be a model that can also recognize the size or portion of the food, which would improve the quality of dietary intake data.
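The link between a recognized fruit and its caloric value could look like the sketch below. It is only an illustration of the idea: the lookup table stands in for a real nutrition database, the values are approximate and included purely as examples, and the portion weight is assumed to come from a future portion-size model or from the user.

```python
# Approximate example values in kcal per 100 g; a real application would
# query a nutrition database instead of this hypothetical table.
KCAL_PER_100_G = {"apple": 52, "banana": 89}


def estimate_kcal(label, grams):
    """Estimated calories for a recognized food, or None if it is not in the table."""
    per_100_g = KCAL_PER_100_G.get(label)
    if per_100_g is None:
        return None
    return per_100_g * grams / 100
```

Returning `None` for an unknown label keeps the estimate honest: the application can then ask the user for input instead of logging a wrong value.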

Conclusion

My search for technologies that could support dietary apps was successful. I enjoyed working with Arduino and came across many amazing projects. However, I learned more during my dive into the different image recognition techniques. I discovered how many different models there are and how they work. My lack of programming knowledge was sometimes frustrating because I could not finish some of the projects I started. Nevertheless, the search for solutions and different methods gave me new insights that I otherwise would not have acquired. It would be nice if I could implement some of the techniques I learned during my graduation project.

References

Adult Obesity. (2020, September 17). Centers for Disease Control and Prevention. https://www.cdc.gov/obesity/adult/causes.html#:%7E:text=Obesity%20is%20serious%20because%20it,and%20some%20types%20of%20cancer.

Jebb, S. (2004). Obesity: causes and consequences. Women’s Health Medicine, 1(1), 38–41. https://doi.org/10.1383/wohm.1.1.38.55418

Nelson, J. (2021, March 4). Responding to the Controversy about YOLOv5. Roboflow Blog. https://blog.roboflow.com/yolov4-versus-yolov5/

Thomas, D. M., Martin, C. K., Redman, L. M., Heymsfield, S. B., Lettieri, S., Levine, J. A., Bouchard, C., & Schoeller, D. A. (2014). Effect of dietary adherence on the body weight plateau: a mathematical model incorporating intermittent compliance with energy intake prescription. The American Journal of Clinical Nutrition, 100(3), 787–795. https://doi.org/10.3945/ajcn.113.079822

World Health Organization. (2020, April 1). Obesity and overweight. https://www.who.int/news-room/fact-sheets/detail/obesity-and-overweight

Yohanandan, S. (2020, June 24). mAP (mean Average Precision) might confuse you! – Towards Data Science. Medium. https://towardsdatascience.com/map-mean-average-precision-might-confuse-you-5956f1bfa9e2