“The human face – in repose and in movement, at the moment of death as in life, in silence and in speech, in actuality or as represented in art or recorded by a camera – is a commanding, complicated and at times confusing source of information.” (P. Ekman & W. Friesen, 1972)

PREMISE

Detecting human emotions with deep learning techniques and applying the results to music therapy.

SYNOPSIS

Using technology to solve real-world problems and make people’s lives easier has always interested me, especially where computer science and IT intersect with the social fields and art. Yet my previous studies and jobs were purely technical and very stressful: I personally ran into many problems with stress and emotional control while working on oil and gas projects. Music is the form of art I turned to in order to release stress and recharge. That is when I started experimenting with new technologies and combining them with music therapy. In the process, I aimed to learn new technical concepts, draft iterations of my prototype, and test them on myself and other individuals.

PREFACE

People from the Mediterranean are famous for being overly expressive of their emotions. Lebanon, where I come from, is a small, beautiful country on that sea, but it is plagued with economic problems, and those problems amplify the inability of individuals to control their emotions. Growing up there, I was no exception to that rule, as the economic crisis affected my upbringing on an emotional level. The problem did not stop with my teenage and undergraduate years; it accompanied me into my professional life. During that time, music was the only way for me to control those emotions and find relief and calm.

Ekman (2004) identified the six basic human emotions as anger, fear, disgust, surprise, sadness and enjoyment. They are easily recognizable because they manifest in facial expressions. Emotions can also affect our physical, mental and social well-being. Anger, for example, is an emotion that varies in intensity and duration but is often recognized as a precipitant of legal, familial and health problems (Del Vecchio & O’Leary, 2004). Happiness, on the other hand, has quite the opposite effect. A study by Lyubomirsky et al. (2005) showed that happiness is strongly associated with positive behavioral outcomes; their findings suggest that the well-being of individuals is highly correlated with happy emotions. Music is a form of art that affects emotional experience (Juslin, 2019). It has the ability to evoke powerful positive emotional responses that alter mood and relieve stress (Heshmat, 2019). A study of children by Choi et al. (2010) showed that music therapy can reduce aggressive behavior and improve self-esteem.

My aim in this project is to create a prototype that plays music based on users’ emotional responses. I plan to do so without any physical device that monitors heart rate or other biological measurements, relying instead on computer vision and deep learning.

PROTOTYPING:

PREPARATIONS:

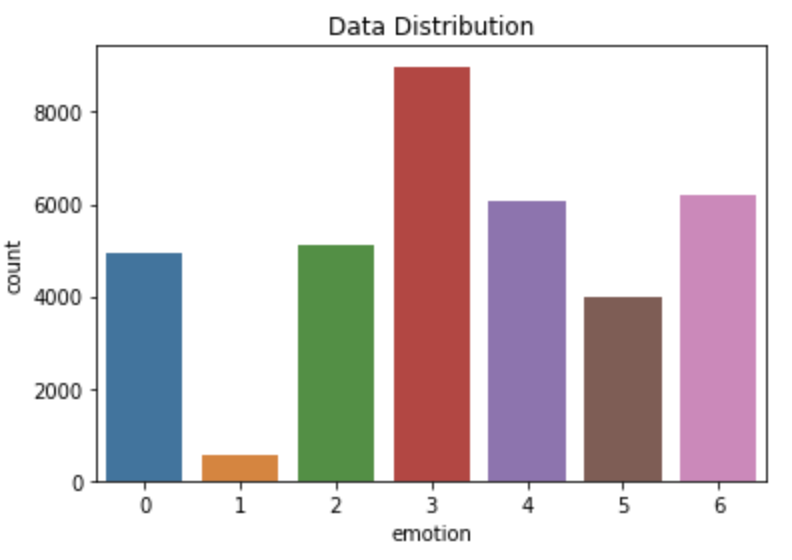

The first step toward a workable prototype was to learn how the underlying technology works. Taking advantage of my technical background and my fascination with technology, I dove deeper into deep learning. Deep learning is a subset of machine learning that attempts to extract high-level abstractions from data through hierarchical architectures (Guo et al., 2016). Deep learning methods have been shown to outperform previous state-of-the-art techniques in several fields, most notably image recognition and computer vision (Deng, 2014; Voulodimos et al., 2018). Since the topic was completely new to me, the learning process combined courses on DataCamp with two reference books: Chollet (2017) and Di et al. (2018).

After a week of tutorials and experiments with various datasets, I felt ready to start working on my actual goal, so I went on to collect image datasets from multiple sources. In my initial search, I encountered many databases that require pre-approved access to the images, but I found one that was posted on Kaggle in 2013. The dataset contains 35,887 images of people’s faces showing different emotions, each in a 48×48 pixel format. The images are categorized into seven emotions: anger, disgust, fear, happiness, neutral, sadness and surprise. Examining the distribution of the image set, I immediately noticed an imbalance between categories: some emotion classes have more images than others. Based on my reading, this imbalance might affect the outcome of my model. After this examination, I split the dataset programmatically in Python into a training set (90%) and a validation set (10%); the former is used to train the model and the latter to evaluate its performance.

Also, I explored the images in the datasets.
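As a rough illustration of the class-distribution check and the 90/10 split described above, here is a minimal sketch using only the Python standard library. It is not the code used in the project: the label list is a made-up stand-in for the real dataset, the function name `stratified_split` is hypothetical, and splitting each class separately (a stratified split) is an assumption, chosen so that the rare classes keep the same share in both sets.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, val_fraction=0.1, seed=42):
    """Return (train_indices, val_indices), splitting each class separately."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_val = max(1, round(len(indices) * val_fraction))
        val.extend(indices[:n_val])
        train.extend(indices[n_val:])
    return train, val


# Hypothetical, deliberately imbalanced labels standing in for the real dataset.
labels = ["happiness"] * 700 + ["sadness"] * 250 + ["disgust"] * 50

# Inspect the class distribution before splitting.
print(Counter(labels))

train_idx, val_idx = stratified_split(labels)
print(len(train_idx), len(val_idx))  # 900 100
```

Because every class is shuffled and cut on its own, the validation set here keeps the 70/25/5 proportions of the full label list, which makes validation scores easier to compare across classes.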

FIRST ITERATION:

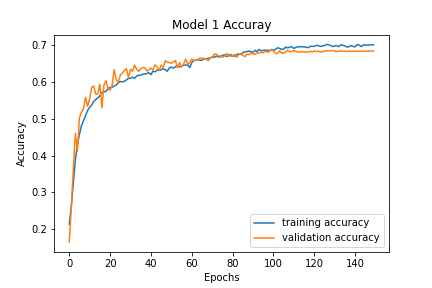

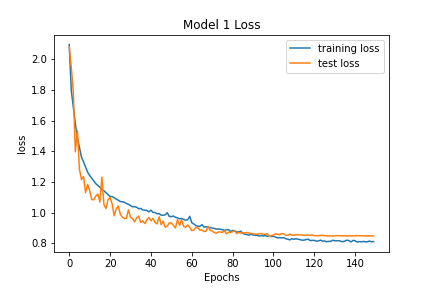

With my dataset ready, I went on to create a Convolutional Neural Network (CNN) in Python using the TensorFlow Keras library. A CNN is an artificial neural network that imitates how the visual cortex of the brain processes and recognizes images (Kim, 2017). It consists of a set of consecutive convolution and pooling layers: the former apply filters to the images to detect patterns and edges, and the latter reduce the dimensions of the images. On my first few attempts, the model performed really badly and execution took a long time. At that point I turned to the literature on optimizing the performance of deep learning models. Among my findings were important new parameters such as dropout and callbacks and, most importantly, a technique called data augmentation. Data augmentation is done programmatically: images are artificially manipulated and cloned, for example by zooming in and out or flipping horizontally. These adjustments improved the outcome of my model drastically and deepened my understanding of how to control the performance of an artificial neural network. My review also taught me how to properly evaluate a neural network model. Two important measures are the validation accuracy and the validation loss. In short, if the validation accuracy is high and the validation loss is low, it’s a really GOOD SIGN!! If the opposite, it’s a really BAD SIGN!!
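To make the two layer types more concrete, here is a small NumPy sketch of what a single convolution filter and a single pooling step do to a 48×48 image. It is only an illustration, not the Keras model from the project: the synthetic image, the hand-written edge filter and the function names `conv2d_valid` and `max_pool2d` are all assumptions. In a real CNN the filter values are learned during training rather than written by hand.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding.

    As in most deep learning libraries, the kernel is not flipped,
    so this is strictly a cross-correlation.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size block."""
    h, w = feature_map.shape
    h, w = h - h % size, w - w % size  # drop rows/columns that do not fill a block
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


# Synthetic 48x48 image: dark left half, bright right half.
image = np.zeros((48, 48))
image[:, 24:] = 1.0

# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]])

features = conv2d_valid(image, kernel)  # shape (46, 46), non-zero only at the edge
pooled = max_pool2d(features)           # shape (23, 23), edge response preserved
print(features.shape, pooled.shape)
```

The filter output is zero everywhere except along the boundary between the two halves, and pooling halves each dimension while keeping that response, which is the "detect patterns, then reduce dimensions" behaviour described above.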

Once my model had reached an acceptable score, it was time to test it in a live environment. The testing was carried out using OpenCV, an open-source computer vision library, which I used from Python, that serves various purposes such as object detection. I had to go through a couple of tutorials on YouTube to get a basic intuition for how OpenCV works, and I used a face detection library that recognizes a face in a video.
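The glue between detecting a face and classifying its emotion can be sketched as follows. This is a hedged illustration in plain NumPy, not the project's code: the `(x, y, w, h)` box is assumed to come from a face detector (OpenCV's cascade classifiers return rectangles in this form), the label order in `EMOTIONS` is an assumption that would have to match the order used in training, and the probability vector is a made-up stand-in for a model prediction.

```python
import numpy as np

# Assumed label order; it must match the class order used when training the model.
EMOTIONS = ["anger", "disgust", "fear", "happiness", "neutral", "sadness", "surprise"]


def prepare_face(gray_frame, box, size=48):
    """Crop a face box from a grayscale frame and shape it for the classifier."""
    x, y, w, h = box
    face = gray_frame[y:y + h, x:x + w]
    # Nearest-neighbour resize to size x size (cv2.resize would do this in practice).
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    resized = face[rows[:, None], cols]
    # Scale pixels to [0, 1] and add batch and channel axes: (1, size, size, 1).
    return (resized.astype("float32") / 255.0)[None, :, :, None]


def label_from_probabilities(probabilities):
    """Map the model's output vector to the most likely emotion name."""
    return EMOTIONS[int(np.argmax(probabilities))]


# Synthetic grayscale frame and a hypothetical detected face box.
frame = (np.arange(100 * 120).reshape(100, 120) % 256).astype("uint8")
batch = prepare_face(frame, box=(30, 20, 60, 60))
print(batch.shape)

# Made-up probabilities standing in for the model's prediction on `batch`.
fake_prediction = [0.05, 0.01, 0.04, 0.70, 0.10, 0.05, 0.05]
print(label_from_probabilities(fake_prediction))
```

In a live loop, the same two functions would run on every captured frame, with the resulting label drawn next to the detected face.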

Generally, the outcome of the first attempt looked good, so I went on to ask my colleagues to test it.

Note: All participants granted me permission to post their recordings of the test on this blog.

Participant 1

Participant 2

Participant 3

Participant 4

Generally, the model performs well, but it has trouble detecting sad emotions on male faces and angry emotions on female faces. My assumption is that this is due to the imbalance in the dataset itself and the low quality of the images.

SECOND ITERATION:

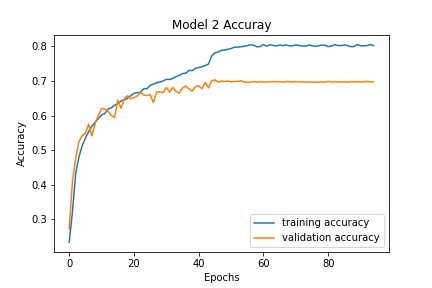

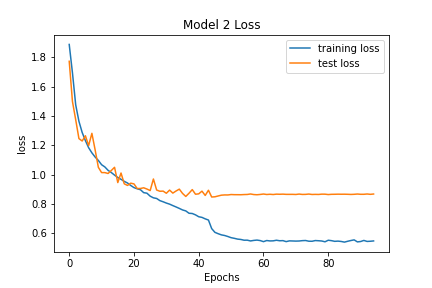

To enhance the performance of the model, I did more research on possible improvements and workarounds, and came across batch normalization. It is a technique proposed by Ioffe and Szegedy (2015) that stabilizes the training of a neural network by subtracting the batch mean from every activation in a layer and dividing the result by the batch standard deviation. The results shown in the figures indicate a slight increase in validation accuracy and a decrease in loss.
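The normalization step itself is short enough to write out. The sketch below shows the training-time computation in NumPy, assuming a toy batch of three samples with two features; `gamma` and `beta` are the learnable scale and shift from Ioffe and Szegedy (2015), fixed here at 1 and 0, and `eps` is a small constant that avoids division by zero. At inference time, frameworks use running averages of the mean and variance instead of the current batch statistics, which this sketch leaves out.

```python
import numpy as np


def batch_norm(activations, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each feature (column) over the batch (rows), then scale and shift."""
    mean = activations.mean(axis=0)
    var = activations.var(axis=0)
    normalized = (activations - mean) / np.sqrt(var + eps)
    return gamma * normalized + beta


# Toy batch: 3 samples, 2 features on very different scales.
batch = np.array([[1.0, 200.0],
                  [2.0, 400.0],
                  [3.0, 600.0]])

out = batch_norm(batch)
print(out.mean(axis=0))  # close to 0 for both features
print(out.std(axis=0))   # close to 1 for both features
```

After normalization, both features sit on the same scale regardless of their original range, which is what keeps the inputs to the next layer stable from batch to batch.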

Before testing the model in OpenCV, I fetched data from the Spotify Web API. Unfortunately, the API offers programmatic music streaming only as a beta version, and it is very limited in use, so I fetched data on recommended songs from the API based on genre instead. One more issue I came across is how Spotify measures happiness in a song. Very little documentation provides such information, and only one blog post on the Spotify Developers Community mentions a parameter called valence, so I chose it as my parameter when fetching data.
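For readers who want to try a similar fetch, the sketch below only builds the two HTTP requests and does not send them. It rests on several assumptions: that the app has an OAuth access token, that the Web API's recommendations endpoint (seeded by genre) and audio-features endpoint (which reports `valence` per track) are available to it, and that the token and track IDs shown are placeholders. The function names are hypothetical, and this is not necessarily how the data for this project was retrieved; endpoint availability should be checked against Spotify's current documentation.

```python
import urllib.parse
import urllib.request

API_BASE = "https://api.spotify.com/v1"


def recommendations_request(token, genre, limit=20):
    """Build a GET request for tracks recommended for one seed genre."""
    query = urllib.parse.urlencode({"seed_genres": genre, "limit": limit})
    return urllib.request.Request(
        f"{API_BASE}/recommendations?{query}",
        headers={"Authorization": f"Bearer {token}"},
    )


def audio_features_request(token, track_ids):
    """Build a GET request for audio features (including valence) of several tracks."""
    query = urllib.parse.urlencode({"ids": ",".join(track_ids)})
    return urllib.request.Request(
        f"{API_BASE}/audio-features?{query}",
        headers={"Authorization": f"Bearer {token}"},
    )


# Placeholder token and track IDs, for illustration only.
rec_req = recommendations_request("TOKEN", "pop", limit=10)
feat_req = audio_features_request("TOKEN", ["id1", "id2"])
print(rec_req.full_url)
print(feat_req.full_url)
```

Sending either request with `urllib.request.urlopen` and parsing the JSON body would give the track list and, from the second call, a `valence` value per track.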

Once the data was ready, I created the second iteration with the improved model to demonstrate how the music played changes based on the facial expressions captured from users. The test was again carried out with users. Music was not actually played; instead, a timer was set to change the text displayed in the top-left corner of the screen. The music changes based on the valence parameter taken from Spotify. For example, if an individual is experiencing sadness or fear, the songs selected have high valence and are more upbeat. The text displayed is the song, the artist, the duration of the song and its valence level.
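The selection rule can be sketched in a few lines. Only the sadness/fear rule comes from the description above; everything else is an assumption made for illustration: the `Song` fields, the made-up track data, the choice of a mid-valence song for all other emotions, and the exact format of the on-screen text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Song:
    title: str
    artist: str
    duration_s: int
    valence: float  # 0.0 (low) to 1.0 (high), as reported by Spotify


# Emotions for which the prototype should answer with upbeat music.
UPLIFT_EMOTIONS = {"sadness", "fear"}


def pick_song(emotion, songs, neutral_valence=0.5):
    """Pick the highest-valence song for sadness or fear, else a mid-valence one."""
    if emotion in UPLIFT_EMOTIONS:
        return max(songs, key=lambda s: s.valence)
    # Assumed behaviour for the remaining emotions: stay near the middle of the scale.
    return min(songs, key=lambda s: abs(s.valence - neutral_valence))


def overlay_text(song):
    """Format the text shown in the corner of the video frame."""
    minutes, seconds = divmod(song.duration_s, 60)
    return f"{song.title} - {song.artist} ({minutes}:{seconds:02d}), valence {song.valence:.2f}"


# Made-up tracks standing in for the data fetched from Spotify.
songs = [
    Song("Track A", "Artist A", 215, 0.91),
    Song("Track B", "Artist B", 187, 0.48),
    Song("Track C", "Artist C", 242, 0.15),
]

print(overlay_text(pick_song("sadness", songs)))
print(overlay_text(pick_song("neutral", songs)))
```

Driving `pick_song` from a timer, as in the test described above, would mean calling it with the most recently detected emotion each time the current song's duration runs out.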

Participant 1

Participant 2

Participant 3

Participant 4

REFLECTION

The whole process of completing the prototype and its iterations can be summarized in two words: challenging and rewarding. When I chose to delve into deep learning, I took it as a personal challenge, as the topic was quite new to me. The challenge was whether I could learn and cover a portion of a topic that is still quite new in the technology industry in a short period of time. Going through books, journal articles and tutorials, and exploring libraries such as TensorFlow Keras and OpenCV, reignited my passion for exploring new programming techniques. The rewarding part came when I applied these new skills to problems that I personally encounter in life, something I never had the chance to do in my previous job, where I was limited by time and resources.

CONCLUSION

The proposed prototype is a suggestion to look at emotional distress differently. As opposed to other approaches, it is not meant to constantly monitor users’ emotions, but rather to react to them. Many improvements can still be made, such as to the quality of the images and their actual content. As seen earlier, certain emotions were detected better on some individuals than on others, which I attribute to the imbalance found in the training dataset. Another important improvement to consider is the frequency of camera usage, as some testers preferred that their camera not be on the whole time, but rather turn on when a song is about to finish.

REFERENCES

Del Vecchio, T., & O’Leary, K. D. (2004). Effectiveness of anger treatments for specific anger problems: A meta-analytic review. Clinical Psychology Review, 24(1), 15–34. https://doi.org/10.1016/j.cpr.2003.09.006

Lyubomirsky, S., King, L., & Diener, E. (2005). The Benefits of Frequent Positive Affect: Does Happiness Lead to Success? Psychological Bulletin, 131(6), 803–855. https://doi.org/10.1037/0033-2909.131.6.803

Ekman, P. (2004). Emotions revealed. BMJ, 328(Suppl S5), 0405184. https://doi.org/10.1136/sbmj.0405184

Juslin, P. N. (2019). Musical Emotions Explained (Illustrated ed.). Oxford University Press.

Heshmat, H. (2019). Music, Emotions and Well-being. Psychology Today. https://www.psychologytoday.com/us/blog/science-choice/201908/music-emotion-and-well-being

Choi, A.-N., Lee, M. S., & Lee, J.-S. (2010). Group Music Intervention Reduces Aggression and Improves Self-Esteem in Children with Highly Aggressive Behavior: A Pilot Controlled Trial. Evidence-Based Complementary and Alternative Medicine, 7(2), 213–217. https://doi.org/10.1093/ecam/nem182

Guo, Y., Liu, Y., Oerlemans, A., Lao, S., Wu, S., & Lew, M. S. (2016). Deep learning for visual understanding: A review. Neurocomputing, 187, 27–48. https://doi.org/10.1016/j.neucom.2015.09.116

Deng, L. (2014). A tutorial survey of architectures, algorithms, and applications for deep learning. APSIPA Transactions on Signal and Information Processing, 3, 1. https://doi.org/10.1017/atsip.2013.9

Voulodimos, A., Doulamis, N., Doulamis, A., & Protopapadakis, E. (2018). Deep Learning for Computer Vision: A Brief Review. Computational Intelligence and Neuroscience, 2018, 1–13. https://doi.org/10.1155/2018/7068349

Di, W., Bhardwaj, A., & Wei, J. (2018). Deep Learning Essentials: Your hands-on guide to the fundamentals of deep learning and neural network modeling. Packt Publishing.

Chollet, F. (2017). Deep Learning with Python (1st ed.). Manning Publications.

Kim, P. (2017). Convolutional Neural Network. MATLAB Deep Learning, 121–147. https://doi.org/10.1007/978-1-4842-2845-6_6

Ioffe, S., & Szegedy, C. (2015, June). Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International conference on machine learning (pp. 448-456). PMLR. arXiv preprint arXiv:1502.03167