“Technology will never let you feel alone”

PREMISE

Blind people often carry a lot of tension in their bodies, yet they want to stay healthy and safe. A tool that guides them by voice could meet that need.

SYNOPSIS

In 2015, I almost lost my sight in an accident: contact lenses burnt my retina. I spent that night in the hospital with a damaged retina. It was the worst day of my life. Luckily, my eye recovered fully, but the experience made me realize what life could be like without sight. Anyone can lose their sight in a sudden accident. I became more empathetic towards blind people and wanted to highlight their needs, so I decided to dive into human pose estimation. It is an interesting area that can help with many human problems, for example for people who need personalized coaching. Blind people are one group that could benefit from it. I wanted to try it and see how this technology could change blind people’s lives.

What could make it different?

Background

Human pose estimation is an advanced form of human–computer interaction that opens up opportunities in personalized fitness and physical therapy. It makes several estimates possible: the body’s position (such as lying down or stretching), its location within a scene, its movement, and even the activity the body is performing, such as yoga or dancing.

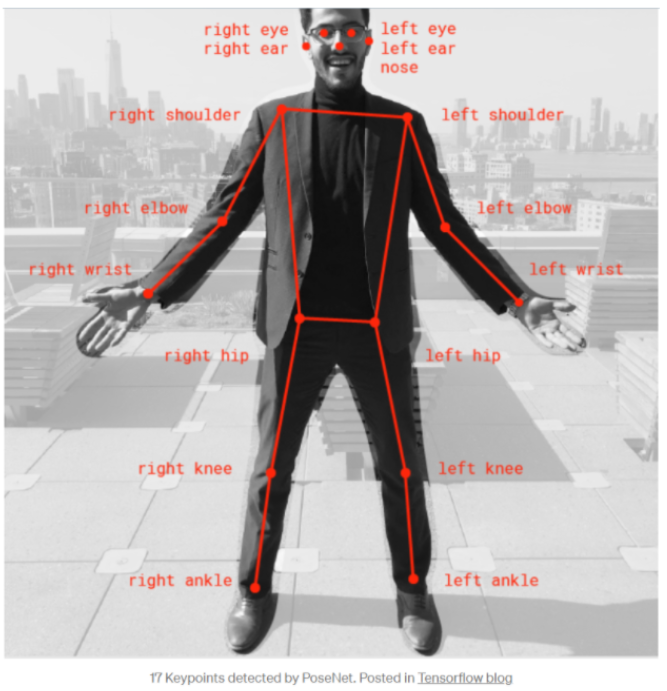

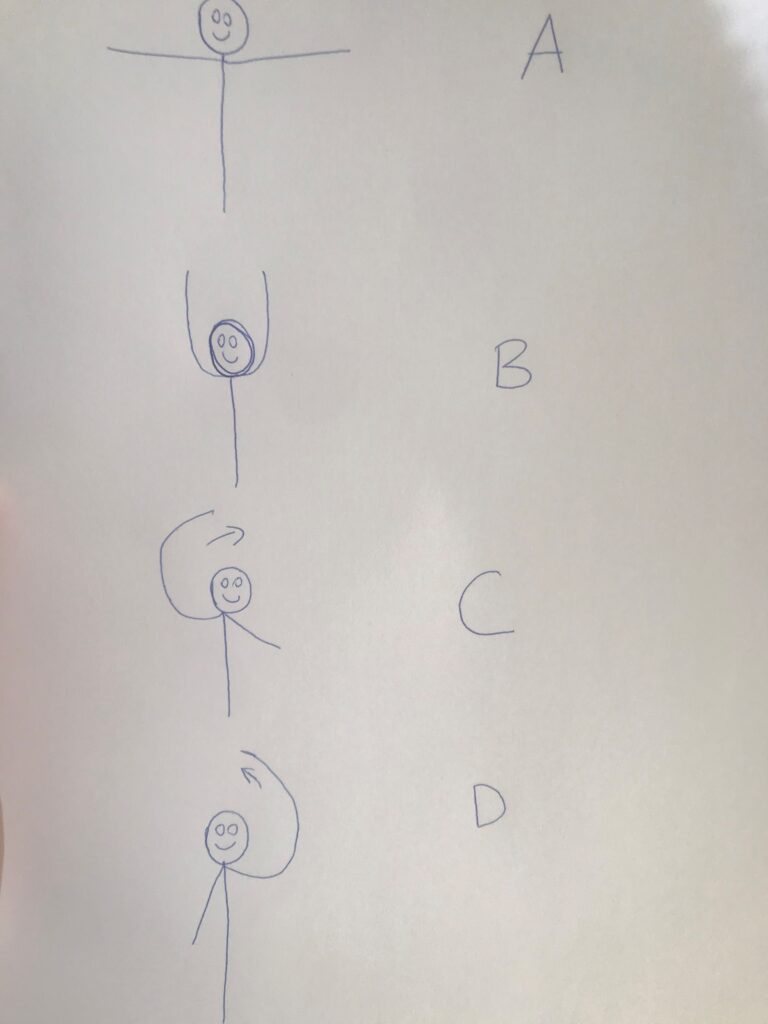

Human pose estimation can be done with PoseNet. It detects 17 key points that represent major joints such as elbows, knees and wrists, as shown in the picture.
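To make the 17 key points concrete, here is a minimal sketch of how one detected pose can be turned into a flat list of numbers for a classifier. The keypoint names follow PoseNet's output order; `flattenPose` and the made-up `examplePose` are hypothetical names used only for illustration, and the object shape (`part`, `score`, `position`) is assumed to match what PoseNet returns.

```javascript
// The 17 keypoint names PoseNet reports, in output order.
const KEYPOINT_NAMES = [
  'nose', 'leftEye', 'rightEye', 'leftEar', 'rightEar',
  'leftShoulder', 'rightShoulder', 'leftElbow', 'rightElbow',
  'leftWrist', 'rightWrist', 'leftHip', 'rightHip',
  'leftKnee', 'rightKnee', 'leftAnkle', 'rightAnkle',
];

// Hypothetical helper: flatten one pose into [x0, y0, x1, y1, ...].
function flattenPose(pose) {
  const inputs = [];
  for (const keypoint of pose.keypoints) {
    inputs.push(keypoint.position.x, keypoint.position.y);
  }
  return inputs;
}

// Made-up pose data, only to show the shape of the result.
const examplePose = {
  keypoints: KEYPOINT_NAMES.map((part, i) => ({
    part,
    score: 0.9,
    position: { x: i * 10, y: i * 5 },
  })),
};

console.log(flattenPose(examplePose).length); // 34 (17 keypoints, x and y each)
```

Each pose becomes 34 numbers, which is a convenient input size for a small classifier that tells poses apart.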

Problem Space

According to a global study in 2020, out of a world population of 7.79 billion, 49.1 million people are blind, 221.4 million have moderate visual impairment and 33.6 million have severe visual impairment (Bourne et al., 2020). With so many people affected, this disability cannot be neglected. The main health problem is that blind people tend to gain more weight than sighted people because they move less. A 2016 study in Malatya, Turkey, looked at physically disabled people aged between 20 and 65 (Bozkir, Özer, & Pehlivan, 2016). It found a significant relationship between disability and obesity status, and the prevalence of obesity among visually impaired people was 21.3%.

Being blind and being fit should be compatible, but it is not easy for most blind people to go to sports centres. During this pandemic, it is also riskier to go out and exercise when you cannot judge social distance or notice the people coming close to you. Another way to stay fit and healthy is to follow audio tutorials. Hearing the instructions for an exercise can help, but what if the poses are not done correctly? Who can guide the blind person constantly? What if a human voice could guide them while they exercise at home? How would that sound to blind people? If someone tells you to raise your arm to the side at shoulder height, you can use your eyes to check that you are doing it correctly, whereas blind people cannot.

Understanding the Target Group

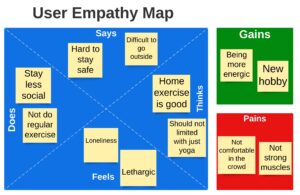

I joined an online community for blind and visually impaired people on social media (Facebook) to gather user insights, and asked how human pose estimation sounded to them. I made several attempts but managed only one short interview, with one person from the community.

He liked the idea. He told me that he had learnt Tai Chi several years ago and would have been happier if he had come across this technology back then. Based on our talk, I created a user empathy map, which showed me that I was on the right track.

Research and Experiment Process

The first phase of my research was desk research. Human pose estimation is widely used and popular in the computer vision field; I found lots of tutorials, blogs and websites about it.

First, I had to choose which technology to experiment with. For human pose estimation, the most widely used technique I found was PoseNet. PoseNet is a deep learning model for TensorFlow, and I played with the PoseNet demos on store.googleapis posenet, which use TensorFlow.js models.

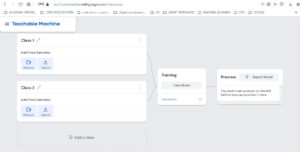

After the demo, I realized that PoseNet has confidence parameters. I then tried “Pose Project” in Teachable Machine. It had more detail and more stages, such as uploading and training, and there I learnt the term “epoch”: one complete pass through the training data. These two experiences made me realize what the stages of pose estimation are.
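The confidence parameters can be illustrated with a short sketch. Each keypoint comes with a score between 0 and 1, and low-scoring keypoints can be ignored. The helper name `confidentKeypoints`, the sample data and the 0.5 threshold are assumptions for illustration, not values taken from the demo.

```javascript
// Hypothetical helper: keep only keypoints whose score reaches minScore.
function confidentKeypoints(pose, minScore) {
  return pose.keypoints.filter((keypoint) => keypoint.score >= minScore);
}

// Made-up sample: the left wrist is hidden, so its score is low.
const samplePose = {
  keypoints: [
    { part: 'nose', score: 0.98, position: { x: 310, y: 120 } },
    { part: 'leftWrist', score: 0.35, position: { x: 150, y: 300 } },
    { part: 'rightWrist', score: 0.81, position: { x: 470, y: 305 } },
  ],
};

const visibleParts = confidentKeypoints(samplePose, 0.5).map((k) => k.part);
console.log(visibleParts); // [ 'nose', 'rightWrist' ]
```

Raising the threshold makes the result stricter but drops more joints, so it is a trade-off rather than a fixed rule.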

“Collect”, “train” and “load”.

Second, I tried to use PoseNet code in Python, but it did not work as I expected: it was slow, and although I had downloaded lots of libraries, it gave no output. So I looked for another way to try PoseNet in YouTube tutorials and found one that used JavaScript and the ml5.js library, which is built on top of TensorFlow.js. ML5 is a machine learning library for the web and requires no installation. In the end, I learnt that the most efficient way for me to try PoseNet was with ML5, right in my web browser.

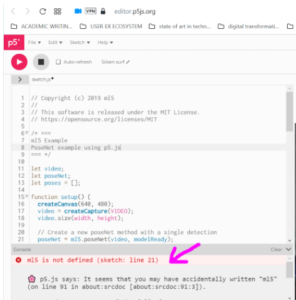

With ML5, I first used https://editor.p5js.org and ran some sample code there, but it gave an error: “ML5 is not defined”. I had to search for the answer. It is a common error for beginners, with answers on Stack Overflow and in GitHub repositories. The problem went away when I ran ml5.js on a local server.

After the ML5 error, I found the documentation (https://learn.ml5js.org/#/) and tried the code from there in my web browser to test it locally. Finally, it worked. Now I could continue to test and train pose estimation with ML5.

The most important insight was that I could see human pose estimation working without writing pages of code or buying additional equipment. ML5 is a very convenient library that makes machine learning approachable, especially for students (it costs nothing). No installation is required, and ML5 gives access to machine learning algorithms and models in the browser.

Starting My Prototype

Building my actual prototype began with the tutorials from thecodingtrain.com. After I downloaded the requirements to my computer, my web browser was able to detect my joints. The next step was to train the model on some basic yoga poses. With the help of thecodingtrain.com, I had code to collect and save the data, then train the model, and finally load it.

3 Stages of Pose Estimation with Sample Codes

The code detected my poses and collected them into a JSON file. I deliberately left some margin in my poses: I did not stand perfectly still while capturing, just in case, so that the model could also detect other people’s movements.
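The collection stage can be sketched roughly as follows. This is not the tutorial's code: `createCollector`, its methods and the JSON layout are hypothetical, and only show the idea of storing labelled pose samples and serializing them.

```javascript
// Hypothetical collector for labelled pose samples.
function createCollector() {
  const samples = [];
  return {
    // Store one sample: a label such as "A" plus the flattened x/y values.
    add(label, inputs) {
      samples.push({ label, inputs: [...inputs] });
    },
    // Count how many samples exist per label, to spot under-represented poses.
    countByLabel() {
      const counts = {};
      for (const sample of samples) {
        counts[sample.label] = (counts[sample.label] || 0) + 1;
      }
      return counts;
    },
    // Serialize everything to a JSON string that can be saved to a file.
    serialize() {
      return JSON.stringify({ data: samples });
    },
  };
}

// Made-up values: two slightly different recordings of pose "A", one of "B".
const collector = createCollector();
collector.add('A', [100, 200, 300, 200]);
collector.add('A', [104, 197, 296, 203]);
collector.add('B', [200, 50, 200, 350]);

console.log(collector.countByLabel()); // { A: 2, B: 1 }
```

Recording the same pose several times with small variations is what gives the model some tolerance for movement.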

Collection

Iteration 1: Same Poses Again To Increase The Dataset

In my first trial, I had not collected enough data to train a model, and it failed to load. Once I realized the problem, I increased the data set. I recorded enough samples for 4 poses and saved them into a JSON file with letter labels; for example, “A” means arms wide open. When the camera saw me with my arms wide open, it showed “A” on the screen.

Loading

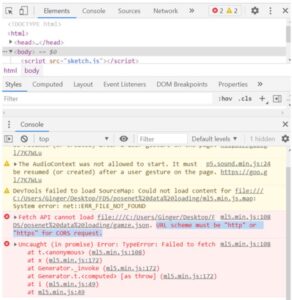

In this stage, I got the error “URL scheme must be “http” or “https” for CORS request.”

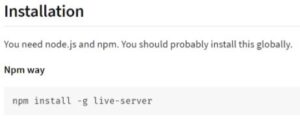

The problem was that the JavaScript code could not fetch the files from the local disk, which meant that Live Server could solve it. For that, npm and Node.js were needed. The solution was to install Live Server in my posenet folder. That part was hard to overcome and took time. Finally, npm launched a local server (running on Node.js), and the server started to serve the HTML properly.
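The rule behind this error can be shown with a small check. A relative path such as a model file is resolved against the address of the page, so a page opened from disk produces a `file:` address, while the same page served locally produces an `http:` one. The function name `canFetchFrom`, the folder path and the port number are all hypothetical.

```javascript
// Hypothetical check: would a resource requested by this page use http(s)?
function canFetchFrom(pageUrl, resourcePath) {
  // Resolve the relative path against the page address, as the browser does.
  const resolved = new URL(resourcePath, pageUrl);
  return resolved.protocol === 'http:' || resolved.protocol === 'https:';
}

// Page opened by double-clicking the HTML file (made-up path).
console.log(canFetchFrom('file:///home/me/posenet/index.html', 'model/model.json')); // false

// Same page served by a local server (made-up port).
console.log(canFetchFrom('http://127.0.0.1:8080/index.html', 'model/model.json')); // true
```

This is why serving the folder through a local server removes the error without any change to the page's own code.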

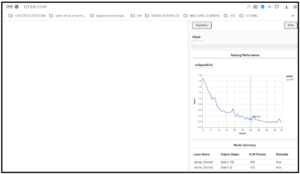

The picture shows my model summary. As in the default settings, I trained for 50 epochs (an epoch is a single run through the entire data set (Gaillard, 2019)).

With the final part, “Deploying”, I finalized and tested my model, but something was missing: “Text to Speech”.

Iteration 2: Adding Feature To My Prototype

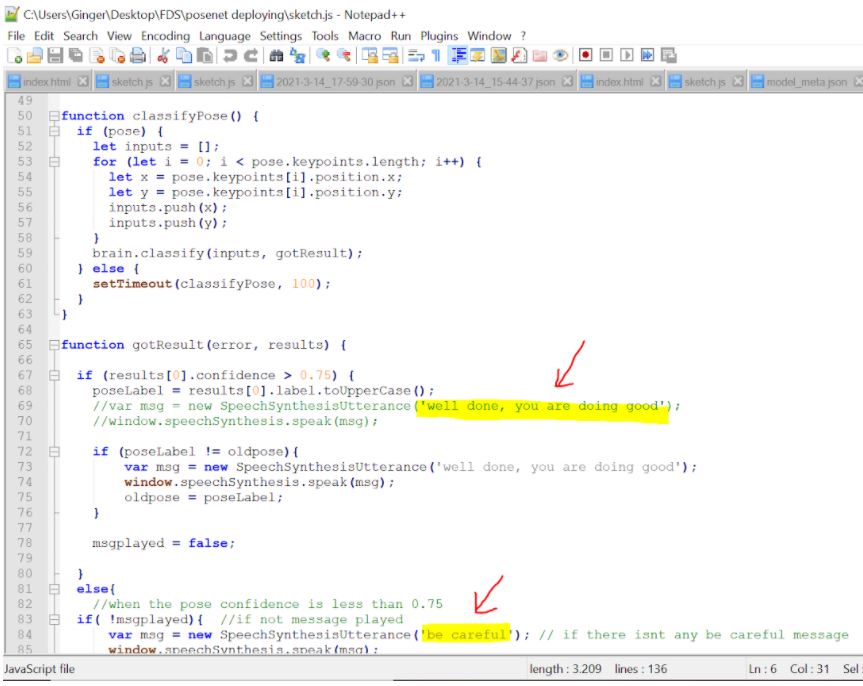

In the final week of my project, I added text to speech, which I had been keen to do. The hardest part was controlling when the speech should stop and when it should start again. In my first attempt, it never stopped talking, so I had to write a condition for it in the JavaScript.

I used Google text-to-speech in JavaScript. I found the code on Stack Overflow.
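One way to write such a condition is to speak only at the moment the pose changes from wrong to correct, instead of on every video frame. The sketch below is an assumption about how this could look, not the code from Stack Overflow: `createAnnouncer` and `browserSpeak` are hypothetical names, and `browserSpeak` uses the browser's built-in Web Speech API rather than whichever service the prototype used.

```javascript
// Hypothetical announcer: calls speak(message) once per correct-pose "entry".
function createAnnouncer(speak, targetLabel, message) {
  let wasCorrect = false;
  return function onLabel(label) {
    const isCorrect = label === targetLabel;
    // Speak only on the transition from "not correct" to "correct".
    if (isCorrect && !wasCorrect) {
      speak(message);
    }
    wasCorrect = isCorrect;
  };
}

// In a browser, speech could be produced with the Web Speech API (assumption).
function browserSpeak(text) {
  window.speechSynthesis.speak(new SpeechSynthesisUtterance(text));
}

// Demo with a stand-in speak function that records what would be said.
const spoken = [];
const announce = createAnnouncer((text) => spoken.push(text), 'A', 'Well done');
for (const label of ['B', 'A', 'A', 'A', 'B', 'A']) {
  announce(label);
}
console.log(spoken.length); // 2
```

In the page itself, `browserSpeak` would be passed in place of the stand-in function, so that holding the pose for many frames produces a single spoken message.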

The Video: My Final Prototype

When it recognizes the correct pose as it was saved, it immediately says “Well done” to me.

Reflection & Conclusion

I learnt that everything is figureoutable. I can access any technology because the information is out there. Watching tutorials and running into errors pushes you to learn more, so failure means progress. I now know what goes on “in the kitchen” of pose estimation with PoseNet.

My model works with a 75% confidence level; this could be raised from 75% to 85% to make the model more careful. It should definitely be improved with different body types, so that the model does not depend on one person. It would be wise to train on data from different human bodies, although it worked okay with just mine. Text to speech worked well too, but because the poses saved in my JSON file were similar to each other, it sometimes detected a “correct pose” while I was still switching from one pose to another. The model will work better when it is trained on bodies with different proportions (length, size). Visually impaired people could benefit from this technology in every kind of physical activity. It was a pity that I could not conduct a focus group or a usability test with a blind person. The next step will be to improve the prototype so that other users can try it, and to put it on my personal website as a case study.
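Both ideas, a stricter confidence level and fewer false “correct pose” detections while switching poses, could be combined in a small gate like the one below. This is a sketch of a possible improvement, not something the prototype does: `createPoseGate`, the three-frame requirement and the sample results are assumptions, and the `label` and `confidence` fields are assumed to match what the classifier returns.

```javascript
// Hypothetical gate: accept a label only if it is confident enough
// and has been seen for several frames in a row.
function createPoseGate(minConfidence, framesRequired) {
  let lastLabel = null;
  let streak = 0;
  return function accept(result) {
    if (result.confidence < minConfidence) {
      // Too uncertain: forget the current streak.
      lastLabel = null;
      streak = 0;
      return null;
    }
    streak = result.label === lastLabel ? streak + 1 : 1;
    lastLabel = result.label;
    return streak >= framesRequired ? result.label : null;
  };
}

// Made-up classifier results for six consecutive frames.
const gate = createPoseGate(0.85, 3);
const frameResults = [
  { label: 'A', confidence: 0.9 },
  { label: 'B', confidence: 0.88 },
  { label: 'B', confidence: 0.8 },
  { label: 'A', confidence: 0.9 },
  { label: 'A', confidence: 0.95 },
  { label: 'A', confidence: 0.91 },
];
const accepted = frameResults.map((result) => gate(result));
console.log(accepted); // [ null, null, null, null, null, 'A' ]
```

Brief flickers between labels during a transition are ignored, at the cost of a short delay before a held pose is confirmed.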

Sources

Bourne, R. R. A., Adelson, J., Flaxman, S., Briant, P., Bottone, M., Vos, T., … Taylor, H. R. (2020, June 10). Global prevalence of blindness and distance and near vision impairment in 2020: Progress towards the Vision 2020 targets and what the future holds. https://iovs.arvojournals.org/article.aspx?articleid=2767477.

Bozkir, Ç., Özer, A., & Pehlivan, E. (2016, September 8). Prevalence of obesity and affecting factors in physically disabled adults living in the city centre of Malatya. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5020754/.

Gaillard, F. (2019). Epoch (machine learning): Radiology Reference Article. Radiopaedia Blog RSS. https://radiopaedia.org/articles/epoch-machine-learning.

Oved, D. (2018, September 27). Real-time human pose estimation in the browser with TensorFlow.js. https://medium.com/tensorflow/real-time-human-pose-estimation-in-the-browser-with-tensorflow-js-7dd0bc881cd5.

Shiffman, D. (2020, January 9). ml5.js: Pose Estimation with PoseNet. The Coding Train. https://thecodingtrain.com/learning/ml5/7.1-posenet.html.