PREMISE

Online gender discriminatory speech: See it, Name it, Visualize it.

SYNOPSIS

The majority of women experience gender discriminatory speech in their daily lives, both online and offline. Previous research has shown that gender discrimination is not a harmless annoyance but a serious problem with negative psychological and physical effects (Swim and Campbell, 2001).

Although blatant sexist comments are less common than they used to be after years of advocacy by the feminist movement, joking or subtle gender discriminatory speech is still common. I wanted to detect gender discriminatory comments on Twitter with machine learning and to use an Arduino and a robotic arm to represent them in real time.

This “visualize gender discriminatory speech” project aims to detect gender discriminatory speech and show that it can indeed cause harm.

PREFACE

Gender discriminatory speech perpetuates and exacerbates gender discrimination. For example, if children are taught that women are not suited to STEM (science, technology, engineering, and mathematics), girls may perform worse because they are less confident in these subjects. This leads to a lower sense of belonging among girls and women in STEM fields, which further reinforces the disadvantaged position of women in these fields (Leaper, 2014).

I have always been concerned with gender-related topics, because in East Asia traditional gender roles still severely restrict women, and many gender discriminatory remarks and behaviors are considered “normal”. In many cases, people are not sensitive enough to realize the impact or harm that gender discriminatory speech can have on others.

How to make people aware of what gender discriminatory speech is, and how to make them realize that it is not a harmless joke but can cause real psychological and physical harm to women, is a question I have been thinking about.

PLAN

I chose women between the ages of 18 and 36 as the target group. At this stage of life, people (women especially) face a variety of choices: what to study, how to develop a career, whether to start a family, how to balance different roles, etc.

However, making a choice means giving up other options, and in doing so they are often under pressure from the opinions of people around them, with gender discriminatory speech having a negative impact.

The following questions have been formulated to guide this project:

- How to define gender discriminatory speech?

- How to detect gender discriminatory speech?

- How to visualize gender discriminatory speech?

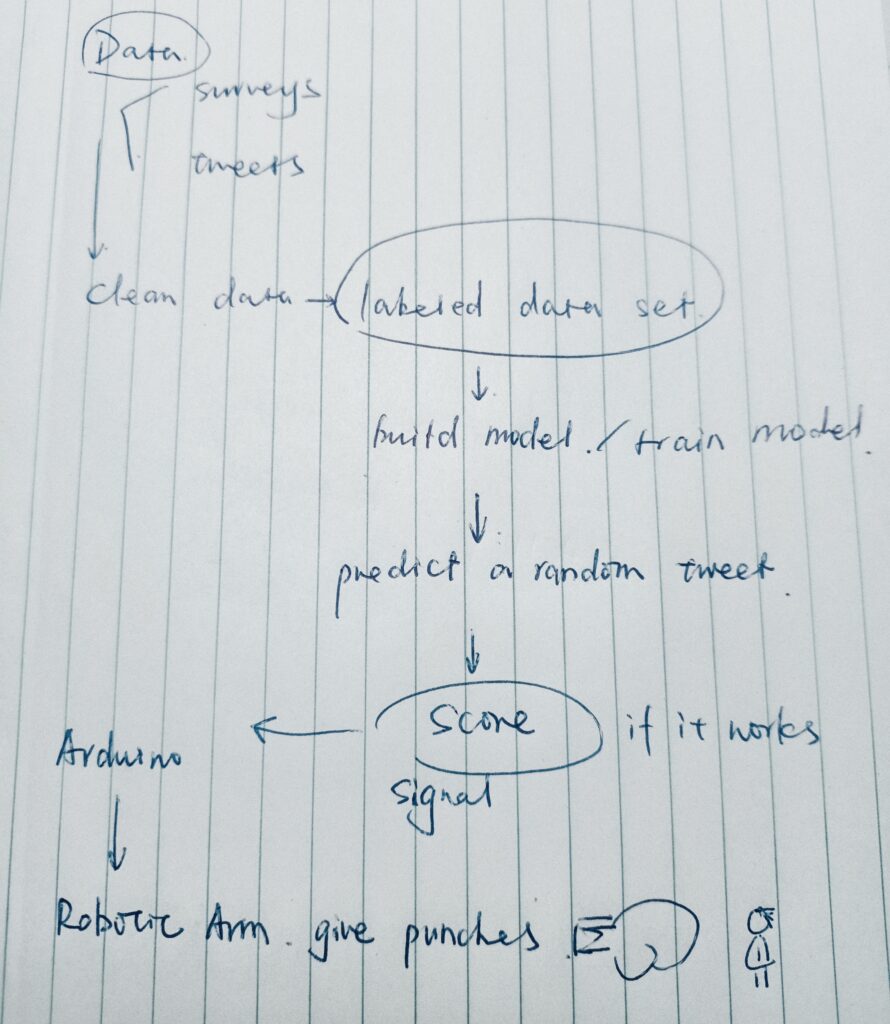

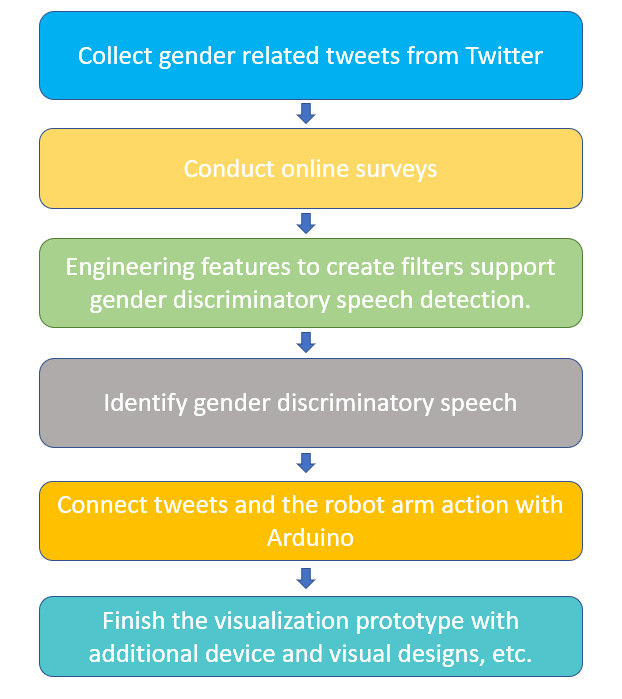

Order of action plan:

PROCESS

1. DATA

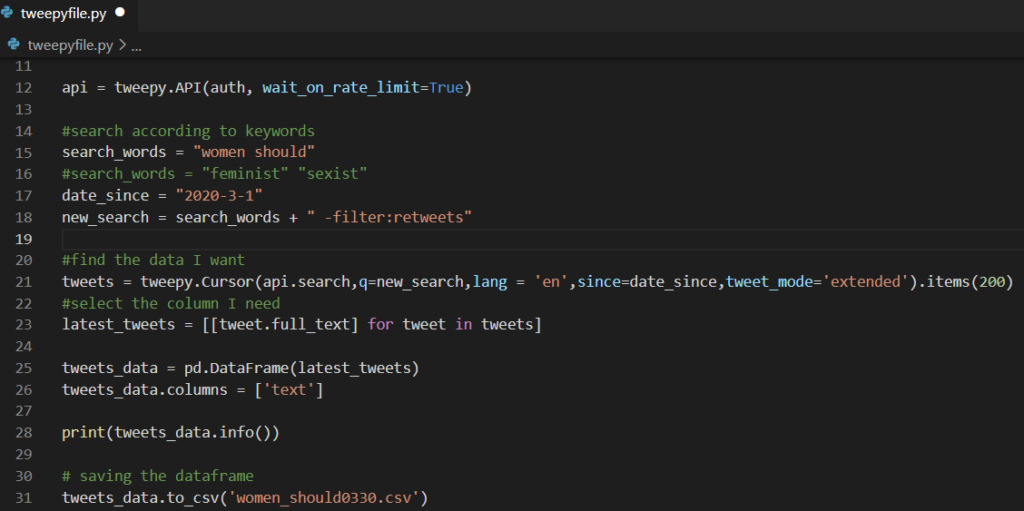

1.1. Collecting data

Explicit discriminatory remarks are not as common on Twitter as on Chinese social networks. To ensure a sufficiently large data set and make the project more inclusive, I collected data in both Chinese (survey) and English (Twitter).

The following related keywords were used to collect tweets:

In the surveys, participants provided discriminatory remarks they had encountered at school, at work, or in daily life. Their topics and forms of expression are similar to those of gender discriminatory tweets, so I combined the two sources for training.

1.2. Labelled data set

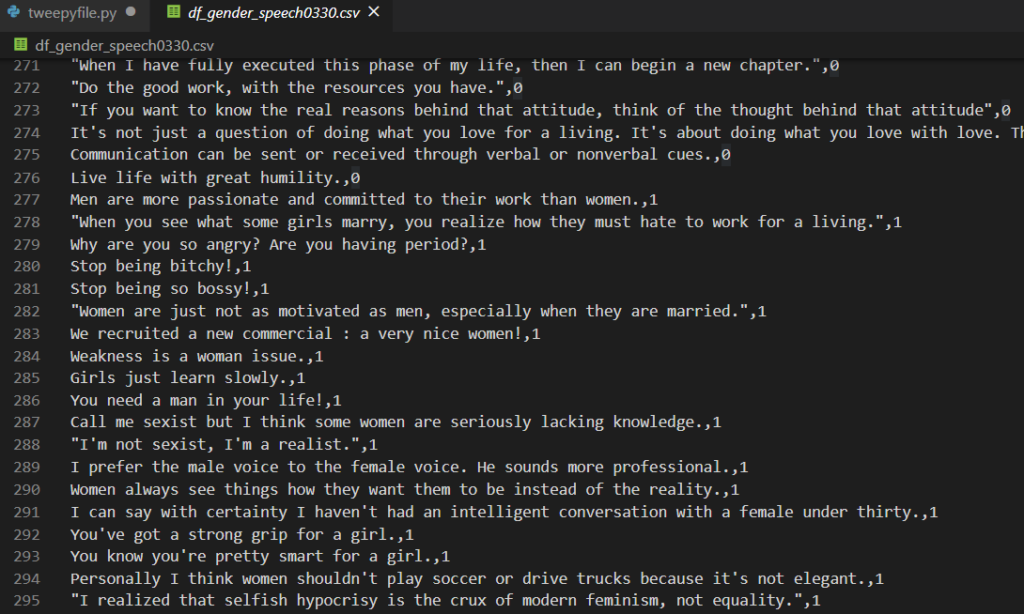

In this project, criteria for gender discriminatory tweets include: differential treatment due to gender, sexist remarks, and offensive stories or jokes (Brooks & Perot, 1991). Sexist remarks and offensive stories or jokes are all gender discriminatory speech.

I found that many of the collected tweets were not actually related to gender equality or discrimination. I then manually labelled each tweet with 0 for non-gender-discriminatory speech and 1 for gender discriminatory speech.

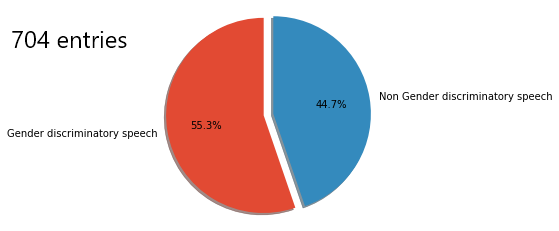

By March 12th, I had built a data set of 264 entries for the first iteration.

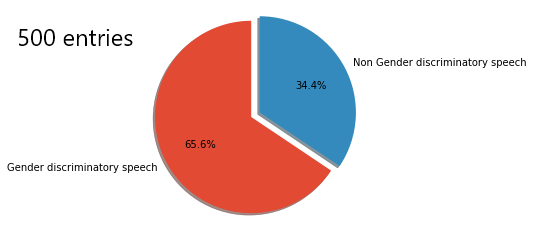

By March 19th, I had built a data set of 500 entries for the second iteration.

By the end of March, I had built a data set of 704 entries for the third iteration.

Insight:

- In my personal experience, gender discriminatory remarks are not very common in everyday life in Western societies, but they are still very much present on the internet.

- The gender discriminatory remarks collected from the Chinese surveys are highly similar to the statements collected from Twitter in English.

2. DESIGN

In the early stages of this course, I was really impressed by the “Smell of Data” project (Wijnsma, 2014). Because the smell is associated with data leakage, it can function as a warning mechanism. In the same way, I wanted to make a project that associates gender discriminatory speech with a physical response.

At first I considered using the Arduino to make a noise, to demonstrate that gender discriminatory speech is difficult to ignore and really annoying.

But then I realized that the harm gender discriminatory speech causes to women is more immediate, obvious, and powerful. To make the “harm” more intuitive, I decided to use a robotic arm to throw punches.

3. TECHNOLOGY

3.1 Machine Learning

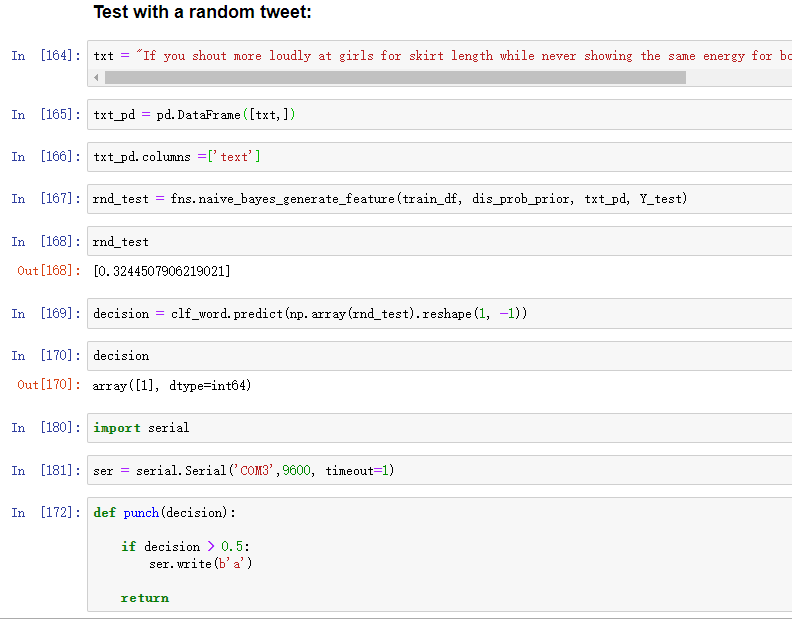

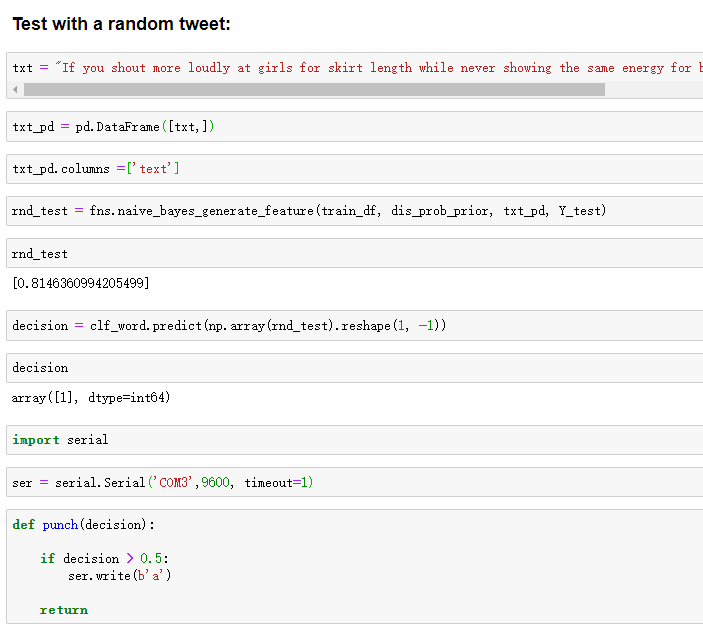

First iteration:

In the FML course, I took part in an AI project that focused on feature engineering and model building for fake news detection. My groupmates and I tried many features: “count of words in uppercase & lowercase”, “part of speech”, “sentiment analysis”, “word frequency”, “tagged bigram”, “readability grade”, “word embedding”, etc. According to the results of our teamwork, the features with the highest correlation were word frequency, stopwords, word count, uppercase count, and part of speech.

I tried to build models with these features, but the uppercase count, word count, and stopwords features did not perform well on my data set. I think this is because part of my data set comes from surveys: although the content is gender discriminatory speech, its writing style differs from that of tweets and should not be learned by the model. So I decided not to use these three features.

Insight:

- For a small data set (264 entries), different data sources can have a significant impact on the model’s predictions.

- It is better not to conflate gender discriminatory speech with commonplace offensive language. I noticed many people using f*ck, b*tch, sl*t in their tweets to describe their own daily lives.

Second iteration:

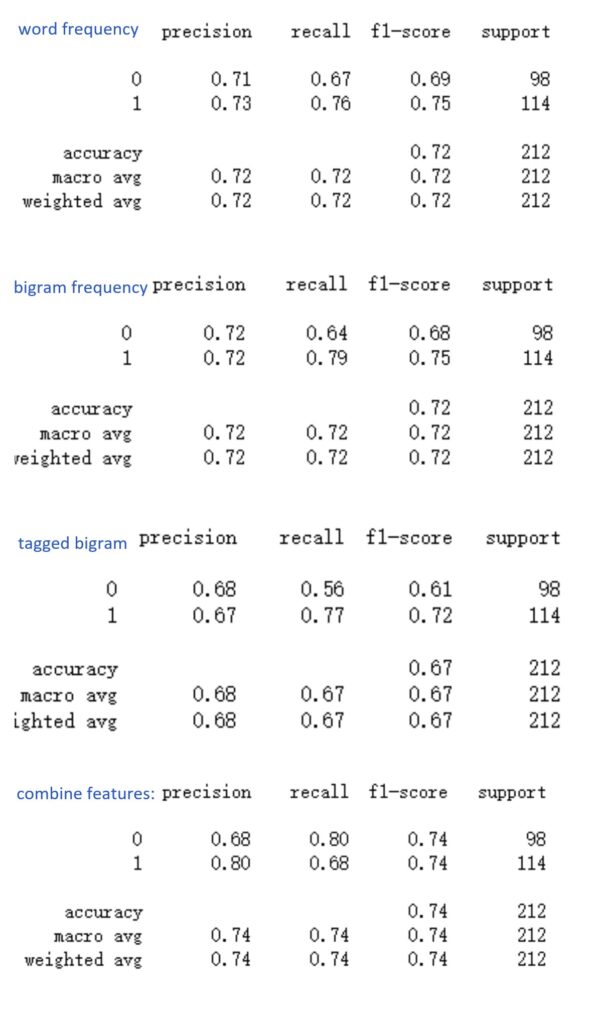

The features I implemented were:

- word frequency

- bigram frequency: use nltk.bigrams to split the tweet text into a list of bigrams.

- bigram tagging frequency: use nltk.corpus to tag the bigrams with parts of speech, then use the occurrences of each type of tagged bigram as a feature.
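The three features above can be sketched roughly as follows. This is a minimal illustration rather than the project code: it uses only the standard library so it runs without downloads, `tokenize`, `toy_tag`, and `extract_features` are hypothetical names, and the tiny tag dictionary is a stand-in for a trained part-of-speech tagger such as `nltk.pos_tag`.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase a tweet and split it into word tokens (a simplifying assumption)."""
    return re.findall(r"[a-z']+", text.lower())


def bigrams(tokens):
    """Adjacent pairs of items; nltk.bigrams(tokens) yields the same pairs."""
    return list(zip(tokens, tokens[1:]))


# Hypothetical toy tagger, for illustration only. A real pipeline would use
# a trained part-of-speech tagger instead of this lookup table.
TOY_TAGS = {"women": "NOUN", "should": "VERB", "stay": "VERB",
            "at": "ADP", "home": "NOUN"}


def toy_tag(tokens):
    """Return one tag per token, with "X" for words the toy table does not know."""
    return [TOY_TAGS.get(token, "X") for token in tokens]


def extract_features(text, tag=toy_tag):
    """Count words, word bigrams, and part-of-speech bigrams in one tweet."""
    tokens = tokenize(text)
    tags = tag(tokens)
    features = Counter()
    for token in tokens:
        features[("word", token)] += 1
    for pair in bigrams(tokens):
        features[("bigram", pair)] += 1
    for pair in bigrams(tags):
        features[("tag_bigram", pair)] += 1
    return features


example = extract_features("Women should stay at home")
for name, count in sorted(example.items(), key=str):
    print(name, count)
```

Keeping all three feature families in one `Counter` keyed by `(family, value)` makes it easy to drop a family later, as was done with the uppercase count, word count, and stopwords features in the first iteration.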

The models used were Naive Bayes and SVM.
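To illustrate the Naive Bayes half of this setup, here is a small multinomial Naive Bayes classifier with add-one smoothing written from scratch. It is only a sketch under stated assumptions: the class name, the smoothing value, and the four training sentences are invented for illustration and are not taken from the project data set, and the SVM is not shown.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Multinomial Naive Bayes over token lists, with Laplace smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha  # smoothing strength (assumed value)

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.token_counts = defaultdict(Counter)
        for tokens, label in zip(docs, labels):
            self.token_counts[label].update(tokens)
        self.vocab = {t for counts in self.token_counts.values() for t in counts}
        return self

    def log_scores(self, tokens):
        """Log prior plus smoothed log likelihood for each class."""
        total_docs = sum(self.class_counts.values())
        scores = {}
        for label, n_docs in self.class_counts.items():
            counts = self.token_counts[label]
            denom = sum(counts.values()) + self.alpha * len(self.vocab)
            score = math.log(n_docs / total_docs)
            for token in tokens:
                if token in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[token] + self.alpha) / denom)
            scores[label] = score
        return scores

    def predict(self, tokens):
        scores = self.log_scores(tokens)
        return max(scores, key=scores.get)


# Invented toy data: 1 = gender discriminatory, 0 = not.
train_docs = [
    ["women", "cannot", "drive"],
    ["girls", "are", "bad", "at", "math"],
    ["great", "weather", "today"],
    ["my", "sister", "loves", "math"],
]
train_labels = [1, 1, 0, 0]

model = NaiveBayes().fit(train_docs, train_labels)
print(model.predict(["women", "cannot", "code"]))
print(model.predict(["great", "weather"]))
```

The log prior term in `log_scores` is where the share of each label in the data set enters the prediction, which is why changing the mix of labelled tweets can change the results.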

Insight:

- The model predicts well on the data set but poorly on random tweets, perhaps because the data set is still quite small.

- Because many of the tweets returned by the keyword searches are not gender related, I had added only a selection of them to the data set. I should include more non-gender-related tweets so that the data set is closer to the mix of tweets the model will see at random.

Third iteration:

The model’s predictions on the data set were quite good, but its predictions on random tweets were not.

Because many of the tweets from the keyword searches are not gender related, I had added only a selection of them to the data set, so the prior probability of discriminatory speech was quite high.

I decided to include more non-gender-related tweets so that the data set is closer to the mix of tweets the model will see at random.

Insight:

- Although the accuracy decreased, I tested random new tweets and the actual predictions were a little better in the third iteration.

- A possible reason: the proportion of the original labels in the data set affects the predicted result. Because I included more non-gender-discriminatory tweets, the prior probability of discriminatory speech is lower than before (65.6% -> 55.3%), hence a lower baseline.

- A larger training set would allow more complicated models to work better, so there is still room for improvement as more data is collected.
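The link between the prior probability and the baseline can be made concrete with a short calculation. In this sketch, "baseline" is assumed to mean the accuracy of always predicting the more frequent label; the function names are hypothetical, and the two shares are the ones reported above.

```python
def positive_share(labels):
    """Fraction of entries labelled 1 (gender discriminatory)."""
    return sum(labels) / len(labels)


def majority_baseline(share):
    """Accuracy reached by always predicting the more frequent label."""
    return max(share, 1.0 - share)


# Prior probabilities reported for the second and third iterations.
for name, share in [("second", 0.656), ("third", 0.553)]:
    print(f"{name} iteration baseline: {majority_baseline(share):.1%}")
```

Under this assumption, a model has to beat 65.6% accuracy to be useful in the second iteration but only 55.3% in the third, so a drop in raw accuracy does not necessarily mean the model got worse.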

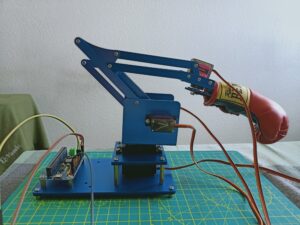

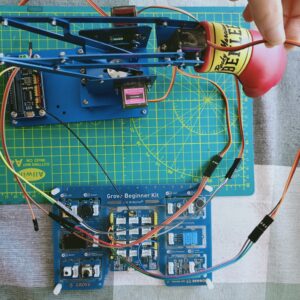

3.2 Robotic Arm

Assemble the robotic arm, then attach a mini glove to the “pliers”.

3.3 Arduino

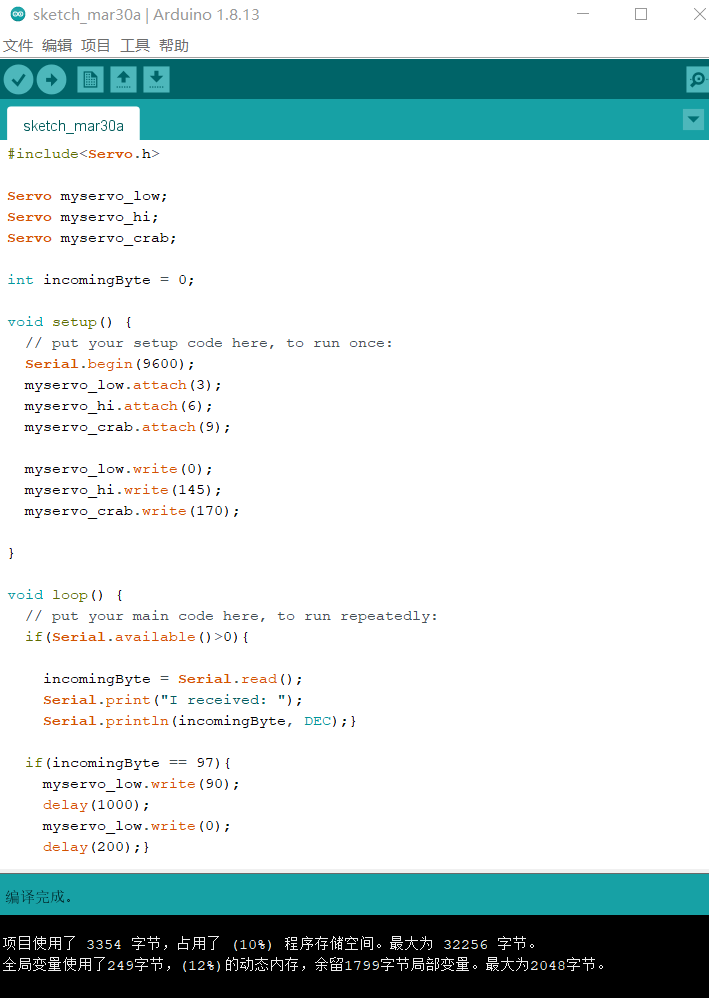

As marked in the image, pins 3, 6, and 9 are the points that connect to the Myservo servos on the robotic arm.

I tried different angles to make sure the robotic arm can throw a powerful straight punch.

Myservo_low is in charge of throwing punches.

Myservo_hi raises the fist. 145 is the angle that worked best in my tests.

Myservo_crab holds the boxing glove. 170 is the angle at which the crab holds the glove tightest.

3.4 Detecting real-time tweets and sending a response to Arduino

The coding and testing for real-time tweet detection are still ongoing. The code will make repeated queries on Twitter based on the selected keywords, predict whether the returned tweets are discriminatory, and send a signal to the Arduino to throw a punch when they are.

The query frequency must be low enough that Twitter does not flag it as abnormal behavior (which would lead to the developer ID being banned), but high enough to cover the keywords sufficiently often.
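One possible way to balance these two constraints is to cycle through the keywords in turn while enforcing a minimum gap between queries. The sketch below is an assumption about how this could look, not the project code: `poll_keywords`, `search`, `handle`, and `min_interval` are hypothetical names, `search` stands in for whatever Twitter client call is used, and the right interval depends on the developer account limits.

```python
import itertools
import time


def poll_keywords(keywords, search, handle, min_interval, max_queries,
                  clock=time.monotonic, sleep=time.sleep):
    """Query one keyword at a time, round-robin, with at least
    min_interval seconds between the starts of two queries."""
    last_query = None
    for _, keyword in zip(range(max_queries), itertools.cycle(keywords)):
        if last_query is not None:
            wait = min_interval - (clock() - last_query)
            if wait > 0:
                sleep(wait)  # slow down so the query rate stays under the limit
        last_query = clock()
        for tweet in search(keyword):
            handle(tweet)


def stub_search(keyword):
    """Stand-in for a real Twitter query; returns one fake tweet per call."""
    return [f"tweet matching '{keyword}'"]


# Placeholder keywords; the real keyword list is not reproduced here.
poll_keywords(["keyword one", "keyword two"], stub_search, print,
              min_interval=0.0, max_queries=4)
```

Passing `clock` and `sleep` as parameters keeps the loop testable without waiting in real time, and `handle` is the place where the classifier and the Arduino signal would be plugged in.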

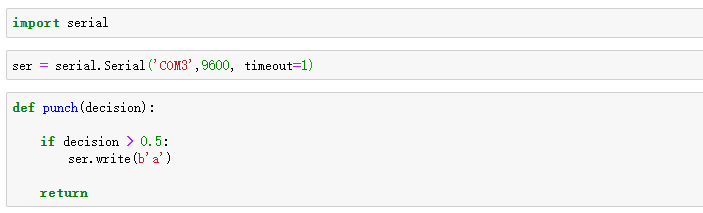

I’m still investigating the restrictions on Twitter developer accounts to decide on the best policy. The function for sending the signal to the Arduino is implemented as below:

When a tweet is detected as discriminatory, Python sends an “a” to the Arduino via the serial port, and the Arduino then triggers the punch of the robotic arm.
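A minimal sketch of this signalling step, assuming the pyserial package is used for the serial connection. The function names, the device name passed to `open_arduino`, and the 9600 baud rate are assumptions and must match the actual setup; the demonstration at the bottom uses an in-memory buffer instead of real hardware.

```python
import io

PUNCH_SIGNAL = b"a"  # the single character Python sends to trigger a punch


def open_arduino(device, baud_rate=9600):
    """Open the serial port with pyserial. The device name and baud rate
    are assumptions and must match the Arduino sketch."""
    import serial  # pyserial, imported lazily so the demo runs without it
    return serial.Serial(device, baud_rate, timeout=1)


def send_punch(port):
    """Write the punch signal to an open serial-like object and flush it."""
    port.write(PUNCH_SIGNAL)
    port.flush()


def react(prediction, port):
    """Send the signal only when the classifier output is 1 (discriminatory)."""
    if prediction == 1:
        send_punch(port)
        return True
    return False


# Demonstration with an in-memory buffer standing in for the serial port.
fake_port = io.BytesIO()
react(1, fake_port)
react(0, fake_port)
print(fake_port.getvalue())
```

With real hardware, `fake_port` would be replaced by the object returned from `open_arduino`. Many Arduino boards reset when the port is opened, so a short pause before the first write may be needed.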

RESULT

CONCLUSION

Online, real-time data collection and interactive devices make a very good combination for visualizing the damage inflicted by gender discrimination. Together they can turn a concept from an online community into a real-world experience, bringing more “direct” feelings to the audience.

I built a deeper understanding of the state of gender discrimination while building the prototype. Continuously reading so much gender discriminatory speech made me feel sick. Therefore, I believe my effort to visualize gender discrimination is worthwhile.

There is no ideal one-size-fits-all solution for natural language processing problems. A different model needs to be built for each specific need to achieve the desired result. The prediction performance of the model can also be improved further by building a larger training set.

The final video does exhibit the damage, but it could be improved with a more powerful robotic arm. Although I chose a very soft plastic figure, the damage is still not obvious.

On how to continue this research:

Ideally, a larger robotic arm would throw the punches, with different levels of punching force and angle depending on the level of the speech (blatant, covert, or subtle discrimination). It could also use figures of different colors and ages.

The training data set and the machine learning part can also be improved.

It could also be connected to a voice system that reads out the tweets as it punches an audience member in the face. As an art installation, a large-scale robotic arm connected to a voice system would be more impactful. Machine vision should also be used to control the fist position and avoid injury.

REFERENCE

Brooks, L., & Perot, A. R. (1991). Reporting sexual harassment: Exploring a predictive model. Psychology of Women Quarterly, 15, 31–47.

Davidson, T., Warmsley, D., Macy, M., & Weber, I. (2017). Automated hate speech detection and the problem of offensive language. Proceedings of the Eleventh International AAAI Conference on Web and Social Media, 512–515.

Leaper, C. (2014). Do I belong?: Gender, peer groups, and STEM achievement. International Journal of Gender, Science and Technology, 7(2).

Swim, J. K., & Campbell, B. (2001). Sexism: Attitudes, beliefs, and behaviors. In R. Brown & S. Gaertner (Eds.), The Handbook of social psychology: Intergroup relations (Vol. 4, pp. 218–237). Malden, MA: Blackwell.

Su, Q., Wan, M., Liu, X., & Huang, C.-R. (2020). Motivations, Methods and Metrics of Misinformation Detection: An NLP Perspective. Natural Language Processing Research, 1–13.

Wijnsma, L. (2014). Smell of Data. https://smellofdata.com/