“Accept everything about yourself – I mean everything. You are you, and that is the beginning and the end – no apologies, no regrets.” ~Clark Moustakas

Premise

The digitization of mental health and emotions offers opportunities for accessible and ubiquitously available care, for example through conversational agents and chatbots.

Synopsis

This story started on the day my daughter’s neck and part of her arm were burned. She got better after a while, and because she is just two years old, her skin healed very quickly. But one problem remained: she checked her hand every minute, and it was obvious that she was thinking about it all the time. I chose this topic to help people with skin disorders cope with their skin and body image concerns. People with skin conditions think about the impact of their disease on their appearance every day; even my two-year-old daughter did.

Because skin diseases are rarely life-threatening, they often receive less attention than more serious diseases. Yet the psychosocial effect of skin diseases is often comparable to, if not greater than, that of other chronic medical conditions. According to the British Association of Dermatologists, 85 percent of patients with skin disease report that the psychosocial effects of their disease are a significant component of their illness, which is a concerning statistic.

Substantiation

A person’s physical and mental well-being are influenced by their skin, and a disfiguring appearance is linked to body image concerns (Tomas-Aragones & Marron, 2014). Body image affects our feelings, thoughts, and behaviors in daily life, so promoting a positive body image is highly recommended for improving people’s quality of life, physical health, and health-related behaviors.

People with skin conditions are often reluctant to seek psychotherapy, and internet-based interventions are accessible and easy to disseminate. This makes them a feasible alternative for delivering therapy to this population. In general, internet-based interventions have been shown to be effective in addressing body image concerns. For my graduation project, I will work on chatbots that help people improve their body image. For this course, my aim is to find ways to make chatbots more empathic.

Human-computer interaction (HCI) would become much more natural if a computer could recognize and adapt to the emotional state of the human. Indeed, automated systems that detect human emotions can enhance HCI by allowing computers to customize and adapt their responses (Clavel, 2015). My goal in this study is to create a prototype that detects emotions, integrates them with a chatbot, and responds to the user through the chatbot.

Prototyping

Facial emotion detection

During prototyping, I first set out to learn computer vision and deep learning so that I could detect emotions from faces. Deep learning methods use machine learning models with multiple hidden layers. Trained on massive volumes of data, these models can learn more useful features and thus improve the accuracy of classification and prediction (Wang et al., 2014). I used Ian Goodfellow’s inspiring book “Deep Learning” to learn deep learning for this project. Convolutional neural networks (CNNs) are a category of neural networks specialized in tasks such as image recognition and classification. The Ekman emotion model is a common categorical model that defines six basic human emotions: anger, disgust, fear, happiness, sadness, and surprise (Kim, 2014). Ekman’s model has been used in a variety of experiments and in a number of systems that recognize emotional states from facial expressions.
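This sketch illustrates what a single convolutional layer computes. It is written in pure Python, so it is a toy and not how Keras implements it. A small kernel slides over the image (a stride-1 cross-correlation without padding). A ReLU then keeps the positive responses, and max pooling shrinks the resulting feature map. The 5×5 "image" and the edge-detecting kernel are made up for illustration.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as computed by CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window."""
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# Toy 5x5 grayscale "image": a bright vertical band in columns 2-3.
image = [[0, 0, 9, 9, 0] for _ in range(5)]
# Kernel that responds to dark-to-bright transitions from left to right.
kernel = [[-1, 1], [-1, 1]]

pooled = max_pool(relu(conv2d(image, kernel)))
print(pooled)  # [[18, 0], [18, 0]]
```

In a real CNN, the kernel values are learned during training rather than chosen by hand. Many such layers are stacked before a final classification layer.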

Dataset

The Facial Expression Recognition 2013 (fer2013) dataset was created by Pierre Luc Carrier and Aaron Courville. It was introduced in the facial expression recognition challenge of an ICML 2013 workshop (Goodfellow et al., 2013). In total, the dataset consists of 35,887 grayscale face images of 48×48 pixels. Each image is labeled with one of seven categories: the six Ekman emotions plus neutral. I used the fer2013 dataset to train the model for predicting facial emotions.

Example images from the fer2013 dataset
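The sketch below reads the dataset. It assumes the widely distributed Kaggle CSV layout of fer2013: an `emotion` column with labels 0 to 6, a `pixels` column holding 2,304 space-separated grayscale values, and a `Usage` column marking the Training, PublicTest, or PrivateTest split. The label order in `FER2013_LABELS` is an assumption based on that release. The one-row sample file is synthetic.

```python
import csv
import io

# Assumed label order of the Kaggle fer2013 release (index = value of the "emotion" column).
FER2013_LABELS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]
IMAGE_SIZE = 48  # every face is a 48x48 grayscale image


def parse_fer2013(csv_file):
    """Yield (label_name, 48x48 pixel grid, usage) tuples from a fer2013-style CSV."""
    for row in csv.DictReader(csv_file):
        values = [int(p) for p in row["pixels"].split()]
        if len(values) != IMAGE_SIZE * IMAGE_SIZE:
            raise ValueError(f"expected {IMAGE_SIZE * IMAGE_SIZE} pixels, got {len(values)}")
        # Reshape the flat pixel list into rows of 48 values.
        grid = [values[r * IMAGE_SIZE:(r + 1) * IMAGE_SIZE] for r in range(IMAGE_SIZE)]
        yield FER2013_LABELS[int(row["emotion"])], grid, row["Usage"]


# Synthetic one-row file with the same columns (pixel values are made up).
sample = "emotion,pixels,Usage\n3," + " ".join(["128"] * 2304) + ",Training\n"
label, grid, usage = next(parse_fer2013(io.StringIO(sample)))
print(label, len(grid), len(grid[0]), usage)  # happy 48 48 Training
```

With the real file, you would pass an open file handle instead of `io.StringIO`. You could then split the records by their `Usage` value into training and test sets.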

Implementing the model

After finding an appropriate dataset and carrying out exploratory data analysis, I had a pre-trained model for detecting emotions. I wrote the code with Keras, the open-source Python library that provides a simple high-level interface to TensorFlow.
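A classifier like this typically ends in a softmax layer over the seven fer2013 classes. The predicted emotion is then the class with the highest probability. This pure-Python sketch shows that last step. The `logits` values are hypothetical scores for one face, and the label order follows the fer2013 label order assumed earlier.

```python
import math

# Assumed fer2013 label order.
FER2013_LABELS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def softmax(logits):
    """Turn raw scores into probabilities; subtracting the max keeps exp() numerically stable."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits):
    """Return (label, probability) for the most likely emotion (argmax of the softmax)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return FER2013_LABELS[best], probs[best]


# Hypothetical final-layer scores for one detected face.
logits = [0.2, -1.0, 0.1, 2.5, 0.3, 0.0, 1.1]
label, p = predict_label(logits)
print(label, round(p, 2))
```

In Keras, the softmax is usually part of the model itself, and `argmax` is applied to the model's prediction. The sketch only makes the arithmetic explicit.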

Next, I used OpenCV and haarcascade_frontalface_default.xml to capture the webcam stream in real time and detect faces. Object detection using Haar feature-based cascade classifiers is an effective method proposed by Paul Viola and Michael Jones in their 2001 paper “Rapid Object Detection using a Boosted Cascade of Simple Features”. OpenCV comes with a trainer as well as a detector. If you want to train your own classifier for other objects, such as cars or planes, you can use OpenCV to create one. Its full details are given here:

I also used this tutorial to understand the face detection process in OpenCV with Haar cascades:

https://youtube.com/watch?v=WfdYYNamHZ8
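The Viola-Jones method is fast partly because of the integral image. After one pass over the image, the sum of any rectangle can be read with four lookups. This makes Haar-like features (differences between neighboring rectangle sums) cheap to evaluate at many positions. This is a minimal pure-Python sketch of that idea only. It does not cover the boosting or cascade stages, and it does not reflect OpenCV's internal code. The function names and sample images are illustrative.

```python
def integral_image(img):
    """ii[y][x] = sum of img over rows < y and columns < x (extra zero row/column)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle whose top-left pixel is (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: sum of the left half minus sum of the right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
ii = integral_image(img)
print(rect_sum(ii, 1, 1, 2, 2))  # 28 (= 5 + 6 + 8 + 9)

# A window that is bright on the left and dark on the right gives a strong response.
window = [[9, 9, 0, 0] for _ in range(4)]
print(two_rect_feature(integral_image(window), 0, 0, 4, 4))  # 72
```

In the full detector, AdaBoost selects a small set of such features, and a cascade of stages rejects non-face windows early. A trained cascade of this kind is what a file like haarcascade_frontalface_default.xml stores.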

I ran my code as a Flask app inside a virtual environment so that I could use my webcam in real time.

Recorded output, captured with the ZD Soft recorder (the video title comes from the recorder)

Reflection and user testing

Using facial emotion detection in my project has some limitations:

- We need a log of emotions over time to understand users’ emotions properly. Emotions detected within a few seconds cannot reveal how someone truly feels over a longer period.

- This project focuses on a vulnerable group, so we would need users’ permission to track their faces during the chat.
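The first limitation suggests keeping a running log of per-frame predictions instead of trusting a single frame. This is a minimal sketch under that assumption. `EmotionLog` is a hypothetical helper: it stores the most recent labels in a fixed-size window and reports the most frequent one together with its share. The window size and the majority rule are illustrative choices, not part of the prototype.

```python
from collections import Counter, deque


class EmotionLog:
    """Hypothetical helper: keep the last `window` per-frame labels and report the dominant one."""

    def __init__(self, window=30):
        # deque(maxlen=...) silently drops the oldest label when the window is full.
        self.labels = deque(maxlen=window)

    def add(self, label):
        self.labels.append(label)

    def dominant(self):
        """Most frequent label in the window and its share, or (None, 0.0) if empty."""
        if not self.labels:
            return None, 0.0
        label, count = Counter(self.labels).most_common(1)[0]
        return label, count / len(self.labels)


log = EmotionLog(window=5)
for frame_label in ["happy", "sad", "sad", "neutral", "sad", "sad"]:
    log.add(frame_label)
print(log.dominant())  # ('sad', 0.8): the early "happy" frame has left the window
```

Averaging the per-class probabilities over the window is a common alternative to counting labels. It keeps information about how confident each prediction was.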

I therefore decided to also detect emotions from text, using text-based sentiment analysis.

Text-based emotion detection

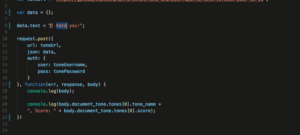

The code for experimenting with the Tone Analyzer API


The response of the code: anger is detected
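To use the analyzer's result in a chatbot, the JSON response has to be reduced to a single emotion. This sketch assumes the Tone Analyzer v3 response shape, in which `document_tone.tones` is a list of objects with `score`, `tone_id`, and `tone_name`. It also assumes that `anger`, `fear`, `joy`, and `sadness` are the emotional tone ids, as opposed to language tones such as `tentative`. The sample response is made up and is not real output from the service. The threshold is an illustrative parameter.

```python
import json

# Assumed emotional tone ids (language tones such as "analytical" are ignored).
EMOTION_TONES = {"anger", "fear", "joy", "sadness"}


def strongest_emotion(response_json, threshold=0.5):
    """Return (tone_id, score) of the highest-scoring emotional tone, or None."""
    data = json.loads(response_json)
    tones = data.get("document_tone", {}).get("tones", [])
    emotions = [
        t for t in tones
        if t.get("tone_id") in EMOTION_TONES and t.get("score", 0) >= threshold
    ]
    if not emotions:
        return None
    best = max(emotions, key=lambda t: t["score"])
    return best["tone_id"], best["score"]


# Made-up response in the assumed v3 shape.
sample = json.dumps({
    "document_tone": {
        "tones": [
            {"score": 0.82, "tone_id": "anger", "tone_name": "Anger"},
            {"score": 0.61, "tone_id": "tentative", "tone_name": "Tentative"},
        ]
    }
})
print(strongest_emotion(sample))  # ('anger', 0.82)
```
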

Reflection

In my experiments, Tone Analyzer detected emotions in text with high accuracy. To integrate this technology with IBM Watson, I found a tutorial on GitHub. However, the tutorial was made in 2019, and the environment it describes is completely different from today’s Tone Analyzer environment.

Experimenting with IBM Watson Assistant

To experiment with IBM Watson Assistant, I followed the tutorial provided during the course. I filled in intents and entities based on a scenario I wrote after an interview with a friend who is a psychologist, as well as on what I found during my research.
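This toy sketch shows how intents, entities, and a detected emotion could work together to choose a more empathic reply. It is not the Watson Assistant API. Intents are matched by keyword overlap, entities by lookup, and the reply is chosen by the (intent, emotion) pair, falling back to an emotion-neutral reply. Every intent name, keyword, entity value, and reply below is hypothetical. The emotion string is assumed to come from a text analyzer, for example `"sadness"`.

```python
# All intents, keywords, entities, and replies here are hypothetical examples.
INTENTS = {
    "appearance_worry": {"look", "stare", "ugly", "mirror", "scar"},
    "greeting": {"hi", "hello", "hey"},
}
ENTITIES = {"body_part": {"face", "arm", "hand", "neck"}}

REPLIES = {
    ("appearance_worry", "sadness"): "It sounds like this has been weighing on you. What happened?",
    ("appearance_worry", None): "Tell me more about how you feel about your {body_part}.",
    ("greeting", None): "Hello! How are you feeling today?",
}
FALLBACK = "I'm here to listen. Could you tell me a bit more?"


def tokenize(text):
    """Lowercase words with surrounding punctuation stripped."""
    return [w.strip(".,!?").lower() for w in text.split()]


def detect_intent(tokens):
    """Pick the intent sharing the most keywords with the message, or None if none match."""
    scores = {name: len(keywords.intersection(tokens)) for name, keywords in INTENTS.items()}
    name, score = max(scores.items(), key=lambda kv: kv[1])
    return name if score > 0 else None


def detect_entities(tokens):
    """Map each entity type to a matching word found in the message."""
    return {etype: w for etype, values in ENTITIES.items() for w in tokens if w in values}


def respond(text, emotion=None):
    """Prefer a reply tailored to (intent, emotion), then (intent, None), then a fallback."""
    tokens = tokenize(text)
    intent = detect_intent(tokens)
    entities = detect_entities(tokens)
    template = REPLIES.get((intent, emotion)) or REPLIES.get((intent, None)) or FALLBACK
    return template.format(body_part=entities.get("body_part", "skin"))


print(respond("Everyone will stare at my arm", emotion="sadness"))
print(respond("Everyone will stare at my arm"))
```

Watson Assistant trains intent classifiers from example utterances rather than matching keywords. The point here is only the last step: the same intent can receive a different reply depending on the detected emotion.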

Conclusion

References

British Association of Dermatologists (2012). Working Party Report on Minimum Standards for Psycho-Dermatology Services. http://www.bad.org.uk/shared/get-file.ashx?itemtype=document&id=1622.

Tomas-Aragones, L., & Marron, S. (2014). Body Image and Body Dysmorphic Concerns. Acta Dermato-Venereologica. https://doi.org/10.2340/00015555-2368

Clavel, C. (2015). Surprise and human-agent interactions. Rev. Cogn. Linguist. 13(2), 461–477

Kim, Y. (2014). Convolutional neural networks for sentence classification. arXiv:1408.5882

Wang, W., Yang, J., Xiao, J., Li, S., Zhou, D. (2014). Face recognition based on deep learning. In: International Conference on Human Centered Computing, pp. 812–820. Springer International Publishing.

Goodfellow, I.J., Erhan, D., Carrier, P.L., Courville, A., Mirza, M., Hamner, B., Zhou, Y. (2013). Challenges in representation learning: A report on three machine learning contests. In: International Conference on Neural Information Processing, pp. 117–124. Springer, Heidelberg.