PREMISE

Detecting body language during online interviews using deep learning for image classification.

SYNOPSIS

Recruiting is a more complex activity than most candidates think. It is not only about giving the “right” answers to questions about the job post; it is about letting the recruiter get to know you well enough to offer you the job. When I discussed this with an HR expert, the head of recruitment at Ginovi GmbH in Frankfurt, she emphasized how essential face-to-face communication remains in the digital world. Graduates often believe that if they say the right things during a job interview, their chances of getting the job are high. We rehearse our answers to interview questions but often forget that our body movements communicate just as our answers do. Since many recruiters believe that body language can make or break a candidate's job prospects, I will prototype a tool that helps candidates become more aware of their body language during an interview.

PREFACE

The digital age has contributed to short attention spans, especially among young people. As graduates enter the workforce, interviewers form a first impression of them within the first few minutes of a meeting, often before they say a word, purely through their body language. Recruiters tend to perceive and interpret body language rapidly and accurately, and because these cues are often the product of an unconscious process, they are difficult to fake (Knapp & Hall, 2009). For instance, applicants who show more immediacy nonverbal behavior (i.e., eye contact, smiling, body orientation toward the interviewer) are perceived as more hirable, competent, motivated, and successful than applicants who do not (Imada & Hakel, 1977). In addition, successful applicants display fewer unfavorable gestures, make more direct eye contact, smile more, and nod more during the job interview than applicants who are rejected (Forbes & Jackson, 1980).

Building on this, I also looked into how machine learning can help analyze nonverbal behavior (Nguyen, Frauendorfer, & Schmid Mast, 2014). Such studies have focused on predicting interest (Wrede & Shriberg, 2003), dominance (Jayagopi, Hung, Yeo, & Gatica-Perez, 2009), emergent leadership (Sanchez-Cortes, Aran, Schmid Mast, & Gatica-Perez, 2012), and personality traits (Pianesi, Mana, Cappelletti, Lepri, & Zancanaro, 2008) from real-time data in small groups. My work, on the other hand, focuses on using automatic feature extraction and machine learning to infer social behavior during a job interview.

Will graduates ultimately be able to control their body language after using this prototype? Many argue that even if people are not always fully aware of their nonverbal behavior, they are still able to regulate it, especially for self-presentation purposes (Stevens & Kristof, 1995). Such awareness can give graduates who control their body language an edge in getting hired.

PROTOTYPE

The first step was to check how machine learning techniques have been used to automatically extract nonverbal cues. I tested several Convolutional Neural Network (CNN) models in Python using the TensorFlow library. For testing purposes, I uploaded several photos of people showing signs of frustration, dishonesty, boredom, and defensiveness. I then tested the models with a new set of photos of myself mimicking those head gestures and body postures. Although the results were fairly accurate, I wanted a real-time tool that records me continuously while I change gestures, instead of requiring me to upload a new photo of myself every time. Online tools such as Lobe are available, but Lobe does not show, in real time while the camera is open, how confident it is about each gesture class. For example, it shows whether you are bored or frustrated without showing the confidence percentage of that prediction. Still, it was a useful tool to test whether our concept works.
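What Lobe lacked here is a per-class confidence readout. A CNN classifier typically ends in a softmax layer (in Keras, `Dense(num_classes, activation="softmax")`), which turns raw per-class scores into probabilities that sum to 1; displaying those as percentages gives the real-time view described above. The following is a minimal plain-Python sketch, assuming the model returns one raw score (logit) per class; the class names and scores are illustrative, not output from the models tested here. Note that this is the model's confidence in a single prediction, not its accuracy.

```python
import math

# Illustrative class names; the real labels depend on how the model was trained.
CLASSES = ["Dishonest", "Frustrated", "Bored", "Defensive"]

def softmax(logits):
    """Convert raw per-class scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def confidence_report(logits, classes=CLASSES):
    """Return (class, percentage) pairs sorted from most to least likely."""
    probs = softmax(logits)
    pairs = [(c, round(100 * p, 1)) for c, p in zip(classes, probs)]
    return sorted(pairs, key=lambda cp: cp[1], reverse=True)

# Made-up scores for a single video frame.
for name, pct in confidence_report([2.0, 0.5, 0.1, -1.0]):
    print(f"{name:<10} {pct:5.1f}%")
```

In a live tool, this report would simply be recomputed for every frame captured from the camera.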

First Iteration

In the first iteration, I started with gestures that could appear during an online interview, focusing on the upper body. Based on research and HR blogs, I identified four main gestures, two related to poses and two related to hand movements on the face:

- Dishonest: Touching the nose

- Frustrated: Touching the neck

- Bored / not interested: Moving the head from side to side (side bending)

- Defensive: Crossed arms

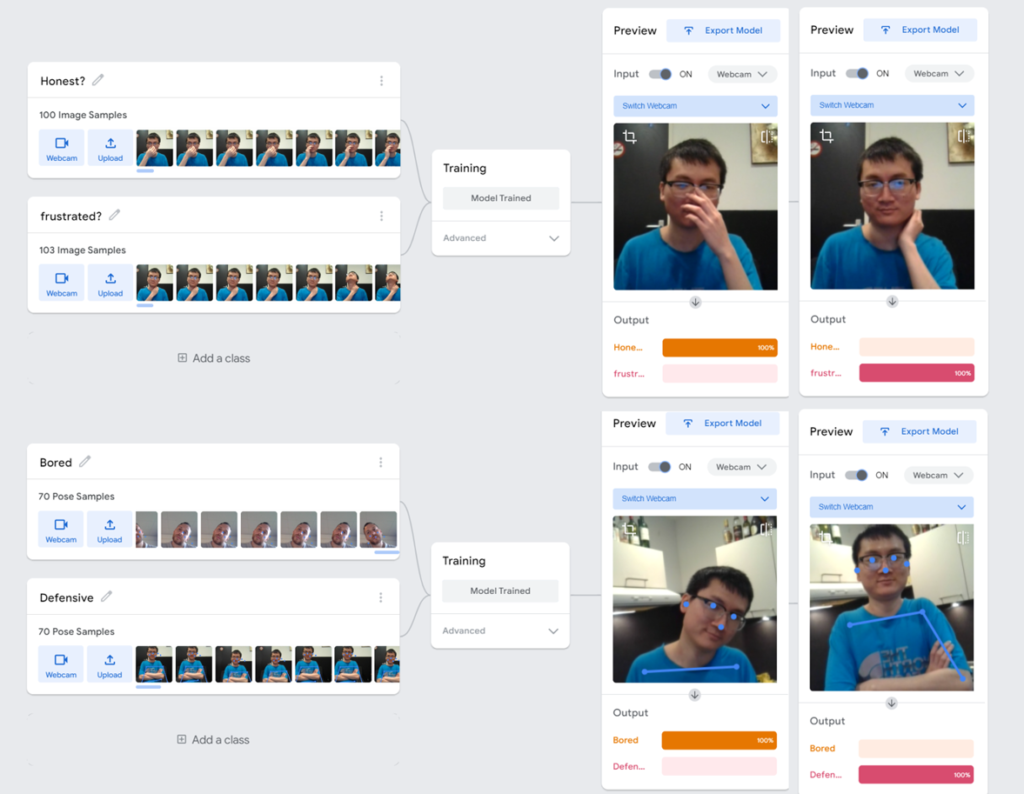

I then found a very interesting tool called “Teachable Machine” (Google, n.d.), a web application that makes it fast and easy to create machine learning models. I created two projects: one for the poses, trained on around 70 samples per class, and another for the facial gestures, trained on around 100 sample images per class.

Samples were taken from pictures of me and of other students in the final stages of their university studies who will graduate soon. I chose participants of different nationalities (Spain, Germany, Lebanon, Turkey, Poland, Vietnam) to diversify the training data.

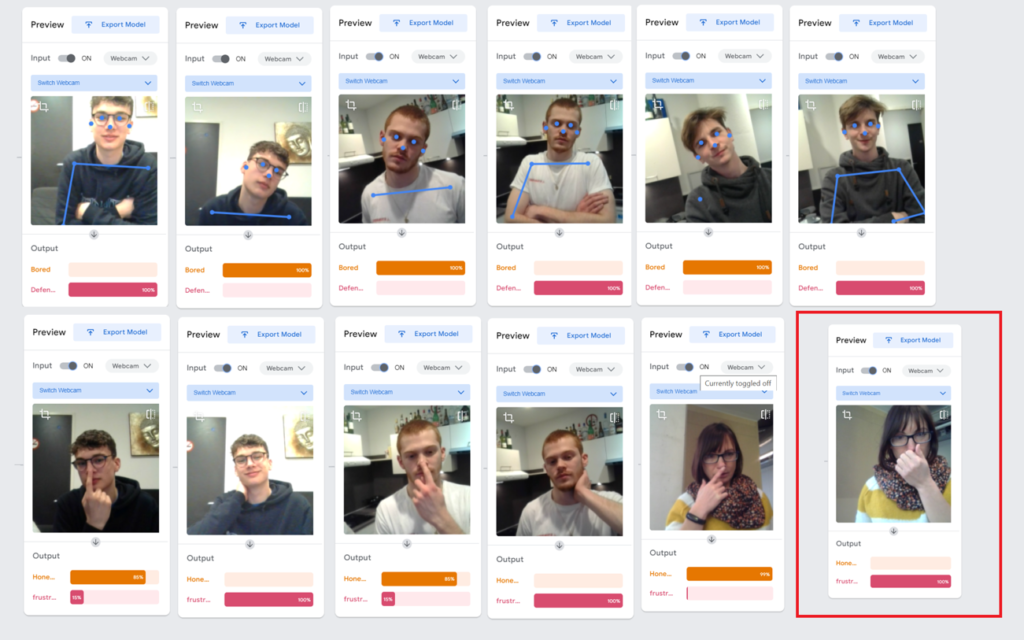

For a first attempt, the model seemed to work very well. The next step was to test it on users outside our training data set.

As shown, it worked well for all attempts except one (in the red box), probably because the algorithm had not been trained on similar data (a woman wearing a scarf and sunglasses). To solve this, we added this volunteer's gestures to our training data, after which the algorithm predicted the results correctly.
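Testing on people who are not in the training set is a held-out evaluation. Breaking the results down per class, and listing the individual misses, shows where more training data is needed, as happened with the volunteer above. This is a minimal sketch; the tester IDs and labels are hypothetical, not the actual test results.

```python
from collections import Counter

def per_class_accuracy(y_true, y_pred):
    """Fraction of correct predictions for each true class."""
    totals = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {cls: correct[cls] / n for cls, n in totals.items()}

def misclassified(sample_ids, y_true, y_pred):
    """IDs of samples the model got wrong: candidates to add to the training data."""
    return [s for s, t, p in zip(sample_ids, y_true, y_pred) if t != p]

# Hypothetical results from testers who were not in the training set.
ids = ["tester1", "tester2", "tester3", "tester4"]
y_true = ["Dishonest", "Frustrated", "Defensive", "Dishonest"]
y_pred = ["Dishonest", "Frustrated", "Defensive", "Bored"]

print(per_class_accuracy(y_true, y_pred))
print(misclassified(ids, y_true, y_pred))
```

Once a missed sample is moved into the training data, it no longer measures generalization, so a fresh set of testers is needed to confirm that the fix works beyond that one person.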

Second Iteration

At this stage, we needed a single application with multiple classes instead of two separate applications (one for head pose and another for face/hand expressions).

To do this, we included three classes in one app, with around 90 training images per class:

- Bored: Head bending

- Insincere: Touching the nose

- Defensive: Crossing the arms

This made it more convenient to combine head and facial body language in one interface. I decided not to include the “Frustrated” class yet, until it has been tested with users.
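Merging the two projects means pooling images that were collected at different rates (around 70 per class for poses, around 100 per class for facial gestures) into one set of roughly 90 per class. The text does not say how the 90 images were chosen. As a minimal sketch, assuming the images are stored as lists of file paths grouped by class (a hypothetical layout), random subsampling with a fixed seed caps each class at the same size. This is a preprocessing step one could run before uploading, not a Teachable Machine feature.

```python
import random

def balance_classes(images_by_class, per_class=90, seed=0):
    """Keep at most `per_class` randomly chosen images from each class."""
    rng = random.Random(seed)  # fixed seed so the same subset is chosen every run
    balanced = {}
    for cls, paths in images_by_class.items():
        if len(paths) <= per_class:
            balanced[cls] = list(paths)  # keep everything when a class is small
        else:
            balanced[cls] = rng.sample(paths, per_class)
    return balanced

# Hypothetical pool of file names after merging the two projects.
pool = {
    "Bored": [f"bored_{i:03d}.jpg" for i in range(120)],
    "Insincere": [f"nose_{i:03d}.jpg" for i in range(100)],
    "Defensive": [f"arms_{i:03d}.jpg" for i in range(70)],
}
subset = balance_classes(pool)
print({cls: len(paths) for cls, paths in subset.items()})
```

Classes below the cap keep all their images. Recording more samples for them is the alternative if the imbalance turns out to bias predictions.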

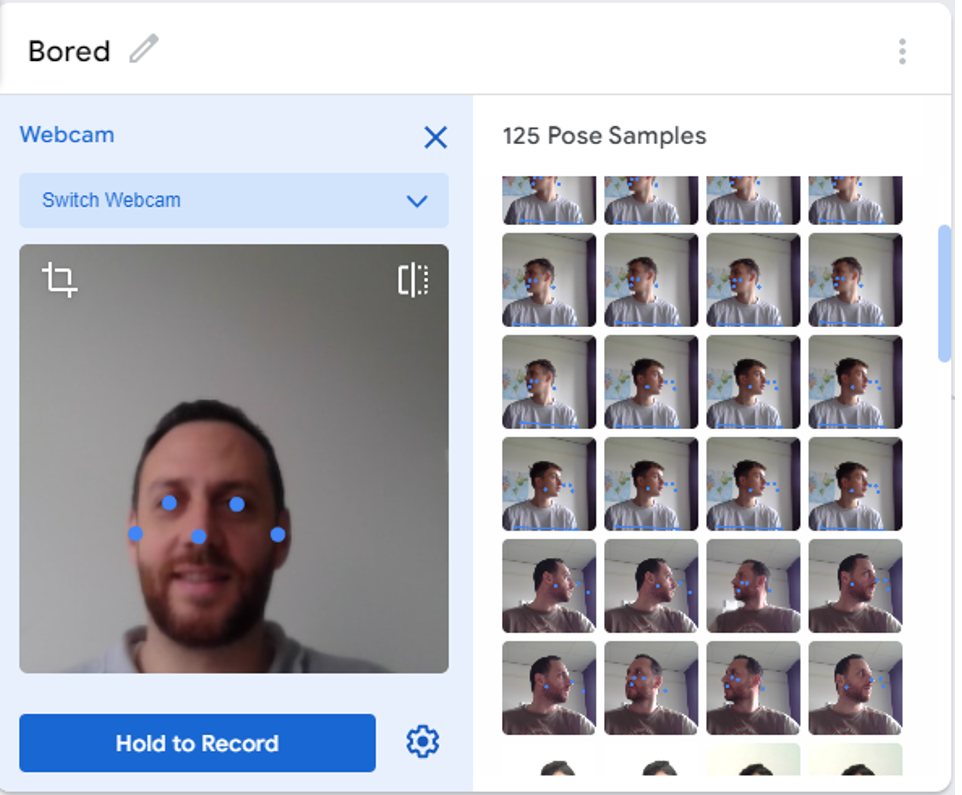

At this stage, it was necessary to dig deeper into these gestures and verify how graduates behave when they are bored during an interview. For that, I ran a small experiment with several students: in one-to-one sessions, I read them a very boring article for 10 minutes and recorded their body language to see how each one reacted. The students were of different nationalities: German, French, Polish, Russian, and Spanish.

We noticed that some students expressed boredom not only by bending their head, as assumed above, but also by moving it from side to side (looking away from the camera). Accordingly, we added this posture to our “Bored” class.
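Because several observed gestures can now feed the same class, it helps to keep an explicit mapping from what was recorded to the label the model is trained on. This is a minimal sketch, assuming each recorded image is tagged with the gesture it shows. The tag names and file names are hypothetical, and the mapping covers only the gesture classes defined so far.

```python
from collections import Counter

# Hypothetical gesture tags (what was recorded) mapped to training class labels.
GESTURE_TO_CLASS = {
    "head_bending": "Bored",
    "looking_away_side_to_side": "Bored",  # posture added after the boredom experiment
    "touching_nose": "Insincere",
    "crossed_arms": "Defensive",
}

def build_manifest(recordings):
    """Turn (image_path, gesture_tag) pairs into (image_path, class_label) pairs."""
    return [(path, GESTURE_TO_CLASS[tag]) for path, tag in recordings]

def class_counts(manifest):
    """Count training images per class, e.g. to keep classes roughly balanced."""
    return Counter(label for _, label in manifest)

# Hypothetical tagged recordings.
recordings = [
    ("s1_001.jpg", "head_bending"),
    ("s2_014.jpg", "looking_away_side_to_side"),
    ("s3_007.jpg", "touching_nose"),
    ("s4_002.jpg", "crossed_arms"),
    ("s5_009.jpg", "looking_away_side_to_side"),
]
print(class_counts(build_manifest(recordings)))
```

An unknown tag raises `KeyError`, which flags unlabeled recordings early instead of silently dropping them.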

In addition, it made sense to add a “default” class so that the algorithm would not place a candidate's normal behavior (looking into the camera, smiling) into one of the three gesture categories. This brought the total to four classes.
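The default class is needed because image classifiers of this kind typically end in a softmax layer, whose outputs always add up to 100% across the known classes. A neutral frame must therefore still be assigned to some class. The sketch below illustrates this with invented scores, not outputs of the actual model. With only the three gesture classes, the neutral frame is labeled “Defensive”, while a trained default class can absorb it. The label name “Default” and all scores are assumptions.

```python
import math

def softmax(logits):
    """Convert raw per-class scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def predict(logits, classes):
    """Return the top class and its probability."""
    probs = softmax(logits)
    best = max(range(len(classes)), key=probs.__getitem__)
    return classes[best], probs[best]

three = ["Bored", "Insincere", "Defensive"]
four = three + ["Default"]  # hypothetical label for the neutral class

# Invented scores for a neutral frame (looking at the camera, smiling).
neutral_3 = [0.2, 0.1, 0.3]       # no gesture class fits well...
neutral_4 = [0.2, 0.1, 0.3, 2.5]  # ...but a trained default class can score high

print(predict(neutral_3, three))  # still forced to pick a gesture class
print(predict(neutral_4, four))
```

A confidence threshold on the top class is a complementary safeguard, but it does not replace training on real neutral examples.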

Third Iteration

At this stage, it was necessary to get the candidates engaged. I planned an interview-like session in which they spoke while answering three interview questions, lying in one of their answers. I recorded their body language and afterwards asked them to reveal which answer was the lie. This gave me visibility into how each of them behaves while being dishonest and how they unconsciously express it.

As seen above, dishonesty can be expressed in multiple forms, differing across genders and cultures. In particular, we found that the female participants either scratched their heads or touched their ears while lying in one of their answers. Touching or rubbing the head and scratching the ears are self-soothing gestures that may happen when a person feels nervous or anxious (Van Edwards, 2019). Having a hard time looking at the camera is another sign of stress while lying. Accordingly, we added a fifth class, “Stressed”, and included those two gestures in its training data. We then tested it all, as seen below:

Going forward, and in order to encode the relational characteristics of nonverbal behavior, I combined audio and visual cues. Based on our observations and participants’ feedback, it is vital to interpret body language in the context of the respondent’s answers and tone. For example, while a candidate could control their body language when lying, their answers at that moment might make no logical sense. For this reason, we included an automatic transcription tool that, once the interview video is uploaded, shows what the candidate was saying whenever they made one of the defined gestures.

Through this, we can interpret body language in relation to the candidate’s answers and tone. This shows candidates how and where they made a specific body movement in relation to their answers.
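The transcription tool is not named in the text. As a minimal sketch of the alignment step, assume the transcript arrives as timestamped segments (start, end, text) and the gesture classifier produces timestamped frame predictions. The data classes, the 0.6 confidence threshold, the “Default” label and the sample sentences are all hypothetical. Each confident detection that is not the default class is attached to the transcript segment during which it occurred:

```python
from dataclasses import dataclass

@dataclass
class Segment:
    start: float  # seconds into the video
    end: float
    text: str

@dataclass
class Detection:
    time: float        # seconds into the video
    label: str         # predicted gesture class
    confidence: float  # softmax probability of that class

def align(detections, segments, min_conf=0.6):
    """Attach each confident, non-default detection to the segment it falls in."""
    results = []
    for d in detections:
        if d.label == "Default" or d.confidence < min_conf:
            continue  # skip neutral frames and uncertain predictions
        for seg in segments:
            if seg.start <= d.time < seg.end:
                results.append((d.time, d.label, seg.text))
                break
    return results

# Hypothetical transcript and detections.
segments = [
    Segment(0.0, 5.0, "I have three years of experience."),
    Segment(5.0, 9.0, "I left because I wanted new challenges."),
]
detections = [
    Detection(2.0, "Default", 0.9),
    Detection(6.5, "Insincere", 0.82),
    Detection(7.0, "Stressed", 0.4),
]
for t, label, text in align(detections, segments):
    print(f"{t:5.1f}s  {label:<10} {text}")
```

A linear scan is enough for an interview-length video. For long recordings, sorting the segments by start time and using `bisect` would find each segment in logarithmic time.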

REFLECTION

Candidates' reactions differed by gender and culture. While candidates from France, Spain, and Russia showed more expressive body language that was easier to interpret, students from Germany and Poland showed more control over their gestures. This cannot be generalized; many more studies are needed to gain reliable insights. Finally, while the prototype works well in relatively normal situations, the model struggles with facial cues such as eye contact.

CONCLUSION

The prototype helps students become aware of their body language and send a positive message to recruiters by eliminating negative gestures. Future work could include positive gestures such as direct eye contact, sitting up straight, smiling, and nodding. In addition, text analysis could be added to analyze candidates' answers as they respond to questions, so that the tool analyzes not only body language but also the tone of the answers.

REFERENCES

Edinger, J., & Patterson, M. (1983). Nonverbal involvement and social control. Psychological Bulletin, 30–56.

Forbes, R., & Jackson, P. (1980). Non-verbal behaviour and the outcome of selection interviews. Journal of Occupational Psychology, 65–72.

Gatewood, R., Feild, H., & Barrick, M. (2011). Human resource selection. Mason, 1-6.

Gatica-Perez, D. (2009). Automatic nonverbal analysis of social interaction in small groups. Image and Vision Computing, 1775–1787.

Google. (n.d.). Teachable Machine. Retrieved from https://teachablemachine.withgoogle.com/

Imada, A., & Hakel, M. (1977). Influence of nonverbal communication and rater proximity on impressions and decisions in simulated employment interviews. Journal of Applied Psychology, 295–300.

Jayagopi, D., Hung, H., Yeo, C., & Gatica-Perez, D. (2009). Modeling dominance in group conversations using nonverbal activity cues. IEEE Transactions on Audio, Speech, and Language Processing, 501–513.

Knapp, M., & Hall, J. (2009). Nonverbal communication in human interaction. Wadsworth, Cengage Learning, 7th edition.

Nguyen, L., Frauendorfer, D., & Schmid Mast, M. (2014). Hire me: Computational inference of hirability in employment interviews based on nonverbal behavior. IEEE Transactions on Multimedia, 2–5. Retrieved from https://www.researchgate.net/publication/262344028

Pianesi, F., Mana, N., Cappelletti, A., Lepri, B., & Zancanaro, M. (2008). Multimodal recognition of personality traits in social interactions. Int’l Conf. on Multimodal Interaction (ICMI).

Sanchez-Cortes, D., Aran, O., Schmid Mast, M., & Gatica-Perez, D. (2012). A nonverbal behavior approach to identify emergent leaders in small groups. IEEE Transactions on Multimedia, 816–832.

Stevens, C., & Kristof, A. (1995). Making the right impression: A field study of applicant impression management during job interviews. Journal of Applied Psychology, 587–606.

Van Edwards, V. (2019). 7 Ways Body Language Will Give You Away – Ear Body Language. Retrieved from Science of People: https://www.scienceofpeople.com/ears-body-language/

Wrede, B., & Shriberg, E. (2003). Spotting “hot spots” in meetings: Human judgments and prosodic cues. Proc. Eurospeech.