Premise

To what extent can computer vision lower the situational and attitudinal barriers to therapeutic journaling for young female adults who seek to maintain or develop a healthy mental state?

Synopsis

With the rise of mental health issues, young female adults (such as myself) are increasingly aware of the importance of maintaining a healthy mental state (Choudhry et al., 2016). Therapeutic journaling, as well as computer vision and photography, holds potential for supporting this. This study combines these opportunities and explores, across 3 prototype iterations, whether they can make journaling more efficient and accessible by lowering its barriers. The goal is to identify potential challenges, substantiate the concept with potential users and become familiar with the computer vision features that might be relevant to this problem space.

Substantiation

Mental health conditions are increasing worldwide, especially among women. Differences in hormone profiles affect their risk of mental health disorders, which makes women a population of interest (Zender & Olshansky, 2009). Awareness is rising; however, mental health care is still a neglected area in developed countries (Choudhry et al., 2016). The clinical workforce is not able to meet the new demand, and attitudinal barriers such as stigma toward professional treatment lead to a preference for self-help (Wasil et al., 2021).

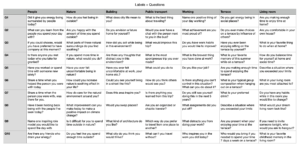

This study approaches journal writing as a method to increase access to self-care for the mental state. Journal writing is a mechanism to capture, interpret, and analyze personal experiences in order to facilitate reflection, learning, and interpersonal development (Kelley et al., 2015). It is practiced both in professional treatment and as self-care. Women, however, experience attitudinal barriers (self-doubt, lack of trust in the process, and fear of exposure) and situational barriers (circumstances that can impede participation, such as time) when journaling. Both kinds of barrier are exacerbated by self-doubt and fear, which can be eased by offering specific exercises or guiding questions (Kelley et al., 2015).

Young adults are likely to use their (smart)phone daily, among other things to capture and hoard images that preserve everyday moments (Richmond, 2022). Moreover, they show interest in, and actively use, online technologies to seek information and help about their mental health (Naslund & Aschbrenner, 2021). This study explores the potential of using images and computer vision to overcome the previously discussed barriers of journal writing. Computer vision cannot extract all personal emotional ties. However, images can transport us back to a moment in time (Richmond, 2022). Moreover, computer vision can extract relevant information from images by detecting, for example, places and colors (Swain & Ballard, 2002). The experiments in this study therefore help to confirm or discard these suggested opportunities.

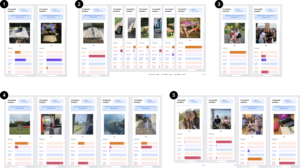

Iteration 1

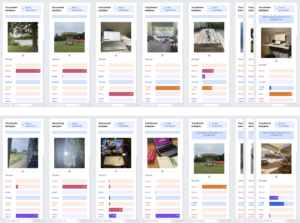

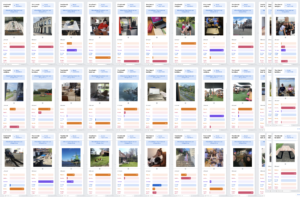

This first prototype is made in Teachable Machine, a web-based tool for machine learning, which was chosen for its accessibility. The goal is to test different features the model could classify. Here, the emphasis is on distinguishing between multiple environments. An existing dataset is used, complemented with images scraped from Google to increase size and variety.

Insights

- Difficulties with edge features: the attraction (1) is classified as nature because of its organic edges. Edge features probably also confuse the classification of ‘living room’, ‘terrace’ and ‘office’ because of the sharp edges of tables (2).

- Scene classification problem: the model has difficulties with high-level semantic concepts that describe an image as a whole. For example, it recognizes ‘humans’ in the image (3) instead of the overall scene.

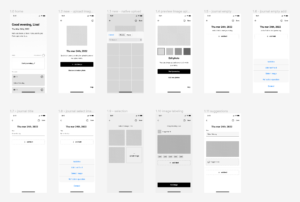

Additionally, the concept of using computer vision technology and photography is tested. This experiment includes a wireframe, tested with a convenience sample of 3 women. They shared 3 images similar to the trained labels in order to test the accuracy of the model. In addition, they chose the remaining 3 images themselves, to experience capturing their day freely.

Insights

- Accuracy increases trust in the process. However, including a level of control would (to a certain extent) compensate for any false suggestions.

- Flexibility is key. Capturing the day is attainable; however, users prefer that it is not the sole way to start this process (value of time). Moreover, being assigned a question that is correct but not of interest would be experienced as an obstacle, so personalization and control are mandatory.

- The value of technology supporting the process is acknowledged; however, attitudinal factors (fear) and situational factors (a moment to sit down) should not be forgotten.

Iteration 2

Iteration 2 is a follow-up on the insights gained in the first iteration. The edge and scene classification problems are addressed by filling data gaps to give more weight to certain use cases. For example, more images with fewer straight edges are added to labels such as living room and terrace. Additionally, more images containing people are added to all labels to balance them with the public transport label.

Insights

- Improved nuance and overall semantics because of variety (1,3,5).

- Selection bias (2): the dataset used for training the model might be skewed because of a gap in the variety of table features. This shows in the model, which does not always recognize picnic tables.

- Remaining difficulties (5): composition lines still sustain the scene classification problem. Moreover, data gaps trouble the model with an unclear distinction between “table settings” and the framing of a scene.
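
The selection bias and data gaps noted above suggest checking how evenly the labels are represented before retraining. The following is a minimal sketch in Python, assuming the training images are available as a list with one label name per image. The image counts and the 50% threshold are hypothetical and are not taken from the dataset used in this study.

```python
from collections import Counter


def label_counts(labels):
    """Count how many training images each label has."""
    return Counter(labels)


def underrepresented(counts, ratio=0.5):
    """Return labels with fewer than `ratio` times the images of the largest label."""
    if not counts:
        return []
    largest = max(counts.values())
    return sorted(label for label, n in counts.items() if n < ratio * largest)


# Hypothetical example: one label string per training image.
labels = (
    ["living room"] * 40
    + ["terrace"] * 12
    + ["office"] * 35
    + ["public transport"] * 38
)
counts = label_counts(labels)
print(underrepresented(counts))  # labels that may need more (varied) images
```

A count like this only reveals gaps in quantity. Gaps in variety, such as missing table types or framings, would still need a manual review of the images per label.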

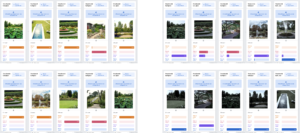

Another follow-up is to explore the interaction and the concept of using “stock photos” to increase flexibility. For this, I tested combining 2 labels to increase generality and to detect the overall semantics of a scene. New data for the labels nature and building was added. Additionally, transparency and attitudinal barriers were discussed with users by showing the labels in wireframes.

Insights

- Including images with nature and buildings has improved the nuance in classification. The remaining obstacles to higher accuracy are most probably related to selection and measurement bias. Adding data for framing, house type and size might tackle this problem. In general, it is thus important to enlarge the representative dataset and, in future work, to deliberately try to confuse the model in order to map out where it struggles.

- Users prefer to have some control over the process of extracting information from images. However, time is essential, and therefore giving feedback is preferred over making adjustments.

Iteration 3

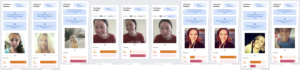

The third iteration explores features that could guide the questions by detecting mood and mental state from images. First, the goal is to see how the model reacts to different HSV values. A subset of nature-labeled images was duplicated with 4 unique HSV levels to explore classification by color features. Users were asked to capture 1 selfie, 1 group photo and 1 image of choice (incl. HSV choice) and to share these prior to the test. These images were tested together with the researcher in a walkthrough.
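
Duplicating an image at several HSV levels can be sketched as follows. This is an illustration using only Python's standard `colorsys` module, assuming an image is represented as rows of RGB tuples with values from 0 to 255. The four variant names and their settings are hypothetical; the study does not state which HSV values were used.

```python
import colorsys


def shift_hsv(pixel, hue_shift=0.0, sat_scale=1.0, val_scale=1.0):
    """Return an RGB pixel (ints 0-255) after adjusting it in HSV space."""
    r, g, b = (channel / 255.0 for channel in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + hue_shift) % 1.0                 # hue wraps around the color wheel
    s = min(1.0, max(0.0, s * sat_scale))     # keep saturation within [0, 1]
    v = min(1.0, max(0.0, v * val_scale))     # keep value within [0, 1]
    r2, g2, b2 = colorsys.hsv_to_rgb(h, s, v)
    return (round(r2 * 255), round(g2 * 255), round(b2 * 255))


# Hypothetical settings for 4 variants (not the values used in the study).
VARIANTS = {
    "muted": {"sat_scale": 0.4},
    "vivid": {"sat_scale": 1.6},
    "dark": {"val_scale": 0.6},
    "warm_shift": {"hue_shift": -0.05},
}


def make_variants(image):
    """Create one adjusted copy of `image` (rows of RGB tuples) per variant."""
    return {
        name: [[shift_hsv(pixel, **settings) for pixel in row] for row in image]
        for name, settings in VARIANTS.items()
    }


# Tiny 1x2 example image: one red pixel and one gray pixel.
example = [[(255, 0, 0), (100, 100, 100)]]
variants = make_variants(example)
print(sorted(variants))
```

Each variant could then be uploaded under its own label to see whether the classifier separates images by color features alone.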

Insights

- Context of photography: natural contrast and lighting (from weather situations) make every image different. As a result, some images look quite alike even though they have a different filter. This could potentially be solved by a bigger contrast between the “filters” or by equalizing every image.

- Give users limited options: in order to decrease choice and information overload and thereby lower situational barriers.

- A strategy is needed to separate mental health signals from other objectives.

- While this feature increases trust in the process, fear of exposure and privacy concerns increase.
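
One possible reading of “equalizing every image” in the first insight above is histogram equalization of brightness, which spreads the brightness values of each image over the full range before a filter is applied. The sketch below is an assumption about how this could be done, not the method used in the study. It works on a grayscale image given as rows of integers from 0 to 255.

```python
def equalize(gray):
    """Histogram-equalize a grayscale image (rows of ints in 0..255)."""
    flat = [pixel for row in gray for pixel in row]
    if not flat:
        return [list(row) for row in gray]

    # Histogram: how often each brightness level occurs.
    hist = [0] * 256
    for pixel in flat:
        hist[pixel] += 1

    # Cumulative distribution of the brightness levels.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    n = len(flat)
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:
        # Constant image: there is nothing to spread out.
        return [list(row) for row in gray]

    # Lookup table mapping each old level to its equalized level.
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * 255)) for c in cdf]
    return [[lut[pixel] for pixel in row] for row in gray]


# A low-contrast 2x2 example is stretched to the full 0..255 range.
print(equalize([[10, 20], [30, 40]]))
```

For color photos, the same function could be applied to the V channel after converting to HSV, so that hue and saturation stay untouched.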

Secondly, a new dataset with images of selfies, group images and facial expressions is explored to detect mood with the main aim to map future challenges. The dataset included a mix of self-captured selfies with the camera feature and scraped images from the internet.

Insights

- Occlusion confuses the model.

- Results are difficult to track continuously when the subject is moving, which makes it even more difficult to get accurate output when using the camera.

- Incomplete emotions: the camera feature confuses the model with movements and incomplete actions as input.

- While this feature increases trust in the process, fear of exposure and privacy concerns increase.

Conclusion

The prototype results suggest that users might overcome situational barriers provided that the input type is flexible and choices are limited. In addition, attitudinal barriers (fear of exposure) could occur while journaling in public or when engaging with features that feel personal, such as emotion detection. Moreover, further research is necessary to understand the user’s perceptions of image classification in relation to guided questions and occasional suggestiveness. According to these insights, control, privacy and transparency are therefore paramount values.

Furthermore, implementing a computer vision model requires acknowledging abstract scenery and the familiar scene classification problem, with an emphasis on including a variety of images with edge, texture and color features. Additionally, data gaps and occlusions will influence accuracy (e.g. framing and incomplete emotions). Finally, the strategy should account for the biases that are present in this research and may recur in further research (e.g. selection bias). Thus, a clear and critical scope is required to successfully carry out further development.

References

Choudhry, F. R., Mani, V., Ming, L., & Khan, T. M. (2016). Beliefs and perception about mental health issues: a meta-synthesis. Neuropsychiatric Disease and Treatment, 12, 2807–2818. https://doi.org/10.2147/ndt.s111543

Eckstein, M. P. (2011). Visual search: A retrospective. Journal of Vision, 11(5), 14. https://doi.org/10.1167/11.5.14

Kelley, H. M., Cunningham, T., Branscome, J., & ACA Conference. (2015). Reflective Journaling With At-Risk Students. https://www.counseling.org/knowledge-center/vistas/by-year2/vistas-2015/docs/default-source/vistas/reflective-journaling-with-at-risk-students

Khan, A. I., & Al-Habsi, S. (2020). Machine Learning in Computer Vision. Procedia Computer Science, 167, 1444–1451. https://doi.org/10.1016/j.procs.2020.03.355

Naslund, J. A., & Aschbrenner, K. A. (2021). Technology use and interest in digital apps for mental health promotion and lifestyle intervention among young adults with serious mental illness. Journal of Affective Disorders Reports, 6, 100227. https://doi.org/10.1016/j.jadr.2021.100227

Pennebaker, J. W., & Beall, S. K. (1986). Confronting a traumatic event: Toward an understanding of inhibition and disease. Journal of Abnormal Psychology, 95(3), 274–281. https://doi.org/10.1037/0021-843x.95.3.274

Peterson, E. A., & Jones, A. M. (2001). Women, journal writing, and the reflective process. New Directions for Adult and Continuing Education, 2001(90), 59. https://doi.org/10.1002/ace.21

Reece, A. G., & Danforth, C. M. (2017). Instagram photos reveal predictive markers of depression. EPJ Data Science, 6(1). https://doi.org/10.1140/epjds/s13688-017-0110-z

Richmond, S. (2022, March 3). How many photos do young people actually take? The Independent. Retrieved June 6, 2022, from https://www.independent.co.uk/life-style/photographs-young-people-poll-b2027607.html

Rodriguez-Villa, E., Rauseo-Ricupero, N., Camacho, E., Wisniewski, H., Keshavan, M., & Torous, J. (2020). The digital clinic: Implementing technology and augmenting care for mental health. General Hospital Psychiatry, 66, 59–66. https://doi.org/10.1016/j.genhosppsych.2020.06.009

Swain, M. J., & Ballard, D. H. (2002). Color indexing. Readings in Multimedia Computing and Networking, 265–277. https://doi.org/10.1016/b978-155860651-7/50109-1

Wasil, A. R., Palermo, E. H., Lorenzo-Luaces, L., & DeRubeis, R. J. (2021). Is There an App for That? A Review of Popular Apps for Depression, Anxiety, and Well-Being. Cognitive and Behavioral Practice. https://doi.org/10.1016/j.cbpra.2021.07.001

Zender, R., & Olshansky, E. (2009). Women’s Mental Health: Depression and Anxiety. Nursing Clinics of North America, 44(3), 355–364. https://doi.org/10.1016/j.cnur.2009.06.002

Zogan, H., Razzak, I., Wang, X., Jameel, S., & Xu, G. (2022). Explainable depression detection with multi-aspect features using a hybrid deep learning model on social media. World Wide Web, 25(1), 281–304. https://doi.org/10.1007/s11280-021-00992-2