Premise

An application that processes content created by divers to support marine species monitoring.

Synopsis

Every year underwater ecosystems decline under pressures such as climate change, overfishing and pollution. According to UNESCO, more than half of marine species may face extinction by the year 2100 (Facts and Figures on Marine Biodiversity | United Nations Educational, Scientific and Cultural Organization, n.d.). To help preserve these biodiverse environments, I wanted to make use of content created by divers to monitor species.

Introduction

A report from The World Wide Fund for Nature (2015) indicates a nearly 49% decline in marine life populations between 1970 and 2012. Over the last three decades, tropical reefs have lost more than half of their reef-building corals, and 25% of shark, ray and skate species are threatened with extinction (The World Wide Fund for Nature, 2015). One way to protect the underwater world is to detect harmful factors early, before the environment is fully damaged. PADI statistics indicate that the company certified 27 million divers between 1967 and 2018 (PADI, 2019). Many of them take underwater pictures and share them on social media. Analysing the uploaded content could help monitor existing species and classify new ones. Data collected from reefs is essential to better inform scientists, management and governments.

Research

Current ways of monitoring

There are multiple ways of monitoring reefs, including human observers, satellite-based remote sensing and fully autonomous vehicles with cameras. One of them is the manta tow, where an observer is dragged by a boat alongside the reef and makes a visual assessment each time the boat stops (Reef Monitoring Sampling Methods | AIMS, n.d.). Another method is the intensive SCUBA survey, where a scuba diver takes a picture every 50 m along the reef. The pictures are then analysed in a lab for coral density, coral bleaching, fish abundance and species diversity. The Global Ocean Observing System coordinates a collaborative system of observations in which all the data are collected by observing groups and specialists, which allows scientists to make comparisons. Local communities and volunteer divers are also involved in reef monitoring: in 2010 over 500 reefs were surveyed, and the survey is being repeated in 2020 and 2021 (2020 Lap of Australia, 2020).

Image recognition experiments

Pictures taken by divers are very often uploaded to portals such as Facebook and Instagram. I wanted to use image recognition to recognise the species and present the data over time. I conducted experiments in which I tried to teach an algorithm to recognise species such as:

- Tiger Shark

- Giant Manta Ray

- Lionfish

- Blue spotted ribbontail ray

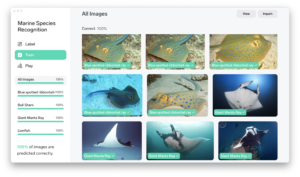

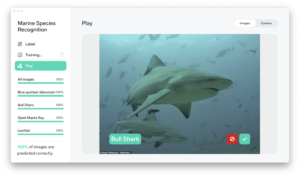

Lobe

Lobe quickly learned the species. To make the task trickier, I added a Bull Shark. A Bull Shark differs from a Tiger Shark in size, colour and the shape of the caudal fin. Even so, Lobe had no problem telling these two shark species apart.

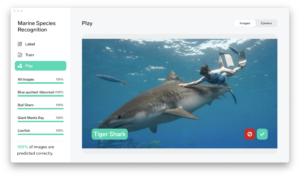

Teachable Machine

Teachable Machine worked as well as Lobe. The model trained on this small data set worked, and its prediction was correct. Teachable Machine can export the model as code snippets, which can then be used in a project.

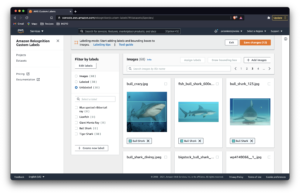

AWS Amazon Rekognition Custom Labels

Amazon offers recognition of objects with custom labels. It is interesting because it can handle multiple objects in one picture. Preparing the data set takes more time, as the user needs to assign labels and draw bounding boxes on every picture. Training the model is charged per hour, so I tried it out with only two species and 30 pictures per species; with such a small data set the model reached an accuracy of only 0.40.
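
The labelling effort described above can be illustrated with a small sketch. It assumes a hypothetical in-memory structure for annotated pictures (the class names `BoundingBox` and `AnnotatedImage` and the file names are made up for illustration; this is not the manifest format that Amazon Rekognition uses). It shows how one picture can carry several labelled boxes and how to count the boxes per species to check that the data set is balanced before paying for training.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class BoundingBox:
    """One labelled object; coordinates are fractions of the image size (assumed convention)."""
    label: str
    left: float
    top: float
    width: float
    height: float


@dataclass
class AnnotatedImage:
    """A picture together with every object labelled on it."""
    filename: str
    boxes: List[BoundingBox] = field(default_factory=list)


def boxes_per_label(images):
    """Count how many bounding boxes exist for each species label."""
    return Counter(box.label for image in images for box in image.boxes)


# Made-up example data: one picture contains two different species.
images = [
    AnnotatedImage("dive-001.jpg", [
        BoundingBox("Tiger Shark", 0.10, 0.20, 0.50, 0.30),
        BoundingBox("Lionfish", 0.70, 0.60, 0.15, 0.20),
    ]),
    AnnotatedImage("dive-002.jpg", [
        BoundingBox("Tiger Shark", 0.30, 0.25, 0.40, 0.35),
    ]),
]

counts = boxes_per_label(images)
for label, count in counts.items():
    print(f"{label}: {count} boxes")
```

A count like this makes it easy to spot a species that has far fewer examples than the others before the training starts.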

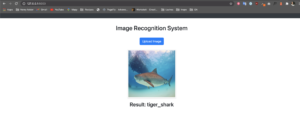

Web Based Image Recognition

I used open source code to create a website where a user can upload a picture and have it recognised; the code is based on Keras and Flask. As an example I picked a Tiger Shark.

Questions that appeared

- What if pictures are low quality? If a diver does not have good equipment, pictures might be too dark or too blue, which makes image recognition more difficult.

- What if pictures are taken from a different angle? For example, some sharks could easily be mistaken for one another if the picture shows them from the front.

- How to create a big data set? Pictures on Google still need to be verified; for example, some present a Tiger Shark as a Bull Shark. Is accessing pictures on Instagram possible (by hashtag, e.g. there are 147k posts with the tag #tigershark)?
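
The first question could be approached with a simple pre-check before a picture reaches the classifier. The sketch below is only an illustration: it assumes the picture is already available as a list of (R, G, B) tuples, and the thresholds `MIN_BRIGHTNESS` and `MAX_BLUE_RATIO` are hypothetical values that would have to be tuned on real dive photos.

```python
from statistics import mean

# Hypothetical thresholds, chosen for illustration only.
MIN_BRIGHTNESS = 60     # mean of the R, G, B channel means on a 0-255 scale
MAX_BLUE_RATIO = 0.45   # share of blue in the sum of the channel means


def assess_quality(pixels):
    """Return a list of warnings for an image given as (R, G, B) tuples."""
    red = mean(p[0] for p in pixels)
    green = mean(p[1] for p in pixels)
    blue = mean(p[2] for p in pixels)

    warnings = []
    if (red + green + blue) / 3 < MIN_BRIGHTNESS:
        warnings.append("too dark")

    total = red + green + blue
    if total > 0 and blue / total > MAX_BLUE_RATIO:
        warnings.append("too blue")
    return warnings


# Made-up uniform "images" standing in for real photos.
dark_photo = [(15, 20, 20)] * 100
blue_photo = [(40, 90, 200)] * 100
balanced_photo = [(120, 130, 125)] * 100

print(assess_quality(dark_photo))      # ['too dark']
print(assess_quality(blue_photo))      # ['too blue']
print(assess_quality(balanced_photo))  # []
```

Flagged pictures could either be excluded from training or colour-corrected first; which option works better is an open question.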

Retrieving data

There are 6.3 million pictures with the #scubadiving hashtag on Instagram. On Facebook there are groups such as Wetpixel Underwater Photography, with 37.9k users and 1,188 posts in the last month. Unfortunately, the Graph API does not allow mass scraping; doing so requires a residential proxy so that the website does not block the requests.

Instagram Scraping Experiment

I found open source code that allows downloading posts by hashtag.

Since 2021, Instagram has changed its rules and mass scraping is problematic; it always requires a proxy. I scraped some posts tagged with #tigershark.

Data from Instagram must be verified before training the model. Many posts do depict a Tiger Shark, but some show a White Tip Shark, a Great White Shark, a tiger, drawings or other unrelated objects. Another major problem with Instagram and Facebook is the lack of a specific location (e.g. an island name instead of a dive spot or geographical coordinates) and of the date when the picture was actually taken. Flickr includes that data; however, not many divers use it.
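
The verification step described above could be partly automated. The following is a minimal sketch under stated assumptions: the `ScrapedPost` fields, the `review_status` helper, the 0.8 confidence threshold and the example URLs are all hypothetical, and the predicted label is assumed to come from some already trained classifier. It sorts posts into those that can be used, those that lack a date or dive spot, and those that probably show something else.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScrapedPost:
    url: str
    predicted_label: str       # output of a hypothetical classifier
    confidence: float          # classifier confidence between 0 and 1
    taken_on: Optional[str]    # ISO date the picture was taken, if known
    dive_spot: Optional[str]   # specific dive spot, if known


def review_status(post, expected_label, min_confidence=0.8):
    """Decide whether a scraped post is usable for the given species."""
    if post.predicted_label != expected_label or post.confidence < min_confidence:
        return "reject"
    if post.taken_on is None or post.dive_spot is None:
        return "needs metadata"
    return "accept"


# Made-up posts tagged #tigershark.
posts = [
    ScrapedPost("https://example.com/p/1", "Tiger Shark", 0.95, "2020-08-14", "Example Reef"),
    ScrapedPost("https://example.com/p/2", "Tiger Shark", 0.91, None, None),
    ScrapedPost("https://example.com/p/3", "Great White Shark", 0.88, "2019-03-02", "Example Bay"),
    ScrapedPost("https://example.com/p/4", "Tiger Shark", 0.42, "2021-01-20", "Example Reef"),
]

statuses = [review_status(post, "Tiger Shark") for post in posts]
for post, status in zip(posts, statuses):
    print(post.url, status)
```

Rejected posts would still need a human check, since a classifier trained on a small data set can be wrong in both directions.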

Databases

I was looking for databases of marine species. There are a couple of these, for example the World Register of Marine Species (WoRMS). WoRMS holds a huge number of verified marine species. Unfortunately, there are few pictures of any specific animal (a couple at most), many of the pictures are low quality, and some are even scanned postage stamps.

I found an interesting data set that comes from two aquariums in the USA and consists of 638 images with 4817 bounding boxes (Aquarium Object Detection 4817 Bounding Boxes, 2020). It is general with regard to species; however, it could help me with training the model.

Interesting projects

Using computer vision to protect endangered species on safari

Kasim Rafiq, a conservationist and National Geographic Explorer, found that pictures taken by tourists may help with counting African predators. Computer vision and machine learning offer a solution that saves 95% of the cost of other monitoring methods. Cameras mounted on cars detect and photograph animals on safari tours, and the collected data is then used for species surveys (Nelson, 2020).

Using computer vision to count fish populations

Jamie Shaffer, a data scientist from Washington state, used object detection to collect data on the salmon population in the Pacific Northwest. She prepared 317 images for deep learning and used a YOLO v5 model to detect fish. Jamie learnt from the project that pictures with excellent lighting are required to get the best results (Nelson, 2020a).

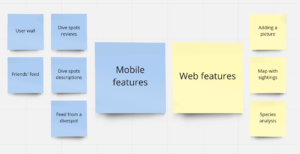

Solution

I would like to create an app for divers that lets them gather and discuss their experiences from dives. The collected data would be processed by AI to recognise the species in the pictures, and the output could be used by scientists, management and government to take action in endangered places where changes have been noticed. The app should work on both platforms, web and mobile, as divers may look for information while travelling.

I interviewed a couple of divers, asking what kind of features such an app could offer. Some that were mentioned:

- Point system for pictures taken

- Adding people from a particular dive

- Species database

- Information about dive spots

- Log book

- Dive spot feed

- Current problems in a particular place

Duikersgids

Duikersgids provides an app covering all the dive spots in the Netherlands. It is possible to log every dive, retrieve detailed information about a dive spot, check others’ logs, access a map of the place, etc.

RedPROMAR

It is a tool created by the Government of the Canary Islands for monitoring marine life. “This is an information system that registers the continuous changes that are occurring in our oceans” (Red PROMAR, n.d.). RedPROMAR is the software closest to my idea, and I am currently talking with one of its creators about the possibility of receiving feedback or collaborating. The app consists of reported species, a species guide where divers can match what they have seen during a dive, alerts about the condition of the coasts, events and a ranking of the most active users.

Further steps and Conclusion

- Conducting a survey with divers.

- Conducting interviews with marine scientists.

- Getting a data set of pictures from divers.

- Creating a clickable prototype in order to test it with divers.

- Creating a prototype of the data visualisation.

I have been looking at different solutions for displaying geographical data in order to map the reports.

A data set with longitude, latitude, date and recognised species would allow me to display the sightings.
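
Such a data set could be prepared for a map and for a view over time roughly as follows. This is a sketch under stated assumptions: the `Sighting` class, the helper names and the sample records are made up for illustration, and GeoJSON is only one possible exchange format that many mapping tools accept. Note that GeoJSON stores coordinates in longitude, latitude order.

```python
import json
from collections import Counter
from dataclasses import dataclass


@dataclass
class Sighting:
    species: str
    latitude: float
    longitude: float
    date: str  # ISO format, e.g. "2020-08-14"


def to_geojson(sightings):
    """Convert sightings into a GeoJSON FeatureCollection of points."""
    features = [
        {
            "type": "Feature",
            "geometry": {
                "type": "Point",
                # GeoJSON order is [longitude, latitude].
                "coordinates": [s.longitude, s.latitude],
            },
            "properties": {"species": s.species, "date": s.date},
        }
        for s in sightings
    ]
    return {"type": "FeatureCollection", "features": features}


def sightings_per_year(sightings):
    """Count sightings per (species, year) to show changes over time."""
    return Counter((s.species, s.date[:4]) for s in sightings)


# Made-up sample records, not real observations.
sample = [
    Sighting("Tiger Shark", -8.5, 119.5, "2019-06-01"),
    Sighting("Tiger Shark", -8.6, 119.4, "2020-07-15"),
    Sighting("Lionfish", -8.5, 119.5, "2020-07-15"),
]

collection = to_geojson(sample)
yearly = sightings_per_year(sample)
print(json.dumps(collection, indent=2))
print(yearly)
```

The resulting FeatureCollection could be loaded into a web map, while the yearly counts could feed a simple chart per species.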

The app seems to be a complex solution; however, it adds an ecological purpose to recreational diving. The community of divers is big and might play a role in saving underwater life.

References

Facts and figures on marine biodiversity | United Nations Educational, Scientific and Cultural Organization. (n.d.). UNESCO. http://www.unesco.org/new/en/natural-sciences/ioc-oceans/focus-areas/rio-20-ocean/blueprint-for-the-future-we-want/marine-biodiversity/facts-and-figures-on-marine-biodiversity/

The World Wide Fund for Nature. (2015). Living Blue Planet Report Species, habitats and human well-being. http://ocean.panda.org.s3.amazonaws.com/media/Living_Blue_Planet_Report_2015_08_31.pdf

PADI. (2019). 2019 Worldwide Corporate Statistics. https://www.padi.com/sites/default/files/documents/2019-02/2019%20PADI%20Worldwide%20Statistics.pdf

Reef monitoring sampling methods | AIMS. (n.d.). Australian Government. https://www.aims.gov.au/docs/research/monitoring/reef/sampling-methods.html

2020 Lap of Australia. (2020, March 16). Reef Life Survey. https://reeflifesurvey.com/lap-of-aus-tracking/

Aquarium Object Detection 4817 bounding boxes. (2020, November 25). Kaggle. https://www.kaggle.com/paulrohan2020/aquarium-object-detection-4817-bounding-boxes?select=README.dataset.txt

Nelson, J. (2020, November 2). How This Fulbright Scholar is Using Computer Vision to Protect Endangered Species. Roboflow Blog. https://blog.roboflow.com/how-this-fulbright-scholar-is-using-computer-vision-to/

Nelson, J. (2020a, October 5). Using Computer Vision to Count Fish Populations (and Monitor Environmental Health). Roboflow Blog. https://blog.roboflow.com/using-computer-vision-to-count-fish-populations/

Red PROMAR. (n.d.). RedPROMAR. https://redpromar.org/