Premise

Giving in-app feedback on services, products, and features using computer vision.

Synopsis

All product owners and companies want to improve the services and products they offer. To do so, they need to know what users think of those products, and one way to find out is to ask for direct feedback. Companies already use implicit user data, e.g., the time spent in the app or on a particular feature, which products or services users look at, and how often they visit the application or website. Nevertheless, active user feedback remains important because it expresses what the user actually thinks.

Scope of the research. Literature insights

Users’ in-app feedback should be treated as a lifelong process for any product or service the app offers, and giving users an active role makes change and adaptation more transparent (Almaliki, Ncube, & Ali, 2014). Users should be able to give feedback whenever they wish, on the go, and with different ways of capturing it (Seyff, Ollmann, & Bortenschlager, 2014). Long-term app engagement requires, among other things, an intuitive interface, good performance, and sufficient and adequate features (Ter-Tovmasyan, Scherr, & Elberzhager, 2021), and in-app feedback is a way to test these.

Face recognition tools are based on the features and expressions of the face (Feng et al., 2021). A human facial expression is an observable signal that conveys a message. Facial movements change with mood and correspond to the expression (Martinez & Valstar, 2016). Automatic facial recognition has been used before in studies of human emotional expression (Fragopanagos & Taylor, 2005), but not for giving in-app feedback.

In-app feedback can be requested by the app when predefined conditions are met (pull feedback) or initiated at the user's request (push feedback) (Ter-Tovmasyan, Scherr, & Elberzhager, 2021). Pull feedback can be triggered by restarting the app after a crash, by using a certain feature, by reaching a goal, and by many other events. Studies have concluded that, for push feedback, the fewer the steps and the simpler the process, the more likely users are to go through with it, and that the way feedback is initiated matters (Almaliki, Ncube, & Ali, 2014). Users’ motivation depends heavily on recognising that the system has improved because of their feedback (Ter-Tovmasyan, Scherr, & Elberzhager, 2021).
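The distinction between pull and push feedback can be sketched as a small decision function. This is only an illustration: the event names, the `FeedbackPrompt` type, and the daily cap on app-initiated prompts are assumptions made for the example and do not come from the cited studies.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical event names; the text only lists crash restarts, feature use,
# and reaching a goal as examples of pull triggers.
PULL_TRIGGERS = {"restart_after_crash", "feature_used", "goal_reached"}


@dataclass
class FeedbackPrompt:
    kind: str    # "pull" (app-initiated) or "push" (user-initiated)
    reason: str  # the event that opened the prompt


def prompt_for_event(event: str, prompts_shown_today: int,
                     daily_cap: int = 1) -> Optional[FeedbackPrompt]:
    """Decide whether an app event should open a feedback prompt."""
    if event == "feedback_button_pressed":
        # Push feedback: the user asked for it, so the request is always honoured.
        return FeedbackPrompt(kind="push", reason=event)
    if event in PULL_TRIGGERS and prompts_shown_today < daily_cap:
        # Pull feedback: app-initiated, capped (an assumption) to limit interruptions.
        return FeedbackPrompt(kind="pull", reason=event)
    return None
```

Keeping the push path unconditional reflects the finding that user-initiated feedback should involve as few steps as possible.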

First iteration

There is a gap in research with regard to giving in-app feedback using a computer vision machine learning model, more specifically using the phone's camera. This technology has the potential to be used in many mobile applications, as facial expressions could be a very easy and quick way to provide feedback on a service, product, or tool. The purpose of this project is to build an add-on or plug-in that companies can install within their app and use for any service or product. Voluntary human feedback should be honest and can be beneficial for companies.

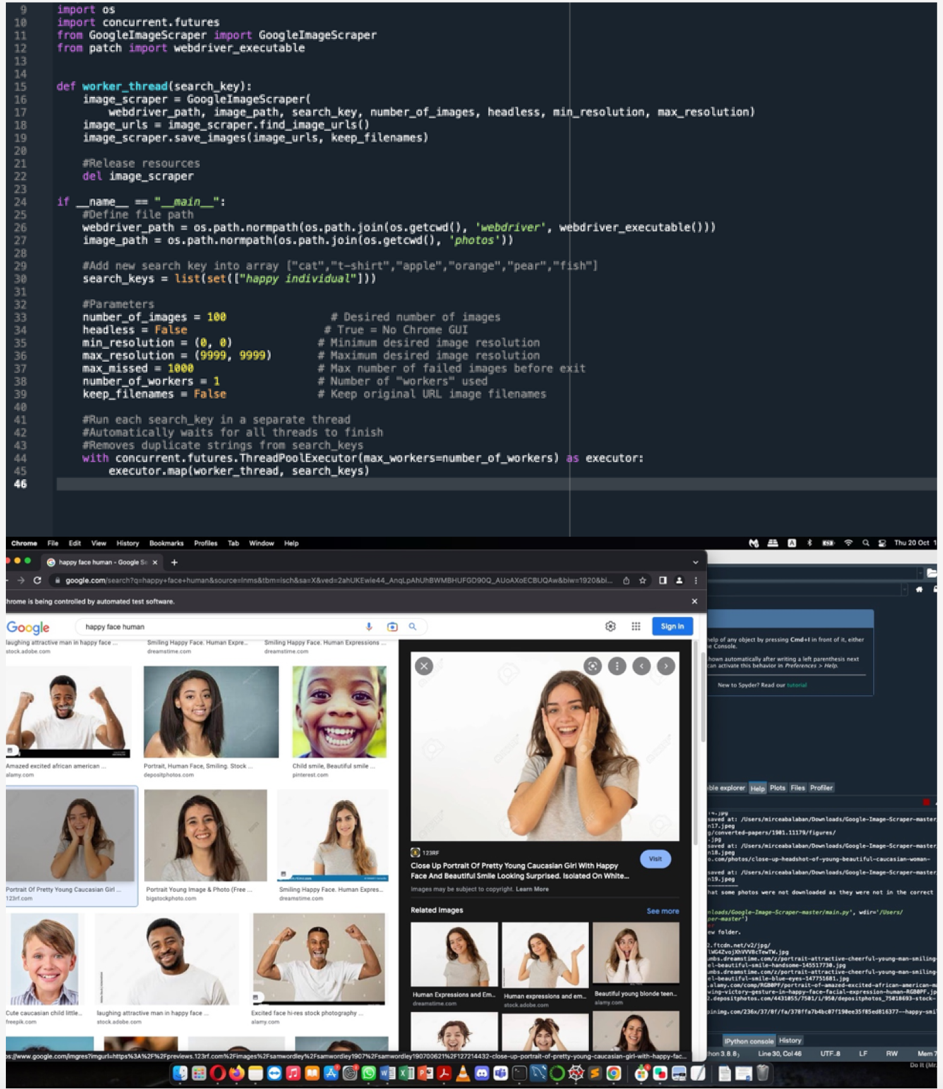

An object recognition tool uses computer vision (CV), which in turn relies on machine learning (ML) algorithms and deep learning. Such a tool learns to distinguish between the classes it was trained on. For this project I decided to try ML tools that do not require code, and from the available options I tried Lobe and Teachable Machine. To collect more images without downloading them one by one, I used a web scraper to download them from Google Images (GitHub, 2020).

Figure 1. The Google Images Scraper

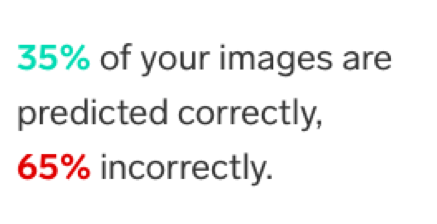

I started with Lobe. After first training two categories (‘happy’ and ‘sad’) with a few images, I uploaded all the pictures I had scraped. The accuracy was very low, so I started cleaning the photos, deleting the ones with a lot of noise. As seen below, the accuracy remained low.

Figures 2 and 3. Lobe accuracy before and after uploading and cleaning approximately 400 images
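Accuracy here means the share of images that the model labels correctly. As a rough sketch of how it could be checked per class outside the tool, assuming a set of held-out images with known labels, the function below compares true and predicted labels. The function name and the sample data are invented for illustration and are not results from this project.

```python
from collections import Counter
from typing import Dict, List, Tuple


def per_class_accuracy(pairs: List[Tuple[str, str]]) -> Dict[str, float]:
    """Return the fraction of correct predictions for each true label.

    Each pair is (true_label, predicted_label).
    """
    total = Counter(true for true, _ in pairs)
    correct = Counter(true for true, pred in pairs if true == pred)
    return {label: correct[label] / total[label] for label in total}


# Made-up predictions, for illustration only.
sample = [
    ("happy", "happy"),
    ("happy", "sad"),
    ("sad", "sad"),
    ("sad", "sad"),
]
print(per_class_accuracy(sample))
```

Looking at each class separately can show whether one expression is harder for the model than the other, which a single overall percentage hides.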

After failing to improve the accuracy, I decided to try Teachable Machine as well to see whether it classified the images better.

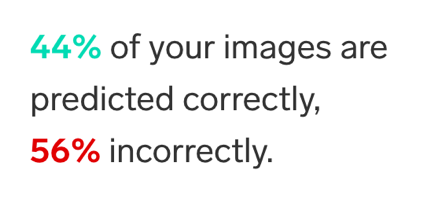

Figure 4. Teachable Machine ML

Teachable Machine worked better at recognising human emotions. I decided to use ‘happy’ and ‘sad’ as the emotions, which would act as the usual ‘like/dislike’ buttons.
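The mapping from a recognised emotion to a like/dislike vote can be sketched as follows. This is a minimal illustration, assuming the classifier returns a probability per class. The `feedback_from_prediction` name and the 0.8 confidence threshold are assumptions, not part of Teachable Machine or of the prototype.

```python
from typing import Dict, Optional

# The two trained classes and the feedback each one stands for.
LABEL_TO_FEEDBACK = {"happy": "like", "sad": "dislike"}


def feedback_from_prediction(probs: Dict[str, float],
                             threshold: float = 0.8) -> Optional[str]:
    """Turn class probabilities into a vote, or None if the model is unsure."""
    if not probs:
        return None
    label = max(probs, key=probs.get)
    if probs[label] < threshold:
        # Assumed safeguard: do not record a vote from an uncertain prediction.
        return None
    return LABEL_TO_FEEDBACK.get(label)


print(feedback_from_prediction({"happy": 0.93, "sad": 0.07}))
```

Returning `None` for low-confidence predictions lets the app ask the user to try again instead of recording a vote they did not intend.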

I designed a prototype in Figma to show users what such a tool would look like.

Figure 5. Figma design

After training the model and creating the front end, I tested the ML model and the design with 3 users to receive feedback on the idea of the project, the design, and the model itself.

The insights:

- The model needs to be improved by uploading more images with more lighting conditions and more angles.

- Giving feedback should be quick and efficient, with fewer steps leading to it.

- To make it more efficient, a small feedback button should be placed in a corner, with short text indicating what the button does.

Second iteration

Based on the insights from the first user test, the ML model needed improvement, so I scraped more images and also cleaned the ones I already had to ensure that the model was trained properly. I also improved the feedback button by adding text next to it and making it more suggestive. Moreover, as seen in Fig. 7, the screen where users give feedback with their facial expressions pops up directly after pressing the button.

Figure 6. Improved feedback button (left)

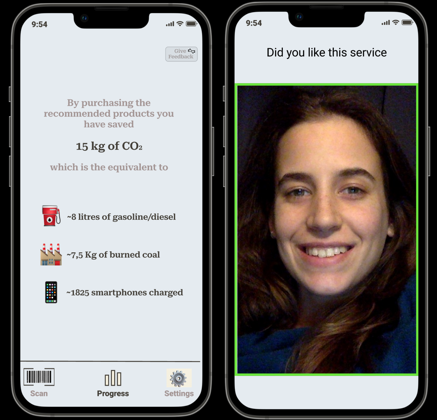

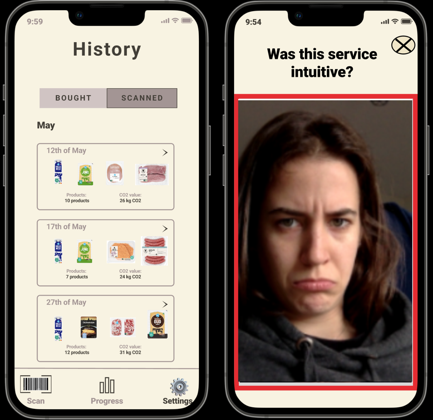

Based on the literature, there are two types of feedback: push and pull. I decided to test both types and see which the users preferred.

Figure 7. Push and pull feedback

After improving the model and the design, I tested them with the same 3 users, and the insights were:

- The ML model has improved.

- The feedback widget is better now, but it could still be bigger and clearer.

- One user mentioned that the app should indicate more clearly what exactly they are supposed to do after pressing the feedback button, for instance by stating that the app will open the camera and that they should give feedback using facial expressions.

- One user said they liked the pop-up feedback option, as they would only give feedback on a service if it is quick and does not involve much effort. The other users said that they would be annoyed to receive pop-ups, even though they understand that these would only appear in certain situations (for a new service).

- One user mentioned that making a thumbs up/down gesture in front of the camera could be easier to train and more intuitive. Moreover, the feedback icon already shows thumbs up/down, so it would make sense.

Third iteration

Based on the second iteration insights, I built another ML model that recognises the thumbs up/down motion using the camera. Initially I trained the model with a few images and saw that it was accurate from the beginning. However, in order to improve the model, I uploaded more scraped images.

Figure 8. Training the thumbs up/down ML model

I created a more suggestive design so that users could better understand how to give feedback using the camera. After pressing the feedback button, the screen on the left (Fig. 9) appears; it shows a countdown that prepares the user to give the preferred feedback.

Figure 9. Give feedback screens

Figure 10. Feedback button designs
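The countdown-then-capture flow described above could be sketched as below. This is an illustration under stated assumptions, not the prototype's implementation: `classify_frame` is a hypothetical callable standing in for the camera plus the trained model, and taking a majority vote over several frames is an added idea to smooth out single-frame misclassifications.

```python
import time
from collections import Counter
from typing import Callable, Optional


def capture_feedback(classify_frame: Callable[[], str],
                     countdown_s: int = 3,
                     frames: int = 5,
                     sleep: Callable[[float], None] = time.sleep,
                     show: Callable[[str], None] = print) -> Optional[str]:
    """Count down, classify several frames, and return the majority label."""
    # Countdown so the user can prepare the expression or gesture.
    for remaining in range(countdown_s, 0, -1):
        show(f"Give your feedback in {remaining}...")
        sleep(1)

    # Classify a few frames and count how often each label appears.
    votes = Counter(classify_frame() for _ in range(frames))
    top = votes.most_common(1)
    if not top:
        return None
    label, count = top[0]
    # Accept the label only if it wins a strict majority of the frames.
    return label if count > frames / 2 else None
```

The `sleep` and `show` parameters exist only so the function can be tried without a real timer or user interface.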

The insights:

- When asked which model they preferred, the users said that the thumbs up/down motion makes more sense for giving feedback. However, it involves moving the arm, which can be inconvenient, and pressing a thumbs up/down feedback button requires the same amount of effort. In contrast, giving feedback with facial expressions does not involve any other part of the body, which makes it easier. The general opinion was that both ways should be tested further.

- The emotion recognition ML model was much improved, and the thumbs up/down ML model was quite good from the beginning but could still be improved.

- Of the feedback buttons, the users liked the 2nd and the 3rd from Fig. 10.

Conclusion and future research

My idea for the tool is to improve the way users give feedback so that they actually take the time to do it. Even though some app creators and owners have analytics data about how much and when users spend time on certain features, active user feedback is more honest. Many apps have feedback features, but most of the time they appear when the user least expects or wants them. Future research should test and implement better ML models and should test the push/pull feedback types further. Moreover, the age and skills of users should be taken into account. With analytics data, app developers could find the ideal time to show pop-up windows and test it with users. Future researchers should also focus on implementing a way for users to explain why they did not like the service.

References

Andreoletti, D., Luceri, L., Peternier, A., Leidi, T., & Giordano, S. (2021, September 26). The Virtual Emotion Loop: Towards Emotion-Driven Product Design via Virtual Reality. Annals of Computer Science and Information Systems. https://doi.org/10.15439/2021f120

Ter-Tovmasyan, M., Scherr, S. A., & Elberzhager, F. (2021). Requirements for In-App Feedback Tools – a Mapping Study. Procedia Computer Science, 191, 111–118. https://doi.org/10.1016/j.procs.2021.07.017

Almaliki, M., Ncube, C., & Ali, R. (2014). The design of adaptive acquisition of users feedback: An empirical study. 2014 IEEE Eighth International Conference on Research Challenges in Information Science (RCIS). https://doi.org/10.1109/rcis.2014.6861076

Martinez, B., & Valstar, M. F. (2016). Advances, Challenges, and Opportunities in Automatic Facial Expression Recognition. Advances in Face Detection and Facial Image Analysis, 63–100. https://doi.org/10.1007/978-3-319-25958-1_4

GitHub – ohyicong/Google-Image-Scraper: A library to scrap google images. (2020). GitHub. https://github.com/ohyicong/Google-Image-Scraper

Seyff, N., Ollmann, G., & Bortenschlager, M. (2014). AppEcho: a user-driven, in situ feedback approach for mobile platforms and applications. Proceedings of the 1st International Conference on Mobile Software Engineering and Systems – MOBILESoft 2014. https://doi.org/10.1145/2593902.2593927

Fragopanagos, N., & Taylor, J. (2005, May). Emotion recognition in human–computer interaction. Neural Networks, 18(4), 389–405. https://doi.org/10.1016/j.neunet.2005.03.006

Feng, L., Wang, J., Ding, C., Chen, Y., & Xie, T. (2021, February 1). Research on the Feedback System of Face Recognition Based on Artificial Intelligence Applied to Intelligent Chip. Journal of Physics: Conference Series, 1744(3), 032162. https://doi.org/10.1088/1742-6596/1744/3/032162