Micha Tielemans (1792593) – State of the Art Technology – Project Diary (Resit)

PREMISE

“Using computer vision to make the process of keeping track of grocery inventory easier and more insightful to assist with reducing household food waste.”

SYNOPSIS

People should be more aware of the consequences of food waste and of how consumers contribute to this problem. Household food waste causes environmental, social, and economic problems and is driven by consumer behavior. Consumers are more likely to change their behavior if the processes for reducing food waste are made as convenient as possible. This could be achieved with the help of computer vision technology. Through several rounds of iteration and user evaluation, this project aims to reduce household food waste.

SUBSTANTIATION

Food waste is a global problem: an estimated one-third of food production, a total of 1.3 billion tons of food per year, is lost or wasted (Schanes et al., 2018). Food waste refers to edible products intended for human consumption that are lost or wasted (Halloran et al., 2014). The disposal of food waste and the activities surrounding food production contribute to the emission of greenhouse gases. Intensive agricultural activities result in natural resource depletion in the areas used for agriculture (Girotto et al., 2015). Medium- and high-income countries commonly discard food while it is still edible. This food is thrown away by either retailers or consumers and adds to the total amount of food waste (Alexander et al., 2013).

The focus of this project is household food waste. An estimated 35 percent of food waste is generated at the household level, which makes it a substantial fraction of the total food waste generated (Chalak et al., 2016). Contributing to this is low public awareness of the negative impact of food waste and of consumers' own contribution to it. Purchasing too much, not planning meals, not carrying out a food inventory before shopping, and throwing away food that has passed the expiration date all result in household food waste (Graham-Rowe et al., 2014). Reducing the inconvenience of food management is therefore important when aiming to combat negative consumer behavior regarding food waste (Teng et al., 2021).

Reducing inconvenience to change consumer behavior and reduce food waste can be achieved using computer vision. Computer vision detects visual objects in digital imagery and assigns them to a certain class (Zou et al., 2023). This could be done for classes of groceries. Computer vision is already successfully implemented in the food industry, which ranks in the top 10 industries that use this technology. It has proven useful for measuring the quality of agricultural and food products consistently and accurately, and it is faster than human inspection (Brosnan & Sun, 2004). Computer vision for food inventory management has also been integrated into new technologies such as smart fridges (Lee et al., 2021).

This project aims to explore a computer vision-aided prototype that helps consumers carry out a food inventory and reminds them to cook food before the expiration date. The digital journey focuses on exploring several computer vision tools and programs.

PROJECT OVERVIEW

Iteration 1: Lobe AI & Teachable Machine

The purpose of the first iteration is to explore the technique of computer vision. I found two tools: Lobe and Teachable Machine. Both tools are meant for machine learning and can be trained with a dataset. I found the Freiburg Groceries Dataset, which consists of 5000 images from 25 different classes of groceries.

Both tools were trained with the complete dataset and experienced difficulties. Teachable Machine crashed on all attempts, and Lobe had a very low correct prediction percentage of 22% and struggled to identify products.

Figure 1 Lobe AI test

I explored whether both programs would perform better with a selected number of categories, and that returned great results. Teachable Machine performed significantly better. This could be because the program uses multiple epochs. The number of epochs is the number of complete passes through the dataset (Brownlee, 2018).
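To make the idea of epochs concrete, the toy sketch below trains a one-parameter model with plain gradient descent. It is not how Teachable Machine works internally; the data, the learning rate, and the function names are assumptions chosen only to show that each epoch is one full pass over the dataset and that more passes usually lower the error.

```python
def train(data, epochs, learning_rate=0.01):
    """Fit y = w * x with stochastic gradient descent (toy example)."""
    w = 0.0
    for _ in range(epochs):          # one epoch = one complete pass over data
        for x, y in data:            # every sample is seen once per epoch
            gradient = 2 * (w * x - y) * x
            w -= learning_rate * gradient
    return w


def mean_squared_error(data, w):
    """Average squared difference between prediction and target."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


# Hypothetical dataset that follows y = 2 * x exactly.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

for epochs in (1, 5, 20):
    w = train(data, epochs)
    print(epochs, "epochs -> error", mean_squared_error(data, w))
```

With a single epoch the weight has barely moved towards 2, while after 20 epochs the error is close to zero.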

Figure 2 Teachable Machine test

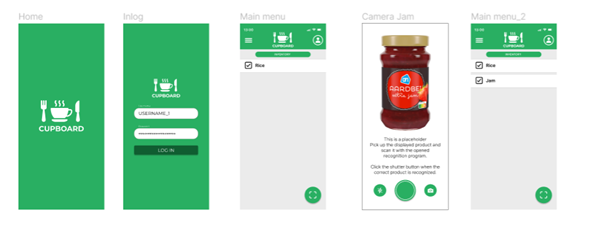

The use of Figma for user testing was necessary so that users could get a better insight into the intended purpose of the application and the technology.

Figure 3 Figma iteration 1 (Link)

The visualization of the output from Teachable Machine was very helpful, so I decided to combine it with the Figma model for user tests.

Figure 4 User test (Figma & Teachable Machine)

Insights

Prototype

- A large dataset will impact the computer vision application. Since I intend to recognize all groceries, a different form of image recognition needs to be explored.

User test

- The food items need to be more specific; the terms for groceries are too general for an inventory system.

- The prototype implies taking pictures of the products. Participants feel like this would take time and storage. Scanning products, like a QR code in the camera function with a pop-up, would be preferred.

Iteration 2: Barcode scanner

Barcodes are used on products for identification and inventory control purposes (Youssef & Salem, 2007) and give us another way to recognize products with computer vision. Most mobile phones support the recognition of symbols like EAN barcodes in their camera (Ohbuchi et al., 2004). Barcode scanners detect the boundaries of the dark and light areas to identify products (Shellhammer et al., 1999).
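An EAN-13 number ends in a check digit computed from the first twelve digits, which makes it possible to reject a misread scan before looking the product up. The sketch below is an optional safeguard that was not part of the prototype; the function names are my own.

```python
def ean13_check_digit(first_twelve):
    """Compute the EAN-13 check digit for a string of 12 digits."""
    if len(first_twelve) != 12 or not first_twelve.isdigit():
        raise ValueError("expected exactly 12 digits")
    # Digits are weighted 1, 3, 1, 3, ... from left to right.
    total = sum(
        int(digit) * (3 if index % 2 else 1)
        for index, digit in enumerate(first_twelve)
    )
    return (10 - total % 10) % 10


def is_valid_ean13(code):
    """Return True if the 13-digit code has a matching check digit."""
    if len(code) != 13 or not code.isdigit():
        return False
    return ean13_check_digit(code[:12]) == int(code[12])


print(is_valid_ean13("4006381333931"))  # valid code
print(is_valid_ean13("4006381333932"))  # last digit altered
```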

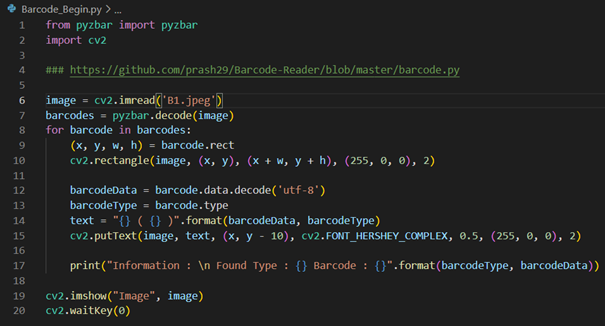

We used the Python libraries Pyzbar and OpenCV to recognize the barcodes from photos of products.
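The code used in the prototype is only shown in the figures, so the following is a minimal sketch of how such a scan could look rather than the actual implementation. It assumes the `opencv-python` and `pyzbar` packages are installed; the function names and the list of accepted barcode types are assumptions.

```python
def read_barcodes(image_path):
    """Decode every barcode that pyzbar finds in a photo."""
    # Imported here so the helper below can be used without these packages.
    import cv2
    from pyzbar.pyzbar import decode

    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)
    # Grayscale is sufficient for detecting the dark and light bars.
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    return decode(gray)


def barcode_numbers(decoded, wanted_types=("EAN13", "EAN8", "UPCA")):
    """Keep only product barcodes and return their numbers as strings."""
    return [
        item.data.decode("ascii")
        for item in decoded
        if item.type in wanted_types
    ]
```

A call such as `barcode_numbers(read_barcodes("product.jpg"))` would then return the barcode numbers found in the photo, where `product.jpg` is a placeholder file name.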

Figure 5 Output Code barcode scanner

Figure 6 Output Code barcode scanner

The online product database Open Food Facts lets us look up a barcode number and returns information about the product. I want to use the name, brand name, and the quantity of each product.
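A lookup of this kind could be sketched as follows. This is not the prototype's code: it assumes the public Open Food Facts product endpoint and the field names `product_name`, `brands`, and `quantity`, which should be checked against the current API documentation. The user agent string is a made-up placeholder.

```python
import json
import urllib.request

# Assumed endpoint; verify against the Open Food Facts API documentation.
API_URL = "https://world.openfoodfacts.org/api/v0/product/{barcode}.json"


def fetch_product(barcode, timeout=10):
    """Download the raw JSON record for one barcode number."""
    request = urllib.request.Request(
        API_URL.format(barcode=barcode),
        headers={"User-Agent": "GroceryInventoryPrototype/0.1"},  # placeholder
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def summarize_product(payload):
    """Reduce the API response to name, brand, and quantity."""
    if payload.get("status") != 1:  # assumed: status 1 means product found
        return None
    product = payload.get("product", {})
    return {
        "name": product.get("product_name", ""),
        "brand": product.get("brands", ""),
        "quantity": product.get("quantity", ""),
    }
```

Keeping the download and the summary in separate functions makes it possible to let the user correct the summary manually when the database returns the wrong product.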

Figure 7 Output barcode products

All the feedback collected from the previous round of user testing has been incorporated into Figma. A video with a pop-up is displayed to emulate the process of scanning the barcode.

Figure 8 Figma iteration 2 (Link)

This prototype is used for the next round of user testing.

Figure 9

Insights

Prototype

- Scanning a barcode feels familiar and is easy to understand.

- Using computer vision to scan barcodes gives better output than the image classification from the first iteration.

User test

- Users doubt whether the technology would output the correct product every time. They want the option to manually change the product.

- To get a better overview, users want to group the products by the place where they are stored.

Iteration 3: Expiration date text recognition

Participants in the user testing expressed that adding the expiration date to the application could help them remember when to prepare meals with certain products. Optical Character Recognition (OCR) uses computer vision to convert printed text in images into machine-readable text (Singh et al., 2012). We will use Tesseract, an open-source OCR engine (Smith, 2007), in Python to extract text from the packaging.

I tested the code with the products, but it did not perform up to standard. Only one package returned readable text, including the expiration date.
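A minimal sketch of such an extraction step is given below. It assumes the `pytesseract` wrapper and `opencv-python` are installed alongside the Tesseract engine itself; the binarization step and the page segmentation mode are assumptions, not the settings used in the prototype.

```python
def tesseract_config(page_segmentation_mode=6, engine_mode=3):
    """Build the command line options passed on to Tesseract."""
    return f"--oem {engine_mode} --psm {page_segmentation_mode}"


def extract_text(image_path, page_segmentation_mode=6):
    """Run OCR on a photo of a package and return the recognized text."""
    # Imported here so the config helper works without these packages.
    import cv2
    import pytesseract

    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    # Otsu thresholding turns the photo into black text on white (or the
    # reverse), which often helps on unevenly lit packaging.
    _, binary = cv2.threshold(
        gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU
    )
    return pytesseract.image_to_string(
        binary, config=tesseract_config(page_segmentation_mode)
    )
```

Page segmentation mode 6 treats the image as a single block of text; mode 11 looks for sparse text and may be worth trying for small printed dates.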

Figure 10 Photo product expiration date cereal – Figure 11 Output photo product expiration date cereal

I tried other pictures with the text more centered, but it did not perform better. It recognized text from one other product, but this was not usable.

Figure 12 Photo product expiration date latte – Figure 13 Output photo product expiration date latte

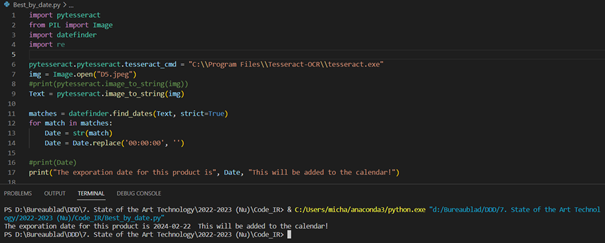

I cleaned the extracted text from the cereal and created an output of the expiration date.
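The cleaning step could look roughly like the sketch below, which searches the OCR output for the first valid date. It is an illustration and not the code from the figure: it assumes day-first dates written with dots, slashes, or hyphens, and the sample text is invented.

```python
import re
from datetime import date

# Assumed format: day, month, year separated by ".", "/" or "-".
DATE_PATTERN = re.compile(r"\b(\d{1,2})[./-](\d{1,2})[./-](\d{4}|\d{2})\b")


def find_expiration_date(ocr_text):
    """Return the first valid date found in the OCR text, or None."""
    for day, month, year in DATE_PATTERN.findall(ocr_text):
        # Two-digit years are assumed to lie in the 2000s.
        full_year = int(year) + 2000 if len(year) == 2 else int(year)
        try:
            return date(full_year, int(month), int(day))
        except ValueError:
            continue  # e.g. 31/02 produced by a misread digit
    return None


sample_text = "BEST BEFORE\n12.05.2023 L1234"  # hypothetical OCR output
print(find_expiration_date(sample_text))
```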

Figure 14 Code & output expiration date

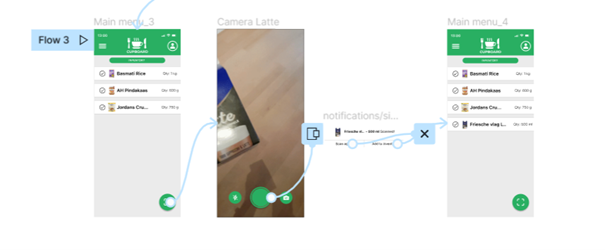

The flow of adding the expiration date and an overview of when groceries will expire are included in the final iteration of Figma and tested with users.

Figure 15 Figma iteration 3 (Link)

Figure 16 User test Figma iteration 3

Insights

Prototype

- The character recognition needs to improve to be usable. It may be possible to tweak some settings to improve its performance.

User test

- Scanning every product could become exhausting. We could use the receipt and character recognition to import all the groceries in one go. Other ways to register all groceries in bulk should be explored.

- Users could see themselves using this product, experiencing positive effects, and reducing their food waste. They expressed concerns that they might not use it regularly.

- The overview of when products will expire received a lot of positive feedback. Warning messages that products will expire in the upcoming days could help users be more informed and use this information to reduce their food waste.
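The expiry overview and warning messages mentioned in the last insight could be driven by a small filter like the one sketched here. The inventory structure, the product names, and the three-day window are assumptions for illustration only.

```python
from datetime import date, timedelta


def expiring_soon(inventory, today, days_ahead=3):
    """List products that expire within the window, soonest first."""
    limit = today + timedelta(days=days_ahead)
    soon = [
        (name, expires)
        for name, expires in inventory.items()
        if expires <= limit  # also includes products that already expired
    ]
    return sorted(soon, key=lambda item: item[1])


# Hypothetical inventory: product name mapped to its expiration date.
inventory = {
    "milk": date(2023, 5, 12),
    "cereal": date(2023, 8, 1),
    "yoghurt": date(2023, 5, 10),
}

for name, expires in expiring_soon(inventory, today=date(2023, 5, 10)):
    print(f"{name} expires on {expires.isoformat()}")
```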

Conclusion & Future prototype

Computer vision can make it easier and more insightful for households to track their grocery inventory, which can help reduce household food waste. We can improve the OCR to better recognize expiration dates or use it as an option to extract data from receipts for ease of use. Creating a functioning prototype will help to better understand whether the intended results will be met.

REFERENCES

Alexander, C., Gregson, N., & Gille, Z. (2013). Food waste. The Handbook of Food Research, 1, 471–483.

Brosnan, T., & Sun, D.-W. (2004). Improving quality inspection of food products by computer vision––a review. Journal of Food Engineering, 61(1), 3–16. https://doi.org/10.1016/S0260-8774(03)00183-3

Brownlee, J. (2018). What is the Difference Between a Batch and an Epoch in a Neural Network. Machine Learning Mastery, 20.

Chalak, A., Abou-Daher, C., Chaaban, J., & Abiad, M. G. (2016). The global economic and regulatory determinants of household food waste generation: A cross-country analysis. Waste Management, 48, 418–422. https://doi.org/10.1016/j.wasman.2015.11.040

Girotto, F., Alibardi, L., & Cossu, R. (2015). Food waste generation and industrial uses: A review. Waste Management, 45, 32–41. https://doi.org/10.1016/j.wasman.2015.06.008

Graham-Rowe, E., Jessop, D. C., & Sparks, P. (2014). Identifying motivations and barriers to minimising household food waste. Resources, Conservation and Recycling, 84, 15–23. https://doi.org/10.1016/j.resconrec.2013.12.005

Halloran, A., Clement, J., Kornum, N., Bucatariu, C., & Magid, J. (2014). Addressing food waste reduction in Denmark. Food Policy, 49, 294–301. https://doi.org/10.1016/j.foodpol.2014.09.005

Lee, T.-H., Kang, S.-W., Kim, T., Kim, J.-S., & Lee, H.-J. (2021). Smart Refrigerator Inventory Management Using Convolutional Neural Networks. 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), 1–4. https://doi.org/10.1109/AICAS51828.2021.9458527

Ohbuchi, E., Hanaizumi, H., & Lim Ah Hock. (2004). Barcode Readers using the Camera Device in Mobile Phones. 2004 International Conference on Cyberworlds, 260–265. https://doi.org/10.1109/CW.2004.23

Schanes, K., Dobernig, K., & Gözet, B. (2018). Food waste matters—A systematic review of household food waste practices and their policy implications. Journal of Cleaner Production, 182, 978–991. https://doi.org/10.1016/j.jclepro.2018.02.030

Shellhammer, S. J., Goren, D. P., & Pavlidis, T. (1999). Novel signal-processing techniques in barcode scanning. IEEE Robotics & Automation Magazine, 6(1), 57–65. https://doi.org/10.1109/100.755815

Singh, A., Bacchuwar, K., & Bhasin, A. (2012). A Survey of OCR Applications. International Journal of Machine Learning and Computing, 314–318. https://doi.org/10.7763/IJMLC.2012.V2.137

Smith, R. (2007). An Overview of the Tesseract OCR Engine. Ninth International Conference on Document Analysis and Recognition (ICDAR 2007) Vol 2, 629–633. https://doi.org/10.1109/ICDAR.2007.4376991

Teng, C.-C., Chih, C., Yang, W.-J., & Chien, C.-H. (2021). Determinants and Prevention Strategies for Household Food Waste: An Exploratory Study in Taiwan. Foods, 10(10), Article 10. https://doi.org/10.3390/foods10102331

Youssef, S. M., & Salem, R. M. (2007). Automated barcode recognition for smart identification and inspection automation. Expert Systems with Applications, 33(4), 968–977. https://doi.org/10.1016/j.eswa.2006.07.013

Zou, Z., Chen, K., Shi, Z., Guo, Y., & Ye, J. (2023). Object Detection in 20 Years: A Survey (arXiv:1905.05055). arXiv. http://arxiv.org/abs/1905.05055