Premise

Using a facial expression recognition machine learning model, users can give in-app feedback at the press of a button.

Synopsis

Little is known about what people think of a service or product offered through a mobile app unless they express their opinion, and in-app feedback is one way for them to do so. More often than not, however, users are not motivated to give feedback. This project envisions a quick and easy feedback solution that differs from the conventional ways of giving feedback in an app: a button through which users give quick feedback using their facial expressions.

Scope of the research: literature insights

Users’ motivation to give feedback depends on their recognising that the system improves because of it. For users to give feedback voluntarily, the process should be easy and require only a few steps (Almaliki, Ncube, & Ali, 2014). Almaliki, Ncube and Ali’s (2014) research on user feedback identified several reasons why feedback is not given as often as it could be and why users lose interest: lack of visibility, lack of usability and simplicity, being forced to give feedback by the software, and poor design.

When using an application, users expect its features, products or services to be flawless and its processes to run smoothly. If users need little effort to understand and navigate an application, it is well designed and well explained (Violante et al., 2019). One way to achieve high-quality services within an app is to measure user satisfaction through in-app feedback. In-app feedback can be collected both when predefined app conditions are met (pull feedback) and at the user’s request (push feedback) (Ter-Tovmasyan, Scherr, & Elberzhager, 2021). Users should be given a voice and an active role in changing and adapting features by providing quick and easy in-app feedback, which can be treated as a lifelong process (Almaliki, Ncube, & Ali, 2014).
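To make the push/pull distinction concrete, here is a minimal Python sketch of how an app might decide when a feedback flow starts. The conditions in `AppState` and the rule in `pull_condition_met` are hypothetical examples chosen for illustration; they are not requirements from the cited study or behaviour of the prototype.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FeedbackMode(Enum):
    PULL = "pull"  # the app asks for feedback when a predefined condition is met
    PUSH = "push"  # the user starts the feedback, e.g. by pressing a button


@dataclass
class AppState:
    # Hypothetical conditions an app might track; not taken from the prototype.
    completed_task: bool = False
    sessions_since_last_prompt: int = 0


def pull_condition_met(state: AppState, min_sessions: int = 5) -> bool:
    """Assumed rule: ask after a completed task, but not too often."""
    return state.completed_task and state.sessions_since_last_prompt >= min_sessions


def next_feedback(state: AppState, button_pressed: bool) -> Optional[FeedbackMode]:
    """Decide whether a feedback flow should start now, and of which kind."""
    if button_pressed:
        return FeedbackMode.PUSH
    if pull_condition_met(state):
        return FeedbackMode.PULL
    return None


print(next_feedback(AppState(), button_pressed=True))  # FeedbackMode.PUSH
print(next_feedback(AppState(completed_task=True, sessions_since_last_prompt=6), False))  # FeedbackMode.PULL
print(next_feedback(AppState(), False))  # None
```

The facial-expression button described in this project would be a push trigger; the same capture screen could in principle also be opened by a pull rule.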

Research shows that emotion recognition tools have been used to determine users’ satisfaction levels (Koonsanit & Nishiuchi, 2020). A tool that records the user’s emotion could therefore help developers understand how users feel about a service, since users’ emotions correlate with their satisfaction with the services an app offers. Fully automated technologies based on computer vision algorithms have improved considerably in recent years, making facial recognition tools and online code libraries well documented, reliable and accessible (González-Rodríguez, Díaz-Fernández & Pacheco Gómez, 2020). Chang et al. (2019) suggested that facial expression recognition is a modern technology that can be used in a playful setting that encourages users to engage with it.

As with any technology, there are limitations and potential biases to consider. Using the tool responsibly and ethically is very important: previous research suggests that security and privacy issues have not been addressed properly in recent years and that more emphasis needs to be placed on user privacy (González-Rodríguez, Díaz-Fernández & Pacheco Gómez, 2020).

User testing preparation

The insights from the previous iterations indicated that the model needed improvement and that the user testing needed a clearer scope and purpose.

Of the two no-code machine learning tools, I decided to explore Teachable Machine further. To improve the model, I trained it with more pictures, both scraped Google images and images taken with the laptop camera. First, I cleaned the Google images of noise such as hands or text; second, I took many laptop photos from different angles, under different lighting and at different distances, to increase the diversity of the data. I also adjusted the model’s hyper-parameters to see how the accuracy changed. The hyper-parameters available for this model are the number of epochs (one epoch is one full pass of every training sample through the model), the batch size (the number of samples used in one iteration of training) and the learning rate (how large a step each weight update takes). With the more diverse training data and the tuned hyper-parameters, a picture that was previously classified in the 🙁 class with 65% confidence is now correctly classified as 🙁 with 81% confidence (see Figure 1).
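The sketch below is not Teachable Machine’s training code. It is a minimal illustration, assuming a toy one-feature logistic regression and made-up data, of how the three hyper-parameters interact in mini-batch gradient descent: the number of epochs sets how many full passes are made over the data, the batch size sets how many samples contribute to each weight update, and the learning rate scales each update.

```python
import math
import random
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(samples: List[Tuple[float, int]], epochs: int = 50, batch_size: int = 16,
          learning_rate: float = 0.1, seed: int = 0) -> Tuple[float, float, int]:
    """Mini-batch gradient descent for a one-feature logistic regression.

    samples: (x, y) pairs, y = 1 for 🙂 and 0 for 🙁 in this toy setup.
    Returns the weight, the bias and the number of weight updates performed.
    """
    rng = random.Random(seed)
    data = list(samples)
    w, b, updates = 0.0, 0.0, 0
    for _ in range(epochs):                            # epoch: one full pass over all samples
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):  # batch: samples used in one iteration
            batch = data[start:start + batch_size]
            grad_w = grad_b = 0.0
            for x, y in batch:
                error = sigmoid(w * x + b) - y         # d(log-loss)/d(logit)
                grad_w += error * x
                grad_b += error
            w -= learning_rate * grad_w / len(batch)   # learning rate: size of each step
            b -= learning_rate * grad_b / len(batch)
            updates += 1
    return w, b, updates


# Made-up data: 50 positive and 50 negative samples of a single feature.
samples = [(i / 50, 1) for i in range(25, 75)] + [(-i / 50, 0) for i in range(25, 75)]
w, b, updates = train(samples)
print(updates)  # 350 = 50 epochs * ceil(100 / 16) = 50 * 7 batches
```

Halving the batch size would roughly double the number of updates per epoch, while a larger learning rate makes each update bigger; this is why changing these settings can shift the model’s accuracy.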

Figure 1. ML Model Result after Tweaking the Hyper-Parameters

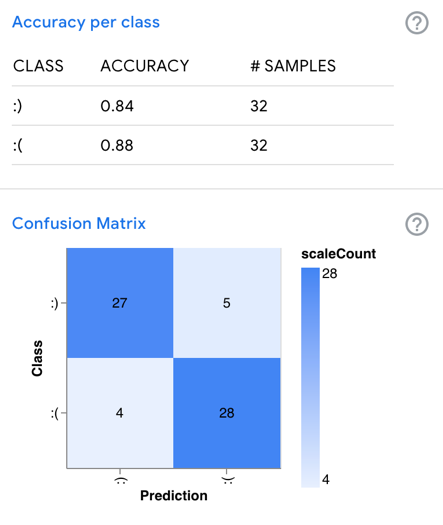

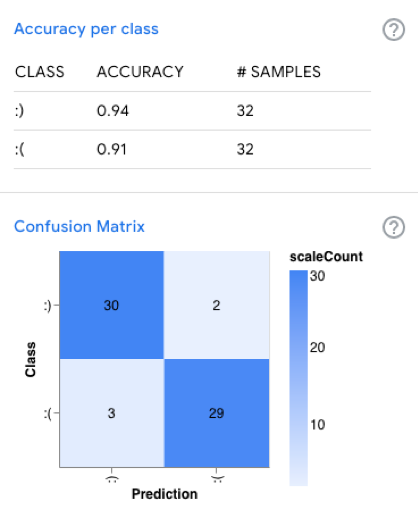

Figure 2. Accuracy and Confusion Matrix after Changing Hyper-Parameters
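Figure 2 reports accuracy and a confusion matrix. As a minimal sketch of how such numbers are derived for a two-class 🙂/🙁 model, the Python below computes both from lists of true and predicted labels. The labels are made up for illustration and are not the data behind Figure 2; the row/column orientation is a common convention and may differ from the tool’s display.

```python
from collections import Counter
from typing import Dict, List, Sequence

LABELS = ("🙂", "🙁")


def confusion_matrix(actual: Sequence[str], predicted: Sequence[str],
                     labels: Sequence[str] = LABELS) -> List[List[int]]:
    """Rows are the true class, columns the predicted class."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(t, p)] for p in labels] for t in labels]


def accuracy(actual: Sequence[str], predicted: Sequence[str]) -> float:
    """Fraction of samples whose predicted class equals the true class."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)


def per_class_accuracy(matrix: List[List[int]],
                       labels: Sequence[str] = LABELS) -> Dict[str, float]:
    """Correct predictions per true class divided by that class's sample count."""
    return {label: (row[i] / sum(row) if sum(row) else float("nan"))
            for i, (label, row) in enumerate(zip(labels, matrix))}


# Made-up held-out labels, not the data behind Figure 2.
actual = ["🙂", "🙂", "🙂", "🙁", "🙁"]
predicted = ["🙂", "🙂", "🙁", "🙁", "🙁"]
m = confusion_matrix(actual, predicted)
print(m)                            # [[2, 1], [0, 2]]
print(accuracy(actual, predicted))  # 0.8
print(per_class_accuracy(m))
```

The off-diagonal cell shows which way the model errs: here one 🙂 face was misread as 🙁, which overall accuracy alone would hide.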

The technology used is quick, easy to use and accurate at classifying the emotions it was trained on. However, it is trained only on the 🙂 and 🙁 classes, so it cannot make a meaningful classification of a person with an angry or surprised face.
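Why an angry or surprised face still gets a 🙂 or 🙁 label can be illustrated with a softmax over two classes: the output probabilities always sum to 100%, so every input is split between the two trained classes (the 65% and 81% values reported above are outputs of this kind). The sketch assumes made-up logits, and `threshold` is a hypothetical cut-off, not a Teachable Machine setting, showing one possible way to flag low-confidence predictions.

```python
import math
from typing import List, Optional, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1 (numerically stable form)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: Sequence[float], labels: Sequence[str] = ("🙂", "🙁"),
             threshold: float = 0.75) -> Tuple[Optional[str], List[float]]:
    """Return the top label, or None when its probability is below the threshold."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None, probs
    return labels[best], probs


# A face the model was never trained on (e.g. surprised) may produce similar scores:
label, probs = classify([0.2, 0.1])
print(label, [round(p, 3) for p in probs])  # None [0.525, 0.475]
label, probs = classify([2.0, -1.0])
print(label, round(probs[0], 3))            # 🙂 0.953
```

A threshold only catches uncertain outputs; an unseen expression can still receive a confident but wrong label, which is why adding more emotion classes to the training data is the more direct fix.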

User testing and insights

The user tests followed a usability testing approach aimed at uncovering problems, discovering opportunities and learning about users. The aim is to give users a quick, easy, innovative and inclusive way to give feedback on products or services, making feedback more visible and attractive. The target audience is younger, tech-savvy people who are interested in new technology but are not very active in giving feedback.

Participants were chosen because they occasionally provide feedback, either when prompted or on their own initiative. They were asked general questions about giving feedback, questions about the design and functionality of the feedback button and the feedback screen, and questions about giving feedback using facial expressions (see Annex).

From a design point of view, the users preferred the feedback button that clearly indicates what it does, has text next to it and is large enough to read clearly. They agreed that its position was good and that the general design was pleasing. One user mentioned that if the button does not fit in with the rest of the design and looks more like an ad pop-up, she would most likely not press it. Regarding the last screen in Figure 3, one user mentioned that the feedback feature is a good addition for rating recommendations.

Figure 3. Feedback Button Design

When asked about the design of the screen with the front camera, one user raised privacy and security concerns: the screen should include text explaining what happens to the captured images. Privacy concerns are also frequently raised in the literature (González-Rodríguez, Díaz-Fernández & Pacheco Gómez, 2020).

When asked whether they give feedback, the users said they are usually motivated to do so when they really like or dislike a service or product, since feedback is a good way of expressing how they feel and of measuring satisfaction. They added that this technology would prompt them to give more feedback than they already do, because it is quick, accessible and interesting. All of the users tested agreed that quick ways of giving feedback are the best, regardless of whether it is push or pull feedback. Overall, the users said they would use this way of giving feedback and would probably give feedback more often. Because it is a novel way to give feedback, it might also prompt more people to use it.
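Linking the privacy concern with the idea of measuring satisfaction, one possible design is to store only the classifier’s result for each feedback event and never the camera frame, then summarise satisfaction as the share of 🙂 events per feature. The Python below is a minimal sketch under that assumption; `FeedbackEvent`, `record_feedback`, `satisfaction_share` and the stand-in classifier are hypothetical names, and this is not how the prototype currently works.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Callable, List, Optional, Tuple

# Hypothetical classifier signature: takes a camera frame, returns (label, confidence).
Classifier = Callable[[Any], Tuple[str, float]]


@dataclass(frozen=True)
class FeedbackEvent:
    """What gets stored for one feedback event.

    There is deliberately no image field: in this sketch the camera frame is
    only passed to the classifier and never written to the event.
    """
    feature_id: str
    label: str          # "🙂" or "🙁"
    confidence: float   # probability of the predicted class
    mode: str           # "push" (user pressed the button) or "pull" (app asked)
    created_at: datetime


def record_feedback(feature_id: str, frame: Any, classify: Classifier,
                    mode: str = "push") -> FeedbackEvent:
    label, confidence = classify(frame)
    return FeedbackEvent(feature_id, label, confidence, mode,
                         datetime.now(timezone.utc))


def satisfaction_share(events: List[FeedbackEvent], feature_id: str) -> Optional[float]:
    """Share of 🙂 events for one feature, or None if there is no feedback yet."""
    relevant = [e for e in events if e.feature_id == feature_id]
    if not relevant:
        return None
    return sum(e.label == "🙂" for e in relevant) / len(relevant)


# Usage with a stand-in classifier (a real one would run the trained model).
fake_results = iter([("🙂", 0.9), ("🙂", 0.8), ("🙁", 0.85)])
events = [record_feedback("recommendations", object(), lambda _frame: next(fake_results))
          for _ in range(3)]
print(satisfaction_share(events, "recommendations"))  # 2 of 3 events are 🙂
```

Keeping the image out of the stored record also makes the explanatory text on the camera screen simpler to write, since it can state that only the detected expression is kept.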

One user suggested an interesting way forward: adding gamification elements in the form of rewards, which they already use in other applications and find motivating.

Conclusion and future research

This technology offers a novel way of giving feedback and could motivate users to use the feature more often and give more feedback than they already do. Future research should focus on the ethical and legal implications of using the front camera and on implementing the feature ethically. Going further, I would implement a more sophisticated machine learning model, such as a Convolutional Neural Network (CNN), which has become a prevalent image recognition technique (Abdullah & Abdulazeez, 2021). I would also add more emotions as classes. In addition, I would add gamification elements to make the feature more appealing and to incentivise users to share it with friends. Giving rewards could keep users in the app for longer and could also publicise the app when users share it. I believe the problem does not have a conclusive solution; it needs further testing and experimentation with different elements, such as gamification. Another aspect to look into is making this feature accessible and inclusive to everybody.
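The core operation of a CNN is a small kernel slid across the image to produce a feature map. The Python below is a minimal sketch of one such step (a "valid" convolution with stride 1, followed by a ReLU), using a made-up 4x4 image and a hand-picked edge kernel; in a real CNN the kernel values are learned during training and many layers are stacked.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D convolution as used in CNN layers (no padding, stride 1).

    Strictly this is cross-correlation (the kernel is not flipped), which is
    what deep learning libraries compute under the name "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Element-wise max(0, x), the usual non-linearity after a convolution."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# A 4x4 "image" with a dark-to-bright vertical edge, and a hand-made edge kernel.
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
kernel = [[-1.0, 1.0], [-1.0, 1.0]]
print(relu(conv2d(image, kernel)))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map responds only where the edge is, which is the kind of local pattern (mouth corners, eyebrows) that stacked convolutional layers can learn to detect in faces.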

References

Abdullah, S. M. S., & Abdulazeez, A. M. (2021). Facial Expression Recognition Based on Deep Learning Convolution Neural Network: A Review. Journal of Soft Computing and Data Mining, 2(1). https://doi.org/10.30880/jscdm.2021.02.01.006

Almaliki, M., Ncube, C., & Ali, R. (2014). The design of adaptive acquisition of users feedback: An empirical study. 2014 IEEE Eighth International Conference on Research Challenges in Information Science (RCIS). https://doi.org/10.1109/rcis.2014.6861076

Chang, W. J., Schmelzer, M., Kopp, F., Hsu, C. H., Su, J. P., Chen, L. B., & Chen, M. C. (2019). A Deep Learning Facial Expression Recognition based Scoring System for Restaurants. 2019 International Conference on Artificial Intelligence in Information and Communication (ICAIIC). https://doi.org/10.1109/icaiic.2019.8668998

González-Rodríguez, M., Díaz-Fernández, M., & Pacheco Gómez, C. (2020). Facial-expression recognition: An emergent approach to the measurement of tourist satisfaction through emotions. Telematics and Informatics, 51, 101404. https://doi.org/10.1016/j.tele.2020.101404

Koonsanit, K., & Nishiuchi, N. (2020). Classification of User Satisfaction Using Facial Expression Recognition and Machine Learning. 2020 IEEE Region 10 Conference (TENCON). https://doi.org/10.1109/tencon50793.2020.9293912

Ter-Tovmasyan, M., Scherr, S. A., & Elberzhager, F. (2021). Requirements for In-App Feedback Tools – a Mapping Study. Procedia Computer Science, 191, 111–118. https://doi.org/10.1016/j.procs.2021.07.017

Violante, M. G., Marcolin, F., Vezzetti, E., Ulrich, L., Billia, G., & Di Grazia, L. (2019). 3D Facial Expression Recognition for Defining Users’ Inner Requirements—An Emotional Design Case Study. Applied Sciences, 9(11), 2218. https://doi.org/10.3390/app9112218

Annex

User testing questions:

- Do you give feedback?

- What would make you press the feedback button?

- How do you feel about quick ways of giving feedback? (taking less than 10 seconds)

- Is this feature appealing to you?

- Would you use it more often (especially for negative feedback)? Would you give feedback more often?

- Is the button in a good position?

- Is the design of the feedback button pleasing? Do you see it easily?

- Does it fulfil its role and function? Is it user-friendly?

- Would you change something about the design of the front camera window when giving feedback?

- Would you give more feedback than you already do?

- Do you think that having a feedback button that is accessible and visible would incentivise more people to give feedback?

- Would it motivate you more if there were rewards for giving feedback?