Premise

Seeing someone similar and relatable makes people feel less alone and can lower the threshold for joining the Ministry of Defense.

Synopsis

I always wanted to join the Ministry of Defense, but I felt there was a threshold, so I did not join.

I found it interesting that my own perception of a male-dominated culture held me back, and I wanted to know whether the culture really is male-dominated. This is how I found out that women are heavily underrepresented and that there are not even any figures on how many people of different ethnicities or from the LGBTQ community work in Defense. Unfortunately, this was not the only troubling thing I found out: fewer than one in five employees would like to see more women in Defense, one in ten would support an increase in ethnic diversity, and 6% think there are currently too few LGBTQ personnel. I want to give these minorities a role model to lower the threshold.

Introduction

Minority groups are often excluded and even discriminated against. They are not represented everywhere, for example in parliament or in the ministries. Not everyone feels confident enough to level the playing field and fight for their rightful place in the world, because this is often accompanied by a fierce battle and a loss of hope. Those who do fight are wrongfully discriminated against based on their appearance, gender, sexual orientation, race, and so on. However, studies have shown that people tend to follow people who are similar to them, and that this starts early in life. People want to be represented, and so they crown these confident fighters as role models.

In this project, I investigate whether I can write code that measures similarities in people’s appearance.

For the past few weeks, I explored the possibilities of facial recognition, specifically facial similarity. I experimented with code in different Python environments and with all kinds of software. Let’s just say I had to pip install a lot. The chapter “Experiments” describes my learning process.

Experiments

I started this journey with some old-fashioned “Googling”. My search entries were: “face scan”, “TensorFlow”, “image recognition”, and “image recognition system based on human features”. I began with some exploratory research by reading articles and examples.

However, I could not find what I was looking for, so my next step was DataCamp, an online platform designed to teach data science. I started the course “Introduction to Deep Learning”, thinking that if I broadened my coding skills, I might be able to create the prototype from scratch. This was wishful thinking: I discovered I could not do so in a short amount of time.

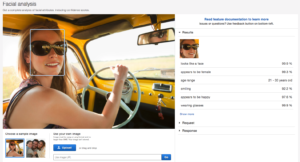

This is why I started looking into Amazon Rekognition. I found two demos. In the first, the system recognizes a face from one picture in another picture.

The other demo analyzed the face for gender, age, and emotions. It looked very promising. However, the line between what was free and what was not was very unclear, so to avoid a huge bill I started looking into other programs I could use.

I returned to Google, searched for “image recognition with face features”, and added “similarities” to the query. This led me to TensorFlow, so I researched what it entails and which models it offers. However, I did not find anything useful at that point, which meant going back to the drawing board.

I found example code by a woman who had written a Python program that takes an image of a bag as input and outputs pictures of similar bags. This code, with some of my adjustments, can be found at the links below:

https://github.com/SophieK23/State-of-the-Art-Technology/blob/main/image%20detection.py

https://github.com/SophieK23/State-of-the-Art-Technology/blob/main/feature%20vectors.py

This code uses image feature vectors. A feature vector is a list of numbers that represents a whole image, and it is commonly used for image similarity calculations and image classification tasks.
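
To make the idea concrete: once every image is a feature vector, finding “similar images” comes down to comparing vectors. Below is a minimal sketch, assuming cosine similarity as the comparison. This is a common choice, but not necessarily what the linked code uses. The sketch uses tiny hypothetical 4-number vectors in place of real network output:

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def most_similar(query, catalogue):
    """Return the catalogue key whose vector has the highest cosine similarity to query."""
    return max(catalogue, key=lambda name: cosine_similarity(query, catalogue[name]))


# Hypothetical toy vectors; real image feature vectors have far more entries.
catalogue = {
    "bag_a": [0.9, 0.1, 0.0, 0.3],
    "bag_b": [0.1, 0.8, 0.7, 0.0],
}
print(most_similar([0.8, 0.2, 0.1, 0.3], catalogue))  # bag_a
```

Cosine similarity ignores the overall length of a vector and only looks at its direction. As a result, two images with the same pattern of features count as similar even if one produces larger values overall.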

Low-level image features are small details of the image, such as lines, edges, corners, or dots. High-level features are built on top of these low-level features and capture objects and larger shapes.
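
A low-level feature such as an edge can be computed by sliding a small filter over the pixels. The sketch below applies the hand-designed Sobel kernel to a toy grayscale image. In a convolutional neural network, the first layers learn comparable filters from data instead of using a fixed one. This example therefore illustrates the idea rather than what MobileNetV2 actually computes:

```python
# Sobel kernel that responds to left-to-right changes in brightness (vertical edges).
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def convolve3x3(image, kernel):
    """Slide a 3x3 kernel over a grayscale image (a list of rows).

    Only positions where the kernel fits completely are computed, so the output
    is two rows and two columns smaller than the input. As in CNN layers, this is
    technically cross-correlation (the kernel is not flipped).
    """
    height, width = len(image), len(image[0])
    output = []
    for r in range(1, height - 1):
        row = []
        for c in range(1, width - 1):
            total = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    total += kernel[dr + 1][dc + 1] * image[r + dr][c + dc]
            row.append(total)
        output.append(row)
    return output


# Toy 3x5 image: dark on the left (0), bright on the right (10).
image = [[0, 0, 0, 10, 10] for _ in range(3)]
print(convolve3x3(image, SOBEL_X))  # [[0, 40, 40]]
```

The response is 0 where the image is flat and large where dark turns to bright, which is exactly where the vertical edge is.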

In this code, high-level features of product images are extracted using a pre-trained convolutional neural network named mobilenet_v2_140_224, which is hosted on TensorFlow Hub. MobileNetV2 is a lightweight neural network architecture designed for mobile and resource-constrained applications, which makes it relevant for my research.
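
The name mobilenet_v2_140_224 encodes the model variant: a width multiplier of 1.4 and an input size of 224 x 224 pixels. TensorFlow Hub image models generally expect RGB values scaled to the range [0, 1], so each image has to be resized and rescaled before the network can extract features. In practice, a library call such as tf.image.resize would handle the resizing. Below is a dependency-free sketch instead. It assumes that images arrive as rows of 0-255 (R, G, B) tuples and uses simple nearest-neighbour resizing, whereas libraries usually default to smoother methods such as bilinear:

```python
def resize_nearest(pixels, out_h, out_w):
    """Nearest-neighbour resize of an image given as rows of (R, G, B) tuples."""
    in_h, in_w = len(pixels), len(pixels[0])
    return [
        [pixels[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def preprocess(pixels, size=224):
    """Resize to size x size and scale 0-255 channel values to floats in [0, 1]."""
    resized = resize_nearest(pixels, size, size)
    return [[tuple(ch / 255.0 for ch in px) for px in row] for row in resized]


# A hypothetical 2x2 image: red, green / blue, white.
tiny = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
model_input = preprocess(tiny)
print(len(model_input), len(model_input[0]))  # 224 224
print(model_input[0][0], model_input[223][223])  # (1.0, 0.0, 0.0) (1.0, 1.0, 1.0)
```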

In the linked code, I explain the steps I took and what each part does.

The code was a good fit, but the model was trained on product images, not faces. This meant I needed another model and different code. Instead of trying to modify the code, which would have taken a lot of time given my limited experience, I decided to search for code better suited to my case.

During my search, I found the VGG (Visual Geometry Group) neural network models designed by researchers at Oxford. Besides being able to classify objects in photographs, these models have freely available weights that can be loaded and used in your own models and applications. There are different kinds of VGG models; I looked at VGG16 and VGG19. I found that these are also aimed at product images or at detecting objects, which is why I moved to the VGGFace weights, which are designed for facial recognition.
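
Freely available weights matter because of the size of these networks. The sketch below counts the trainable parameters of the standard VGG16 layout for 224 x 224 RGB input. This layout has 13 convolutional layers with 3 x 3 kernels and five max-pooling steps, followed by three dense layers. Training well over a hundred million numbers from scratch is out of reach for a small project, whereas pre-trained weights can simply be loaded. The function and its defaults are my own illustration, not part of any VGG library:

```python
# VGG16 convolutional stack: numbers are 3x3 conv output channels, "M" is 2x2 max-pooling.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]


def count_vgg16_params(num_classes=1000, input_size=224):
    """Return (convolutional parameters, total parameters) for VGG16."""
    params, in_ch, spatial = 0, 3, input_size
    for layer in VGG16_CONV:
        if layer == "M":
            spatial //= 2  # pooling halves height and width and has no weights
            continue
        params += 3 * 3 * in_ch * layer + layer  # kernel weights + one bias per filter
        in_ch = layer
    conv_params = params
    # Flattened conv output feeds two 4096-unit dense layers and the classifier.
    sizes = [in_ch * spatial * spatial, 4096, 4096, num_classes]
    for n_in, n_out in zip(sizes, sizes[1:]):
        params += n_in * n_out + n_out  # dense weights + biases
    return conv_params, params


print(count_vgg16_params())  # (14714688, 138357544)
```

About 14.7 million parameters sit in the convolutional layers and roughly 124 million in the dense layers. A face model built on the same layout would, as far as I understand, mainly differ in its final layer, which corresponds to the num_classes argument here.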

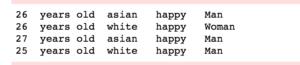

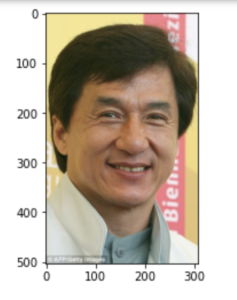

One of the options of the VGG model was analyzing photos based on age, race, emotion, and gender. I built this with DeepFace, created by Facebook. However, the results were not good. Jackie Chan was estimated to be 26 years old, which is not even half his age. The second picture was predicted nearly perfectly. Meghan Markle was predicted to be 10 years younger, the wrong gender, and African American. In the last picture, the age and race were predicted correctly, but the gender was wrong. In my opinion, the code does not work well and is not accurate.
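
Judging the predictions by eye works for a handful of photos, but a small score makes the comparison repeatable. Below is a minimal sketch. It assumes that each prediction and each known label is a dictionary with the hypothetical keys "age", "gender" and "race". The real DeepFace output is structured differently and varies by version. The example values are invented for illustration and are not my actual results:

```python
def score_predictions(predictions, labels):
    """Compare attribute predictions with known labels.

    Both arguments are equally long lists of dicts with the hypothetical keys
    "age" (a number), "gender" and "race" (strings).
    """
    if not labels or len(predictions) != len(labels):
        raise ValueError("need exactly one known label per prediction")
    pairs = list(zip(predictions, labels))
    n = len(pairs)
    return {
        "mean_age_error": sum(abs(p["age"] - t["age"]) for p, t in pairs) / n,
        "gender_accuracy": sum(p["gender"] == t["gender"] for p, t in pairs) / n,
        "race_accuracy": sum(p["race"] == t["race"] for p, t in pairs) / n,
    }


# Invented example values, for illustration only.
predicted = [
    {"age": 30, "gender": "Woman", "race": "group_a"},
    {"age": 40, "gender": "Woman", "race": "group_b"},
]
known = [
    {"age": 35, "gender": "Woman", "race": "group_a"},
    {"age": 40, "gender": "Man", "race": "group_b"},
]
print(score_predictions(predicted, known))
# {'mean_age_error': 2.5, 'gender_accuracy': 0.5, 'race_accuracy': 1.0}
```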

I looked into this analysis because I could not find what I was looking for. I thought that if I could retrieve these features, I could create a function that outputs an image similar to the input image.
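
The idea in the previous paragraph can be sketched as a nearest match on predicted attributes. This is a hypothetical illustration. The gallery, its keys, and the cost (age difference plus a fixed penalty per mismatching category) are my assumptions. The result can only be as good as the attribute predictions feeding it:

```python
def closest_by_attributes(query, gallery, mismatch_penalty=10.0):
    """Return the gallery name whose attributes are closest to the query.

    Cost = absolute age difference + mismatch_penalty for each categorical
    attribute ("gender", "race") that differs. Keys and penalty are assumptions.
    """
    def cost(attrs):
        total = abs(attrs["age"] - query["age"])
        for key in ("gender", "race"):
            if attrs[key] != query[key]:
                total += mismatch_penalty
        return total

    return min(gallery, key=lambda name: cost(gallery[name]))


# Hypothetical gallery of role models with (predicted) attributes.
gallery = {
    "role_model_1": {"age": 45, "gender": "Woman", "race": "group_a"},
    "role_model_2": {"age": 28, "gender": "Man", "race": "group_b"},
    "role_model_3": {"age": 30, "gender": "Woman", "race": "group_b"},
}
query = {"age": 26, "gender": "Woman", "race": "group_b"}
print(closest_by_attributes(query, gallery))  # role_model_3
```

With a penalty of 0, only age counts and role_model_2 would win. This shows how much the outcome depends on the chosen weights.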

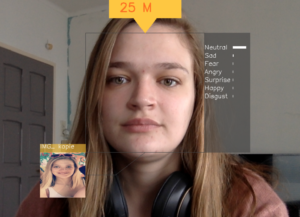

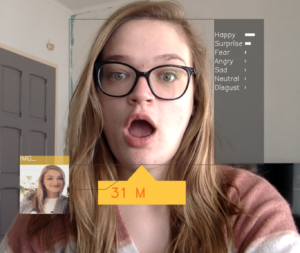

I looked further into the analysis of these features and tried to create a live stream with DeepFace. After I ran the code, the laptop’s webcam turned on, and every 10 seconds the live stream froze and an analysis of the frozen picture appeared. However, these results were as disappointing as those of the last experiment. Let’s take a look at the results:

In my opinion, the images are quite similar, yet the analysis output is very different. In the first picture, the age prediction is 5 years off, the gender is correct, and apparently I showed fear. In the second picture, everything was correct except the gender.

In the other two pictures, the gender was predicted incorrectly in both. The age prediction for the first picture is closer to my actual age (25 years old). In the first picture I tried to look angry, but the prediction was happy, whereas in the second picture I look surprised, which was predicted correctly.

Since the results were not accurate enough, I did not try to create a function for this analysis. In hindsight, this is not surprising, since this code did not use a pre-trained model: I used OpenCV, but not a model that had already been trained. I realize this now, but I did not when I was performing this test, which is why I classified this experiment as a failure and moved on.
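
The live-stream setup in this experiment, which analyses one frozen webcam frame roughly every 10 seconds, comes down to deciding which frames to pass to the analysis. In a real script, the frames would come from OpenCV (cv2.VideoCapture(0) and its read() method). The sketch below isolates only the timing logic so it can run without a webcam. It assumes that each frame has a capture time in seconds, and the function name is hypothetical:

```python
def frames_to_analyse(timestamps, interval=10.0):
    """Return the indices of frames to analyse, at most one per `interval` seconds.

    `timestamps` are capture times in seconds, in increasing order.
    """
    selected = []
    next_due = None
    for index, t in enumerate(timestamps):
        if next_due is None or t >= next_due:
            selected.append(index)
            next_due = t + interval
    return selected


# Hypothetical capture times of six webcam frames.
print(frames_to_analyse([0.0, 3.0, 9.9, 10.0, 15.0, 21.0]))  # [0, 3, 5]
```

This only decides which frames get analysed. It does not make the analysis itself faster or more accurate.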

Just as I started to lose hope, I finally found someone who had written code to find celebrity look-alikes. This was exactly what I was looking for, and I started modifying it into my final prototype. The prototype is explained in this link, which also explains at which point it does not work and why.
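
Look-alike finders usually work by turning every face into an embedding and returning the gallery faces whose embeddings are closest to the query. An embedding is a feature vector produced by a face model. Below is a minimal sketch, assuming Euclidean distance, toy two-number embeddings, and an optional distance cut-off so that “no good match” can be reported. This is not necessarily the distance the code I found uses:

```python
import math


def euclidean(a, b):
    """Straight-line distance between two embeddings of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_lookalikes(query, gallery, k=3, max_distance=None):
    """Return up to k (name, distance) pairs from gallery, nearest first.

    Matches farther away than max_distance are dropped, so an empty list
    means that nobody in the gallery looks similar enough.
    """
    ranked = sorted(
        ((name, euclidean(query, emb)) for name, emb in gallery.items()),
        key=lambda pair: pair[1],
    )
    if max_distance is not None:
        ranked = [pair for pair in ranked if pair[1] <= max_distance]
    return ranked[:k]


# Hypothetical two-number embeddings; real face embeddings are much longer.
gallery = {"celeb_a": [0.0, 0.0], "celeb_b": [3.0, 4.0], "celeb_c": [1.0, 0.0]}
print(find_lookalikes([0.0, 0.0], gallery, k=2))  # [('celeb_a', 0.0), ('celeb_c', 1.0)]
print(find_lookalikes([10.0, 10.0], gallery, max_distance=1.0))  # []
```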

Conclusion

It was a very interesting, frustrating, educational, and at times desperate journey. Over the last three weeks, it became clear that the project was too ambitious for me. I had zero coding experience before starting this master’s. I knew it would be a challenge, but I still underestimated it.

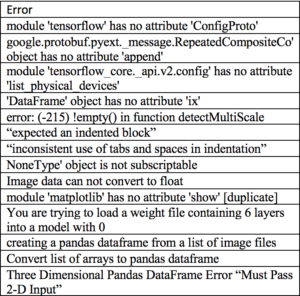

Looking back, I learned a lot about coding and about all the different tools that exist. The models, software, and example code are amazing and helpful. However, I had to figure out what every line did, which cost me a lot of time. It took so much time that I did not think through all the possible errors I could come across. The table underneath lists some of these errors. Having a billion errors every day can break your spirit, especially when you do not understand what is going on or what to do. Altogether, I learned a lot and made great progress in coding. I will certainly continue to master this and develop the prototype further.